Hands-On Linear Programming: Optimization With Python

Table of Contents

- What Is Linear Programming?

- What Is Mixed-Integer Linear Programming?

- Why Is Linear Programming Important?

- Linear Programming With Python

- Small Linear Programming Problem

- Infeasible Linear Programming Problem

- Unbounded Linear Programming Problem

- Resource Allocation Problem

- Installing SciPy and PuLP

- Using SciPy

- Linear Programming Resources

- Linear Programming Solvers

Linear programming is a set of techniques used in mathematical programming, sometimes called mathematical optimization, to solve systems of linear equations and inequalities while maximizing or minimizing some linear function. It’s important in fields like scientific computing, economics, technical sciences, manufacturing, transportation, military, management, energy, and so on.

The Python ecosystem offers several comprehensive and powerful tools for linear programming. You can choose between simple and complex tools as well as between free and commercial ones. It all depends on your needs.

In this tutorial, you’ll learn:

- What linear programming is and why it’s important

- Which Python tools are suitable for linear programming

- How to build a linear programming model in Python

- How to solve a linear programming problem with Python

You’ll first learn about the fundamentals of linear programming. Then you’ll explore how to implement linear programming techniques in Python. Finally, you’ll look at resources and libraries to help further your linear programming journey.


Linear Programming Explanation

In this section, you’ll learn the basics of linear programming and a related discipline, mixed-integer linear programming. In the next section, you’ll see some practical linear programming examples. Later, you’ll solve linear programming and mixed-integer linear programming problems with Python.

Imagine that you have a system of linear equations and inequalities. Such systems often have many possible solutions. Linear programming is a set of mathematical and computational tools that allows you to find a particular solution to this system that corresponds to the maximum or minimum of some other linear function.

Mixed-integer linear programming is an extension of linear programming. It handles problems in which at least one variable takes a discrete integer rather than a continuous value. Although mixed-integer problems look similar to continuous variable problems at first sight, they offer significant advantages in terms of flexibility and precision.

Integer variables are important for properly representing quantities naturally expressed with integers, like the number of airplanes produced or the number of customers served.

A particularly important kind of integer variable is the binary variable. It can take only the values zero or one and is useful in making yes-or-no decisions, such as whether a plant should be built or if a machine should be turned on or off. You can also use binary variables to mimic logical constraints.

Linear programming is a fundamental optimization technique that’s been used for decades in science- and math-intensive fields. It’s precise, relatively fast, and suitable for a range of practical applications.

Mixed-integer linear programming allows you to overcome many of the limitations of linear programming. You can approximate non-linear functions with piecewise linear functions, use semi-continuous variables, model logical constraints, and more. It’s a computationally intensive tool, but the advances in computer hardware and software make it more applicable every day.

Often, when people try to formulate and solve an optimization problem, the first question is whether they can apply linear programming or mixed-integer linear programming.

Some use cases of linear programming and mixed-integer linear programming are illustrated in the following articles:

- Gurobi Optimization Case Studies

- Five Areas of Application for Linear Programming Techniques

The importance of linear programming, and especially mixed-integer linear programming, has increased over time as computers have gotten more capable, algorithms have improved, and more user-friendly software solutions have become available.

The basic method for solving linear programming problems is called the simplex method, which has several variants. Another popular approach is the interior-point method.

Mixed-integer linear programming problems are solved with more complex and computationally intensive methods like the branch-and-bound method, which uses linear programming under the hood. Some variants of this method are the branch-and-cut method, which involves the use of cutting planes, and the branch-and-price method.

There are several suitable and well-known Python tools for linear programming and mixed-integer linear programming. Some of them are open source, while others are proprietary. Whether you need a free or paid tool depends on the size and complexity of your problem as well as on the need for speed and flexibility.

It’s worth mentioning that almost all widely used linear programming and mixed-integer linear programming libraries are written natively in Fortran, C, or C++. This is because linear programming requires computationally intensive work with (often large) matrices. Such libraries are called solvers. The Python tools are just wrappers around the solvers.

Python is suitable for building wrappers around native libraries because it works well with C/C++. You’re not going to need any C/C++ (or Fortran) for this tutorial, but if you want to learn more about this cool feature, then check out the following resources:

- Building a Python C Extension Module

- CPython Internals

- Extending Python with C or C++

Basically, when you define and solve a model, you use Python functions or methods to call a low-level library that does the actual optimization job and returns the solution to your Python object.

Several free Python libraries are specialized to interact with linear or mixed-integer linear programming solvers:

- SciPy Optimization and Root Finding

- PuLP

- Pyomo

- CVXOPT

In this tutorial, you’ll use SciPy and PuLP to define and solve linear programming problems.

Linear Programming Examples

In this section, you’ll see two examples of linear programming problems:

- A small problem that illustrates what linear programming is

- A practical problem related to resource allocation that illustrates linear programming concepts in a real-world scenario

You’ll use Python to solve these two problems in the next section.

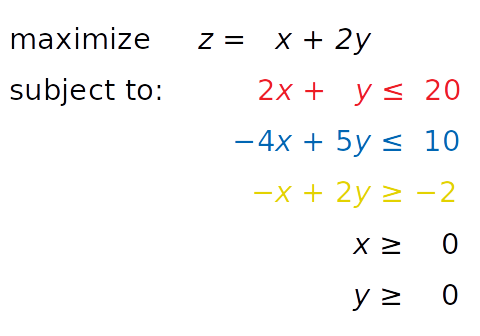

Consider the following linear programming problem:

You need to find x and y such that the red, blue, and yellow inequalities, as well as the inequalities x ≥ 0 and y ≥ 0, are satisfied. At the same time, your solution must correspond to the largest possible value of z.

The independent variables you need to find (in this case x and y) are called the decision variables. The function of the decision variables to be maximized or minimized (in this case z) is called the objective function, the cost function, or just the goal. The inequalities you need to satisfy are called the inequality constraints. You can also have equations among the constraints called equality constraints.
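Here is the small problem written out in one place. It is assembled from the objective z = x + 2y and from the red, blue, and yellow boundary lines that this tutorial states elsewhere. Treat it as a reconstruction, not a copy of the original equation figure:

```latex
\begin{aligned}
\max_{x,\,y}\quad & z = x + 2y \\
\text{subject to}\quad & 2x + y \le 20 && \text{(red)} \\
& -4x + 5y \le 10 && \text{(blue)} \\
& -x + 2y \ge -2 && \text{(yellow)} \\
& x \ge 0,\; y \ge 0
\end{aligned}
```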

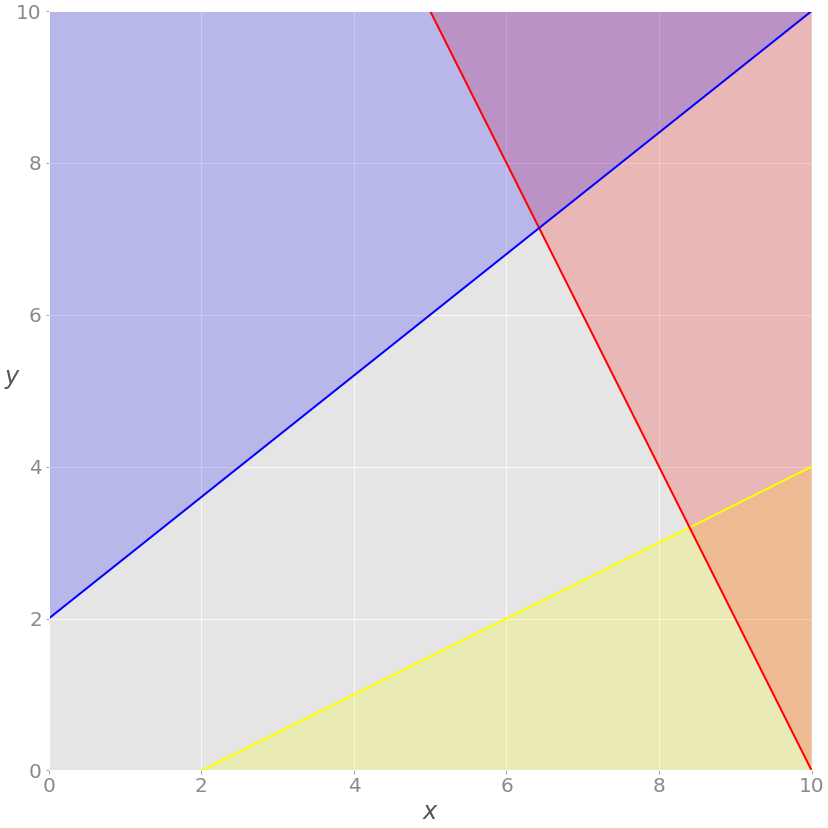

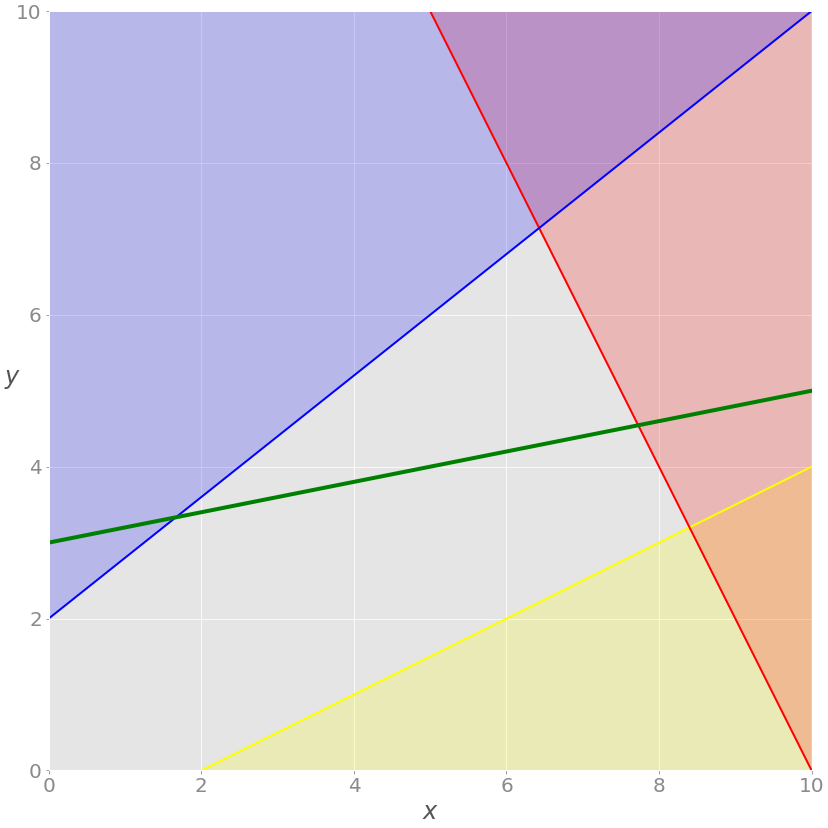

This is how you can visualize the problem:

The red line represents the function 2x + y = 20, and the red area above it shows where the red inequality is not satisfied. Similarly, the blue line is the function −4x + 5y = 10, and the blue area is forbidden because it violates the blue inequality. The yellow line is −x + 2y = −2, and the yellow area below it is where the yellow inequality isn’t valid.

If you disregard the red, blue, and yellow areas, only the gray area remains. Each point of the gray area satisfies all constraints and is a potential solution to the problem. This area is called the feasible region, and its points are feasible solutions. In this case, there’s an infinite number of feasible solutions.

You want to maximize z. The feasible solution that corresponds to maximal z is the optimal solution. If you were trying to minimize the objective function instead, then the optimal solution would correspond to its feasible minimum.

Note that z is linear. You can imagine it as a plane in three-dimensional space. This is why, if the problem has an optimal solution, at least one optimal solution lies on a vertex, or corner, of the feasible region. In this case, the optimal solution is the point where the red and blue lines intersect, as you’ll see later.

Sometimes a whole edge of the feasible region, or even the entire region, can correspond to the same value of z. In that case, you have many optimal solutions.
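To see the vertex property at work, here’s a small brute-force check in plain Python that uses no solver. It is only a sketch, and real LP solvers don’t work this way. It intersects every pair of boundary lines, keeps the intersections that satisfy all constraints, and evaluates z = x + 2y at each one. The constraint data comes from the red, blue, and yellow lines described above. The yellow inequality is multiplied by −1 so that every row reads a·x + b·y ≤ c:

```python
from fractions import Fraction
from itertools import combinations

# Every constraint is stored as (a, b, c), meaning a*x + b*y <= c.
# The yellow inequality is multiplied by -1, and x >= 0, y >= 0
# become -x <= 0 and -y <= 0.
CONSTRAINTS = {
    "red": (2, 1, 20),
    "blue": (-4, 5, 10),
    "yellow": (1, -2, 2),
    "x >= 0": (-1, 0, 0),
    "y >= 0": (0, -1, 0),
}


def objective(x, y):
    """Objective of the small problem: z = x + 2y."""
    return x + 2 * y


def is_feasible(x, y):
    """True if (x, y) satisfies every constraint."""
    return all(a * x + b * y <= c for a, b, c in CONSTRAINTS.values())


def vertices():
    """Intersect each pair of boundary lines and keep the feasible points."""
    points = set()
    for (a1, b1, c1), (a2, b2, c2) in combinations(CONSTRAINTS.values(), 2):
        det = a1 * b2 - a2 * b1
        if det == 0:  # parallel boundaries never intersect
            continue
        # Cramer's rule, with exact fractions to avoid rounding issues
        x = Fraction(c1 * b2 - c2 * b1, det)
        y = Fraction(a1 * c2 - a2 * c1, det)
        if is_feasible(x, y):
            points.add((x, y))
    return points


best = max(vertices(), key=lambda point: objective(*point))
print(sorted(vertices()))
print(best, float(objective(*best)))
```

The five feasible vertices are (0, 0), (2, 0), (0, 2), (8.4, 3.2), and (45/7, 50/7). The best one is (45/7, 50/7) ≈ (6.43, 7.14), where the red and blue lines meet, with z = 145/7 ≈ 20.71. Brute force like this only works for tiny problems because the number of candidate vertices grows combinatorially.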

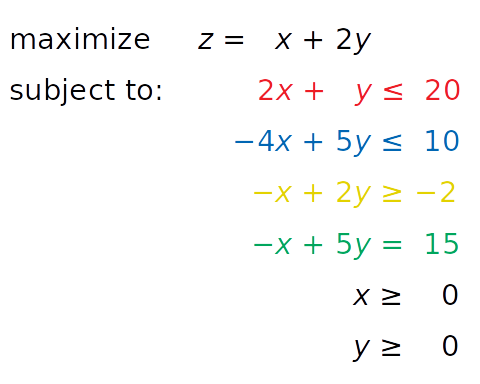

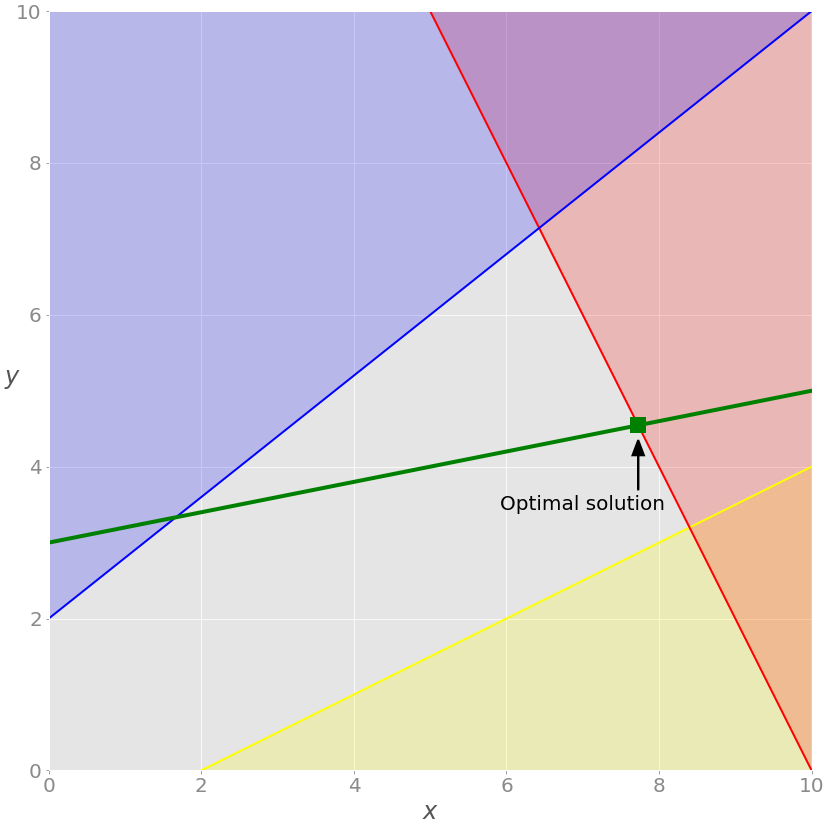

You’re now ready to expand the problem with the additional equality constraint shown in green:

The equation −x + 5y = 15, written in green, is new. It’s an equality constraint. You can visualize it by adding a corresponding green line to the previous image:

The solution now must satisfy the green equality, so the feasible region isn’t the entire gray area anymore. It’s the part of the green line passing through the gray area from the intersection point with the blue line to the intersection point with the red line. The latter point is the solution.

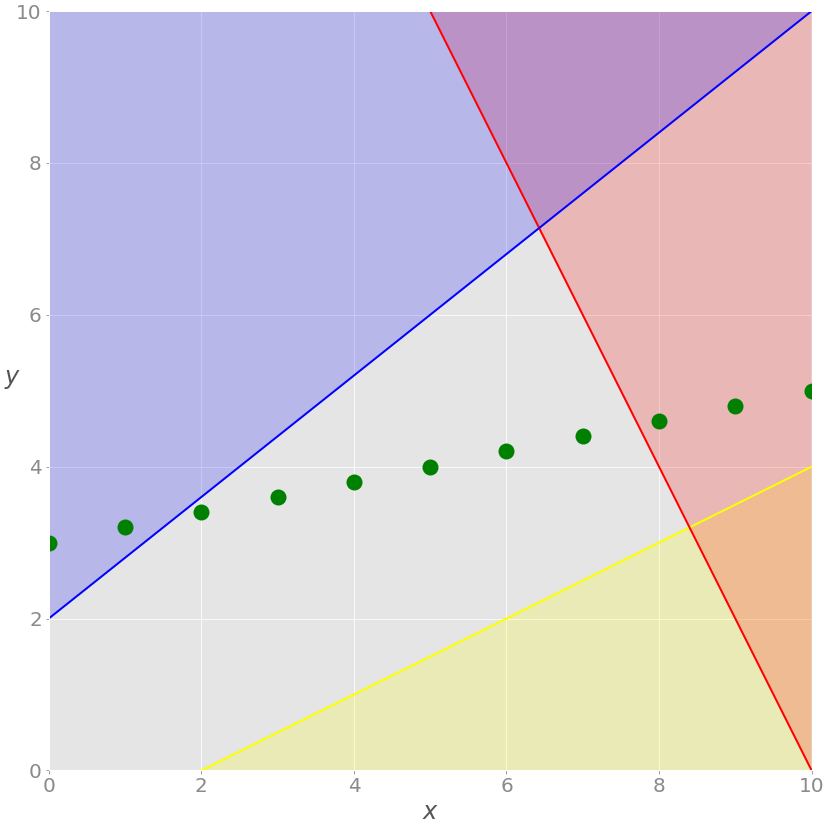

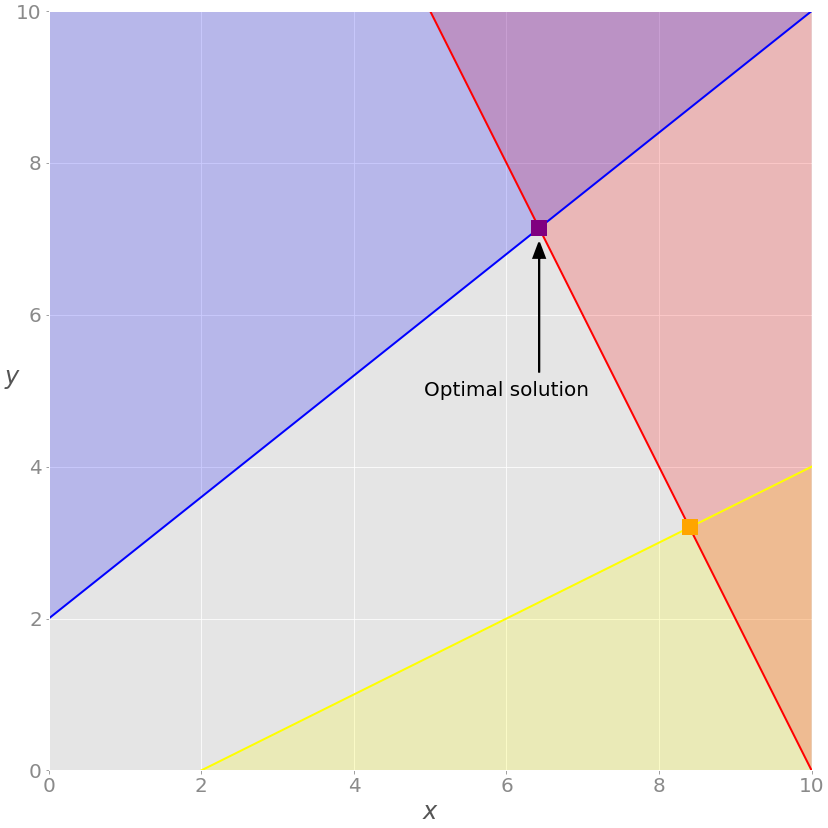

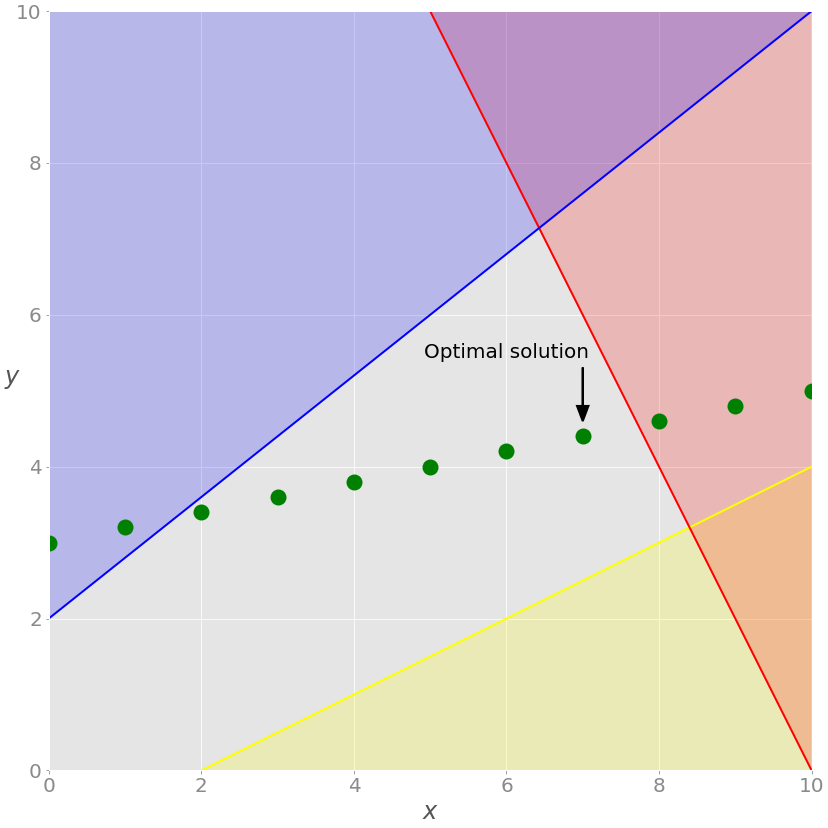

If you insert the demand that all values of x must be integers, then you’ll get a mixed-integer linear programming problem, and the set of feasible solutions will change once again:

You no longer have the green line, only the points along the line where the value of x is an integer. The feasible solutions are the green points on the gray background, and the optimal one in this case is nearest to the red line.
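The green equation fixes y once x is chosen (y = (15 + x)/5), so this mixed-integer version is small enough to enumerate directly. The plain-Python sketch below tries each integer x and keeps the points that also satisfy the red, blue, and yellow inequalities. It then picks the one with the largest z = x + 2y:

```python
from fractions import Fraction


def satisfies_inequalities(x, y):
    """Red, blue, and yellow constraints plus nonnegativity."""
    return (
        2 * x + y <= 20           # red
        and -4 * x + 5 * y <= 10  # blue
        and -x + 2 * y >= -2      # yellow
        and x >= 0
        and y >= 0
    )


# With y >= 0, the red constraint 2x + y <= 20 caps x at 10.
candidates = []
for x in range(11):
    y = Fraction(15 + x, 5)  # the green equation -x + 5y = 15 solved for y
    if satisfies_inequalities(x, y):
        candidates.append((x, y))

best_x, best_y = max(candidates, key=lambda point: point[0] + 2 * point[1])
print([x for x, _ in candidates])
print(best_x, float(best_y), float(best_x + 2 * best_y))
```

The feasible integers are x = 2 through 7. The best point is x = 7, y = 4.4, with z = 15.8. Enumeration only works here because there’s a single integer variable with a short range. General mixed-integer solvers rely on methods like branch-and-bound instead.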

These three examples illustrate feasible linear programming problems because they have bounded feasible regions and finite solutions.

A linear programming problem is infeasible if it doesn’t have a feasible solution, that is, if no point can satisfy all constraints at once.

For example, consider what would happen if you added the constraint x + y ≤ −1. Then at least one of the decision variables (x or y) would have to be negative. This is in conflict with the given constraints x ≥ 0 and y ≥ 0. Such a system doesn’t have a feasible solution, so it’s called infeasible.

Another example would be adding a second equality constraint parallel to the green line. These two lines wouldn’t have a point in common, so there wouldn’t be a solution that satisfies both constraints.

A linear programming problem is unbounded if its feasible region isn’t bounded and the objective can be improved indefinitely, so there’s no finite optimal solution. This means that at least one of your variables isn’t constrained and can grow toward positive or negative infinity, making the objective infinite as well.

For example, say you take the initial problem above and drop the red and yellow constraints. Dropping constraints out of a problem is called relaxing the problem. In such a case, x and y wouldn’t be bounded on the positive side. You’d be able to increase them toward positive infinity, yielding an infinitely large z value.
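A quick way to see both failure modes is to give each problem to a solver and check the result. The sketch below uses scipy.optimize.linprog(), which this tutorial introduces properly later. It relies on linprog()’s default bounds, which keep x and y nonnegative. According to the linprog() documentation, status 2 means infeasible and 3 means unbounded. Some solver backends may report a combined "infeasible or unbounded" outcome instead, so the code only prints what comes back:

```python
from scipy.optimize import linprog

# Maximize z = x + 2y by minimizing -x - 2y; linprog() keeps x, y >= 0 by default.
c = [-1, -2]

# Infeasible: the red, blue, and yellow (flipped) constraints plus x + y <= -1
infeasible = linprog(
    c,
    A_ub=[[2, 1], [-4, 5], [1, -2], [1, 1]],
    b_ub=[20, 10, 2, -1],
)

# Unbounded: relax the problem by dropping the red and yellow constraints
unbounded = linprog(c, A_ub=[[-4, 5]], b_ub=[10])

for label, result in [("infeasible", infeasible), ("unbounded", unbounded)]:
    print(f"{label}: success={result.success}, status={result.status}")
    print(f"  {result.message}")
```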

In the previous sections, you looked at an abstract linear programming problem that wasn’t tied to any real-world application. In this subsection, you’ll find a more concrete and practical optimization problem related to resource allocation in manufacturing.

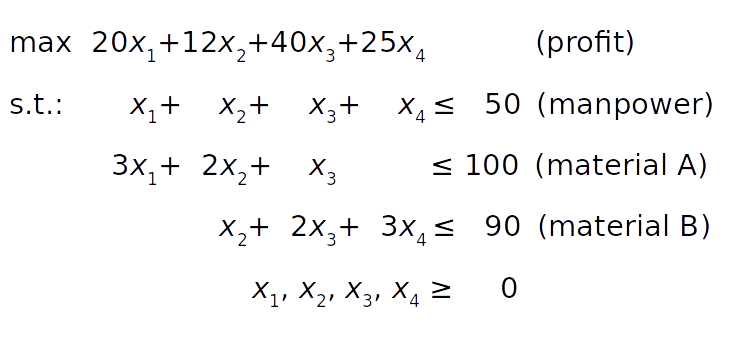

Say that a factory produces four different products, and that the daily produced amount of the first product is x₁, the amount produced of the second product is x₂, and so on. The goal is to determine the profit-maximizing daily production amount for each product, bearing in mind the following conditions:

1. The profit per unit of product is $20, $12, $40, and $25 for the first, second, third, and fourth product, respectively.

2. Due to manpower constraints, the total number of units produced per day can’t exceed fifty.

3. For each unit of the first product, three units of the raw material A are consumed. Each unit of the second product requires two units of the raw material A and one unit of the raw material B. Each unit of the third product needs one unit of A and two units of B. Finally, each unit of the fourth product requires three units of B.

4. Due to the transportation and storage constraints, the factory can consume up to one hundred units of the raw material A and ninety units of B per day.

The mathematical model can be defined like this:

The objective function (profit) is defined in condition 1. The manpower constraint follows from condition 2. The constraints on the raw materials A and B can be derived from conditions 3 and 4 by summing the raw material requirements for each product.

Finally, the product amounts can’t be negative, so all decision variables must be greater than or equal to zero.
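Written out from the four conditions, the resource allocation model reads:

```latex
\begin{aligned}
\max\quad & 20x_1 + 12x_2 + 40x_3 + 25x_4 && \text{(profit)} \\
\text{subject to}\quad & x_1 + x_2 + x_3 + x_4 \le 50 && \text{(manpower)} \\
& 3x_1 + 2x_2 + x_3 \le 100 && \text{(raw material A)} \\
& x_2 + 2x_3 + 3x_4 \le 90 && \text{(raw material B)} \\
& x_1, x_2, x_3, x_4 \ge 0
\end{aligned}
```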

Unlike the previous example, you can’t conveniently visualize this one because it has four decision variables. However, the principles remain the same regardless of the dimensionality of the problem.

Linear Programming Python Implementation

In this tutorial, you’ll use two Python packages to solve the linear programming problem described above:

- SciPy is a general-purpose package for scientific computing with Python.

- PuLP is a Python linear programming API for defining problems and invoking external solvers.

SciPy is straightforward to set up. Once you install it, you’ll have everything you need to start. Its subpackage scipy.optimize can be used for both linear and nonlinear optimization.

PuLP allows you to choose solvers and formulate problems in a more natural way. The default solver used by PuLP is the COIN-OR Branch and Cut Solver (CBC). It’s connected to the COIN-OR Linear Programming Solver (CLP) for linear relaxations and the COIN-OR Cut Generator Library (CGL) for cut generation.

Another great open source solver is the GNU Linear Programming Kit (GLPK). Some well-known and very powerful commercial and proprietary solutions are Gurobi, CPLEX, and XPRESS.

Besides offering flexibility when defining problems and the ability to run various solvers, PuLP is less complicated to use than alternatives like Pyomo or CVXOPT, which require more time and effort to master.

To follow this tutorial, you’ll need to install SciPy and PuLP. The examples below use version 1.4.1 of SciPy and version 2.1 of PuLP.

You can install both using pip, for example with the command python -m pip install scipy pulp.

You might need to run pulptest or sudo pulptest to enable the default solvers for PuLP, especially if you’re using Linux or macOS.

Optionally, you can download, install, and use GLPK. It’s free and open source and works on Windows, macOS, and Linux. You’ll see how to use GLPK (in addition to CBC) with PuLP later in this tutorial.

On Windows, you can download the archives and run the installation files.

On macOS, you can use Homebrew with the command brew install glpk.

On Debian and Ubuntu, use apt to install glpk and glpk-utils, for example with sudo apt install glpk glpk-utils.

On Fedora, use dnf to install glpk-utils with sudo dnf install glpk-utils.

You might also find conda useful for installing GLPK, for example with conda install -c conda-forge glpk.

After completing the installation, you can check the version of GLPK with glpsol --version.

See GLPK’s tutorials on installing with Windows executables and Linux packages for more information.

In this section, you’ll learn how to use the SciPy optimization and root-finding library for linear programming.

To define and solve optimization problems with SciPy, you need to import linprog() from scipy.optimize, for example with from scipy.optimize import linprog.

Now that you have linprog() imported, you can start optimizing.

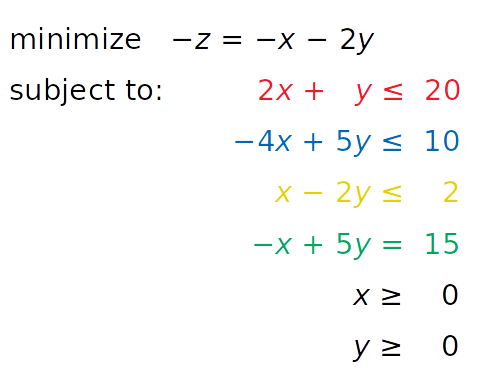

Let’s first solve the linear programming problem from above:

linprog() solves only minimization (not maximization) problems and doesn’t allow inequality constraints with the greater than or equal to sign (≥). To work around these issues, you need to modify your problem before starting optimization:

- Instead of maximizing z = x + 2y, you can minimize its negative (−z = −x − 2y).

- Instead of having the greater than or equal to sign, you can multiply the yellow inequality by −1 and get the opposite less than or equal to sign (≤).

After introducing these changes, you get a new system:
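Spelled out from the red, blue, yellow, and green constraints, with the objective negated and the yellow inequality flipped, the system is:

```latex
\begin{aligned}
\min\quad & -z = -x - 2y \\
\text{subject to}\quad & 2x + y \le 20 && \text{(red)} \\
& -4x + 5y \le 10 && \text{(blue)} \\
& x - 2y \le 2 && \text{(yellow, multiplied by } -1\text{)} \\
& -x + 5y = 15 && \text{(green)} \\
& x \ge 0,\; y \ge 0
\end{aligned}
```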

This system is equivalent to the original and will have the same solution. The only reason to apply these changes is to overcome the limitations of SciPy related to the problem formulation.

The next step is to define the input values:

You put the values from the system above into the appropriate lists, tuples , or NumPy arrays :

- obj holds the coefficients from the objective function.

- lhs_ineq holds the left-side coefficients from the inequality (red, blue, and yellow) constraints.

- rhs_ineq holds the right-side coefficients from the inequality (red, blue, and yellow) constraints.

- lhs_eq holds the left-side coefficients from the equality (green) constraint.

- rhs_eq holds the right-side coefficients from the equality (green) constraint.

Note: Be careful with the order of rows and columns!

The order of the rows for the left and right sides of the constraints must be the same. Each row represents one constraint.

The order of the coefficients from the objective function and left sides of the constraints must match. Each column corresponds to a single decision variable.
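One way to write those input values is with plain Python lists. In this sketch, the comments record which constraint each row comes from. The final asserts are an optional safeguard for the row and column rules in the note above; linprog() doesn’t require them:

```python
# Column order is (x, y) everywhere: in obj and in every constraint row.
obj = [-1, -2]  # minimize -z = -x - 2y

# Row order: red, blue, yellow. rhs_ineq must follow the same order.
lhs_ineq = [
    [2, 1],   # red:     2x +  y <= 20
    [-4, 5],  # blue:   -4x + 5y <= 10
    [1, -2],  # yellow:   x - 2y <= 2  (multiplied by -1)
]
rhs_ineq = [20, 10, 2]

lhs_eq = [[-1, 5]]  # green: -x + 5y = 15
rhs_eq = [15]

# Quick consistency checks: one right-hand side per row,
# and one coefficient per decision variable in each row.
assert len(lhs_ineq) == len(rhs_ineq)
assert len(lhs_eq) == len(rhs_eq)
assert all(len(row) == len(obj) for row in lhs_ineq + lhs_eq)
```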

The next step is to define the bounds for each variable in the same order as the coefficients. In this case, they’re both between zero and positive infinity, which you can write as bnd = [(0, float("inf")), (0, float("inf"))].

This statement is redundant because linprog() takes these bounds (zero to positive infinity) by default.

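Note: Instead of float("inf"), you can use math.inf or numpy.inf, or scipy.inf in older SciPy releases such as 1.4.1. linprog() also accepts None to indicate that a variable has no bound on that side.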

Finally, it’s time to optimize and solve your problem of interest. You can do that with linprog() :

The parameter c refers to the coefficients from the objective function. A_ub and b_ub are related to the coefficients from the left and right sides of the inequality constraints, respectively. Similarly, A_eq and b_eq refer to equality constraints. You can use bounds to provide the lower and upper bounds on the decision variables.
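Put together, the call could look like the sketch below. It passes the bounds explicitly to mirror the text, even though they match the default. It also leaves out method, so SciPy uses the default for your installed version: the interior-point method in SciPy 1.4.1, and HiGHS in newer releases.

```python
from scipy.optimize import linprog

obj = [-1, -2]                         # minimize -z = -x - 2y
lhs_ineq = [[2, 1], [-4, 5], [1, -2]]  # red, blue, yellow (flipped)
rhs_ineq = [20, 10, 2]
lhs_eq = [[-1, 5]]                     # green
rhs_eq = [15]

# Bounds for (x, y); (0, inf) is also linprog()'s default
bnd = [(0, float("inf")), (0, float("inf"))]

opt = linprog(
    c=obj,
    A_ub=lhs_ineq,
    b_ub=rhs_ineq,
    A_eq=lhs_eq,
    b_eq=rhs_eq,
    bounds=bnd,
)
print(opt.fun, opt.x)
```

The optimum is x = 85/11 ≈ 7.727 and y = 50/11 ≈ 4.545. opt.fun is about −16.818, the negative of the maximal z, because the problem was converted to minimization.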

You can use the parameter method to define the linear programming method that you want to use. There are three options:

- method="interior-point" selects the interior-point method. This option is set by default.

- method="revised simplex" selects the revised two-phase simplex method.

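- method="simplex" selects the legacy two-phase simplex method.

These are the options in SciPy 1.4.1, the version used in this tutorial. Newer SciPy releases add HiGHS-based methods and make method="highs" the default. They also deprecated, and later removed, the three legacy methods listed here.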

linprog() returns a data structure with these attributes:

.con holds the equality constraint residuals.

.fun is the objective function value at the optimum (if found).

.message is the status of the solution.

.nit is the number of iterations needed to finish the calculation.

.slack holds the values of the slack variables, or the differences between the values of the right and left sides of the inequality constraints.

.status is an integer between 0 and 4 that shows the status of the solution, such as 0 when the optimal solution has been found, 2 when the problem is infeasible, or 3 when it’s unbounded.

.success is a Boolean that shows whether the optimal solution has been found.

.x is a NumPy array holding the optimal values of the decision variables.

You can access these values separately:
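The sketch below reads these attributes for the same small problem, this time relying on the default bounds. It checks .success before using the numbers and flips the sign of .fun back to get the maximal z:

```python
from scipy.optimize import linprog

opt = linprog(
    c=[-1, -2],
    A_ub=[[2, 1], [-4, 5], [1, -2]],
    b_ub=[20, 10, 2],
    A_eq=[[-1, 5]],
    b_eq=[15],
)

if opt.success:           # equivalent to opt.status == 0
    z_max = -opt.fun      # undo the sign flip to recover max z
    x, y = opt.x
    print(f"x = {x:.4f}, y = {y:.4f}, z = {z_max:.4f}")
    print("slack (red, blue, yellow):", opt.slack)
    print("equality residual (green):", opt.con)
    print("iterations:", opt.nit)
else:
    print("No optimum found:", opt.message)
```

For this problem, the red slack is zero because the optimum lies on the red line. The blue and yellow slacks are 200/11 ≈ 18.18 and 37/11 ≈ 3.36.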

That’s how you get the results of optimization. You can also show them graphically:

As discussed earlier, the optimal solutions to linear programming problems lie at the vertices of the feasible regions. In this case, the feasible region is just the portion of the green line between the blue and red lines. The optimal solution is the green square that represents the point of intersection between the green and red lines.

If you want to exclude the equality (green) constraint, just drop the parameters A_eq and b_eq from the linprog() call:
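Here is a minimal sketch of that call. It reuses the inequality data from before and simply leaves out A_eq and b_eq:

```python
from scipy.optimize import linprog

# Same problem without A_eq and b_eq, so the green constraint is gone
opt = linprog(
    c=[-1, -2],
    A_ub=[[2, 1], [-4, 5], [1, -2]],
    b_ub=[20, 10, 2],
    bounds=[(0, float("inf")), (0, float("inf"))],
)
print(opt.fun, opt.x)
```

Without the green line, the optimum moves to the red-blue intersection at x = 45/7 ≈ 6.429 and y = 50/7 ≈ 7.143. opt.fun becomes about −20.714, that is, z ≈ 20.714.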

The solution is different from the previous case. You can see it on the chart:

In this example, the optimal solution is the purple vertex of the feasible (gray) region where the red and blue constraints intersect. Other vertices, like the yellow one, have higher values of the minimized objective −z, which means lower values of z.

You can use SciPy to solve the resource allocation problem stated in the earlier section:

As in the previous example, you need to extract the necessary vectors and matrix from the problem above, pass them as the arguments to .linprog() , and get the results:
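The sketch below shows that extraction. The rows follow the order manpower, raw material A, raw material B, and the columns follow x₁ through x₄. The profits are negated because linprog() minimizes:

```python
from scipy.optimize import linprog

# Profit per unit, negated because linprog() minimizes
obj = [-20, -12, -40, -25]

lhs_ineq = [
    [1, 1, 1, 1],  # manpower: at most 50 units in total
    [3, 2, 1, 0],  # raw material A: at most 100 units
    [0, 1, 2, 3],  # raw material B: at most 90 units
]
rhs_ineq = [50, 100, 90]

# The default bounds already keep every x_i >= 0
opt = linprog(c=obj, A_ub=lhs_ineq, b_ub=rhs_ineq)

print("max profit:", -opt.fun)
print("amounts x1..x4:", opt.x)
print("slacks:", opt.slack)
```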

The result tells you that the maximal profit is 1900 and corresponds to x₁ = 5 and x₃ = 45. It’s not profitable to produce the second and fourth products under the given conditions. You can draw several interesting conclusions here:

The third product brings the largest profit per unit, so the factory will produce it the most.

The first slack is 0, which means that the values of the left and right sides of the manpower (first) constraint are the same. The factory produces 50 units per day, and that’s its full capacity.

The second slack is 40 because the factory consumes 60 units of raw material A (15 units for the first product plus 45 for the third) out of a potential 100 units.

The third slack is 0, which means that the factory consumes all 90 units of the raw material B. This entire amount is consumed for the third product. That’s why the factory can’t produce more than 45 units of the third product and why, in the optimal plan, it produces none of the second or fourth products, which also need raw material B.

opt.status is 0 and opt.success is True, indicating that the optimization problem was successfully solved with the optimal feasible solution.

SciPy’s linear programming capabilities are useful mainly for smaller problems. For larger and more complex problems, you might find other libraries more suitable for the following reasons:

SciPy can’t run various external solvers.

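SciPy 1.4.1 can’t work with integer decision variables. (Newer releases, starting with SciPy 1.9, add mixed-integer support through scipy.optimize.milp().)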

SciPy doesn’t provide classes or functions that facilitate model building. You have to define arrays and matrices, which can be tedious and error-prone for large problems.

SciPy doesn’t allow you to define maximization problems directly. You must convert them to minimization problems.

SciPy doesn’t allow you to define constraints using the greater-than-or-equal-to sign directly. You must use the less-than-or-equal-to instead.

Fortunately, the Python ecosystem offers several alternative solutions for linear programming that are very useful for larger problems. One of them is PuLP, which you’ll see in action in the next section.

PuLP has a more convenient linear programming API than SciPy. You don’t have to mathematically modify your problem or use vectors and matrices. Everything is cleaner and less prone to errors.

As usual, you start by importing what you need, for example with from pulp import LpMaximize, LpProblem, LpStatus, lpSum, LpVariable.

Now that you have PuLP imported, you can solve your problems.

You’ll now solve this system with PuLP:

The first step is to initialize an instance of LpProblem to represent your model:

You use the sense parameter to choose whether to perform minimization (LpMinimize or 1, which is the default) or maximization (LpMaximize or -1). This choice will affect the result of your problem.

Once you have the model, you can define the decision variables as instances of the LpVariable class:

You need to provide a lower bound with lowBound=0 because the default value is negative infinity. The parameter upBound defines the upper bound, but you can omit it here because it defaults to positive infinity.

The optional parameter cat defines the category of a decision variable. If you’re working with continuous variables, then you can use the default value "Continuous".

You can use the variables x and y to create other PuLP objects that represent linear expressions and constraints:

When you multiply a decision variable with a scalar or build a linear combination of multiple decision variables, you get an instance of pulp.LpAffineExpression that represents a linear expression.

Note: You can add or subtract variables or expressions, and you can multiply them with constants because PuLP classes implement some of the Python special methods that emulate numeric types, like __add__(), __sub__(), and __mul__(). These methods are used to customize the behavior of operators like +, -, and *.

Similarly, you can combine linear expressions, variables, and scalars with the operators ==, <=, or >= to get instances of pulp.LpConstraint that represent the linear constraints of your model.

Note: It’s also possible to build constraints with the rich comparison methods .__eq__(), .__le__(), and .__ge__() that define the behavior of the operators ==, <=, and >=.
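Here’s a sketch of those first steps, assuming PuLP 2.x. The model name "small-problem" and the sample expression and constraint are illustrative. The printed type names show which PuLP classes the operators produce:

```python
from pulp import LpAffineExpression, LpConstraint, LpMaximize, LpProblem, LpVariable

# The model: sense=LpMaximize turns it into a maximization problem
model = LpProblem(name="small-problem", sense=LpMaximize)

# Continuous decision variables bounded below by zero
x = LpVariable(name="x", lowBound=0)
y = LpVariable(name="y", lowBound=0)

# Arithmetic on variables builds a linear expression ...
expression = 2 * x + 4 * y
# ... and a comparison operator turns an expression into a constraint
constraint = 2 * x + 4 * y >= 8

print(type(expression).__name__, expression)
print(type(constraint).__name__, constraint)
```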

Having this in mind, the next step is to create the constraints and objective function as well as to assign them to your model. You don’t need to create lists or matrices. Just write Python expressions and use the += operator to append them to the model:

In the above code, you define tuples that hold the constraints and their names. LpProblem allows you to add constraints to a model by specifying them as tuples. The first element is an LpConstraint instance. The second element is a human-readable name for that constraint.

Setting the objective function is very similar:

Alternatively, you can use a shorter notation:

Now you have the objective function added and the model defined.

Note: You can append a constraint or objective to the model with the operator += because its class, LpProblem, implements the special method .__iadd__(), which is used to specify the behavior of +=.

For larger problems, it’s often more convenient to use lpSum() with a list or other sequence than to repeat the + operator. For example, you could add the objective function to the model with this statement:

It produces the same result as the previous statement.
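Here is a sketch of the full small model with those pieces together. The constraint names are illustrative. The objective is added with lpSum() to show that it’s interchangeable with writing x + 2 * y:

```python
from pulp import LpMaximize, LpProblem, LpVariable, lpSum

model = LpProblem(name="small-problem", sense=LpMaximize)
x = LpVariable(name="x", lowBound=0)
y = LpVariable(name="y", lowBound=0)

# Constraints as (LpConstraint, name) tuples appended with +=
model += (2 * x + y <= 20, "red_constraint")
model += (-4 * x + 5 * y <= 10, "blue_constraint")
model += (-x + 2 * y >= -2, "yellow_constraint")
model += (-x + 5 * y == 15, "green_constraint")

# An expression (not a constraint) added with += becomes the objective
model += lpSum([x, 2 * y])  # same as model += x + 2 * y

print(model)
```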

You can now see the full definition of this model:

The string representation of the model contains all relevant data: the variables, constraints, objective, and their names.

Note: String representations are built by defining the special method .__repr__(). For more details about .__repr__(), check out Pythonic OOP String Conversion: __repr__ vs __str__ or When Should You Use .__repr__() vs .__str__() in Python?

Finally, you’re ready to solve the problem. You can do that by calling .solve() on your model object. If you want to use the default solver (CBC), then you don’t need to pass any arguments:

.solve() calls the underlying solver, modifies the model object, and returns the integer status of the solution, which will be 1 if the optimum is found. For the other status codes, see LpStatus, a dictionary that maps each status code to a description such as "Optimal" or "Infeasible".

You can get the optimization results as the attributes of model . The function value() and the corresponding method .value() return the actual values of the attributes:

model.objective holds the value of the objective function, model.constraints contains the values of the slack variables, and the objects x and y have the optimal values of the decision variables. model.variables() returns a list with the decision variables:

As you can see, this list contains the exact objects that are created with the constructor of LpVariable .

The results are approximately the same as the ones you got with SciPy.
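Here’s a self-contained sketch of the solve-and-inspect step for the small model. It assumes the CBC binary that PuLP bundles for common platforms is available:

```python
from pulp import LpMaximize, LpProblem, LpStatus, LpVariable, value

model = LpProblem(name="small-problem", sense=LpMaximize)
x = LpVariable(name="x", lowBound=0)
y = LpVariable(name="y", lowBound=0)
model += (2 * x + y <= 20, "red_constraint")
model += (-4 * x + 5 * y <= 10, "blue_constraint")
model += (-x + 2 * y >= -2, "yellow_constraint")
model += (-x + 5 * y == 15, "green_constraint")
model += x + 2 * y

# Solve with the default solver (CBC); the return value is a status code
status = model.solve()

print(f"status: {status}, {LpStatus[status]}")  # 1 maps to "Optimal"
print(f"objective: {value(model.objective)}")
for var in model.variables():
    print(f"{var.name}: {var.value()}")
for name, constraint in model.constraints.items():
    print(f"{name}: {constraint.value()}")
```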

Note: Be careful with the method .solve() —it changes the state of the objects x and y !

You can see which solver was used by checking the .solver attribute of the model:

The output informs you that the solver is CBC. You didn’t specify a solver, so PuLP called the default one.

If you want to run a different solver, then you can specify it as an argument of .solve(). For example, if you want to use GLPK and already have it installed, then you can pass solver=GLPK(msg=False) to .solve(). Keep in mind that you’ll also need to import it, for example with from pulp import GLPK.

Now that you have GLPK imported, you can use it inside .solve() :

The msg parameter is used to display information from the solver. msg=False disables showing this information. If you want to include the information, then just omit msg or set msg=True .
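GLPK has to be installed separately, so a defensive sketch can check whether PuLP finds it before solving. The fallback to CBC is an addition for illustration; PuLP doesn’t switch solvers on its own:

```python
from pulp import GLPK, PULP_CBC_CMD, LpMaximize, LpProblem, LpStatus, LpVariable

model = LpProblem(name="small-problem", sense=LpMaximize)
x = LpVariable(name="x", lowBound=0)
y = LpVariable(name="y", lowBound=0)
model += (2 * x + y <= 20, "red_constraint")
model += (-4 * x + 5 * y <= 10, "blue_constraint")
model += (-x + 2 * y >= -2, "yellow_constraint")
model += (-x + 5 * y == 15, "green_constraint")
model += x + 2 * y

# Prefer GLPK when its glpsol executable can be found; otherwise use CBC
solver = GLPK(msg=False)
if not solver.available():
    solver = PULP_CBC_CMD(msg=False)

status = model.solve(solver=solver)
print(type(model.solver).__name__, LpStatus[status], x.value(), y.value())
```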

Your model is defined and solved, so you can inspect the results the same way you did in the previous case:

You got practically the same result with GLPK as you did with SciPy and CBC.

Let’s peek and see which solver was used this time:

As you specified above with the statement model.solve(solver=GLPK(msg=False)), the solver is GLPK.

You can also use PuLP to solve mixed-integer linear programming problems. To define an integer or binary variable, just pass cat="Integer" or cat="Binary" to LpVariable . Everything else remains the same:

In this example, you have one integer variable and get different results from before:

Now x is an integer, as specified in the model. (Technically it holds a float value with zero after the decimal point.) This fact changes the whole solution. Let’s show this on the graph:

As you can see, the optimal solution is the rightmost green point on the gray background. This is the feasible solution with the largest values of both x and y , giving it the maximal objective function value.
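Here is a sketch of the mixed-integer variant. The only modeling change from the continuous version is cat="Integer" on x. The solver log is silenced with PULP_CBC_CMD(msg=False) to keep the printout short:

```python
from pulp import PULP_CBC_CMD, LpMaximize, LpProblem, LpStatus, LpVariable, value

model = LpProblem(name="small-problem", sense=LpMaximize)
x = LpVariable(name="x", lowBound=0, cat="Integer")  # x must be an integer
y = LpVariable(name="y", lowBound=0)                 # y stays continuous

model += (2 * x + y <= 20, "red_constraint")
model += (-4 * x + 5 * y <= 10, "blue_constraint")
model += (-x + 2 * y >= -2, "yellow_constraint")
model += (-x + 5 * y == 15, "green_constraint")
model += x + 2 * y

status = model.solve(PULP_CBC_CMD(msg=False))
print(LpStatus[status], x.value(), y.value(), value(model.objective))
```

The result is x = 7, y = 4.4, and z = 15.8, the rightmost green point described above.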

GLPK is capable of solving such problems as well.

Now you can use PuLP to solve the resource allocation problem from above:

The approach for defining and solving the problem is the same as in the previous example:

In this case, you use the dictionary x to store all decision variables. This approach is convenient because dictionaries can store the names or indices of decision variables as keys and the corresponding LpVariable objects as values. Lists or tuples of LpVariable instances can be useful as well.

The code above produces the following result:

As you can see, the solution is consistent with the one obtained using SciPy. The most profitable solution is to produce 5.0 units of the first product and 45.0 units of the third product per day.
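Here is a compact sketch of the same model. It builds the dictionary x with a comprehension and the objective with lpSum(). The variable names and the profit dictionary are illustrative choices:

```python
from pulp import PULP_CBC_CMD, LpMaximize, LpProblem, LpStatus, LpVariable, lpSum, value

model = LpProblem(name="resource-allocation", sense=LpMaximize)

# One continuous, nonnegative variable per product, keyed by product number
x = {i: LpVariable(name=f"x{i}", lowBound=0) for i in range(1, 5)}

profit = {1: 20, 2: 12, 3: 40, 4: 25}
model += lpSum(profit[i] * x[i] for i in x)  # objective

model += (lpSum(x.values()) <= 50, "manpower")
model += (3 * x[1] + 2 * x[2] + x[3] <= 100, "material_a")
model += (x[2] + 2 * x[3] + 3 * x[4] <= 90, "material_b")

status = model.solve(PULP_CBC_CMD(msg=False))
print(LpStatus[status], value(model.objective))
for i, var in x.items():
    print(f"x{i} = {var.value()}")
```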

Let’s make this problem more complicated and interesting. Say the factory can’t produce the first and third products in parallel due to a machinery issue. What’s the most profitable solution in this case?

Now you have another logical constraint: if x₁ is positive, then x₃ must be zero and vice versa. This is where binary decision variables are very useful. You’ll use two binary decision variables, y₁ and y₃, that indicate whether the first or third products are produced at all:

The code is very similar to the previous example. Here are the differences:

- The binary decision variables y[1] and y[3] are held in the dictionary y.

- M is a sufficiently large number, often called a big M. The value 100 is large enough in this case because the manpower constraint already limits total production to 50 units per day.

- The constraint x[1] ≤ M·y[1] says that if y[1] is zero, then x[1] must be zero, else it can be any non-negative number up to M.

- The constraint x[3] ≤ M·y[3] says that if y[3] is zero, then x[3] must be zero, else it can be any non-negative number up to M.

- The constraint y[1] + y[3] ≤ 1 says that either y[1] or y[3] is zero (or both are), so either x[1] or x[3] must be zero as well.
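Here’s a sketch of the complete either-or model. The constraint names x1_switch, x3_switch, and x1_or_x3 are illustrative. The last three constraints implement the big-M rules listed above:

```python
from pulp import PULP_CBC_CMD, LpMaximize, LpProblem, LpStatus, LpVariable, lpSum, value

model = LpProblem(name="resource-allocation-either-or", sense=LpMaximize)

x = {i: LpVariable(name=f"x{i}", lowBound=0) for i in range(1, 5)}
# y[i] == 1 allows product i to be produced; y[i] == 0 forces x[i] to zero
y = {i: LpVariable(name=f"y{i}", cat="Binary") for i in (1, 3)}

model += 20 * x[1] + 12 * x[2] + 40 * x[3] + 25 * x[4]  # objective

model += (lpSum(x.values()) <= 50, "manpower")
model += (3 * x[1] + 2 * x[2] + x[3] <= 100, "material_a")
model += (x[2] + 2 * x[3] + 3 * x[4] <= 90, "material_b")

M = 100  # big M: manpower already keeps every x[i] at or below 50
model += (x[1] <= M * y[1], "x1_switch")
model += (x[3] <= M * y[3], "x3_switch")
model += (y[1] + y[3] <= 1, "x1_or_x3")

status = model.solve(PULP_CBC_CMD(msg=False))
print(LpStatus[status], value(model.objective))
print({f"x{i}": var.value() for i, var in x.items()})
```

CBC reports a profit of 1800 with x₃ = 45 and x₁ = 0, matching the conclusion below.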

Here’s the solution:

It turns out that the optimal approach is to exclude the first product and to produce only the third one.

Linear programming and mixed-integer linear programming are very important topics. If you want to learn more about them—and there’s much more to learn than what you saw here—then you can find plenty of resources. Here are a few to get started with:

- Wikipedia Linear Programming Article

- Wikipedia Integer Programming Article

- MIT Introduction to Mathematical Programming Course

- Brilliant.org Linear Programming Article

- CalcWorkshop What Is Linear Programming?

- BYJU’S Linear Programming Article

Gurobi Optimization is a company that offers a very fast commercial solver with a Python API. It also provides valuable resources on linear programming and mixed-integer linear programming, including the following:

- Linear Programming (LP) – A Primer on the Basics

- Mixed-Integer Programming (MIP) – A Primer on the Basics

- Choosing a Math Programming Solver

If you’re in the mood to learn optimization theory, then there are plenty of math books out there. Here are a few popular choices:

- Linear Programming: Foundations and Extensions

- Convex Optimization

- Model Building in Mathematical Programming

- Engineering Optimization: Theory and Practice

This is just a part of what’s available. Linear programming and mixed-integer linear programming are popular and widely used techniques, so you can find countless resources to help deepen your understanding.

Just like there are many resources to help you learn linear programming and mixed-integer linear programming, there’s also a wide range of solvers that have Python wrappers available. Here’s a partial list:

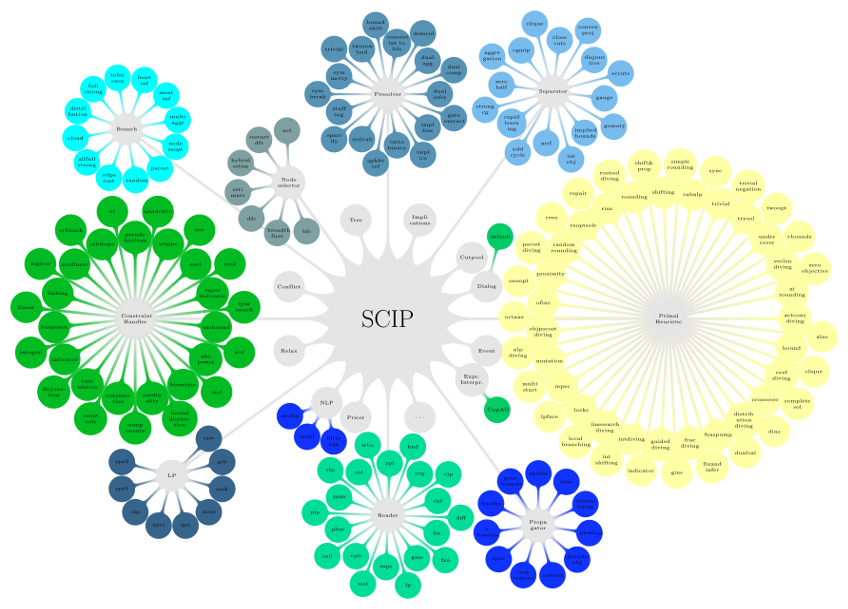

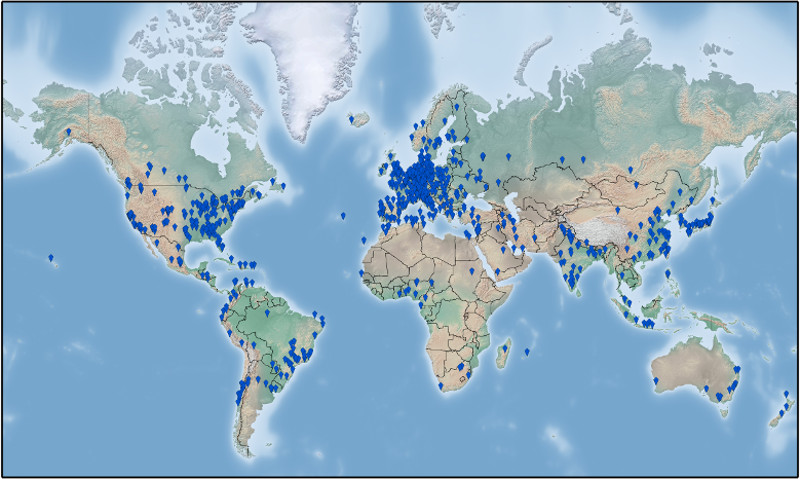

- SCIP with PySCIPOpt

- Gurobi Optimizer

Some of these libraries, like Gurobi, include their own Python wrappers. Others use external wrappers. For example, you saw that you can access CBC and GLPK with PuLP.

You now know what linear programming is and how to use Python to solve linear programming problems. You also learned that Python linear programming libraries are just wrappers around native solvers. When the solver finishes its job, the wrapper returns the solution status, the decision variable values, the slack variables, the objective function, and so on.

In this tutorial, you learned how to:

- Define a model that represents your problem

- Create a Python program for optimization

- Run the optimization program to find the solution to the problem

- Retrieve the result of optimization

You used SciPy with its own solver as well as PuLP with CBC and GLPK, but you also learned that there are many other linear programming solvers and Python wrappers. You’re now ready to dive into the world of linear programming!


About Mirko Stojiljković

Mirko has a Ph.D. in Mechanical Engineering and works as a university professor. He is a Pythonista who applies hybrid optimization and machine learning methods to support decision making in the energy sector.


Linear programming: minimize a linear objective function subject to linear equality and inequality constraints.

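Linear programming solves problems of the following form:

\[\min_x \ c^T x \quad \text{such that} \quad A_{ub} x \leq b_{ub}, \quad A_{eq} x = b_{eq}, \quad l \leq x \leq u\]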

where \(x\) is a vector of decision variables; \(c\) , \(b_{ub}\) , \(b_{eq}\) , \(l\) , and \(u\) are vectors; and \(A_{ub}\) and \(A_{eq}\) are matrices.

Alternatively, that’s:

minimize c @ x

such that
    A_ub @ x <= b_ub
    A_eq @ x == b_eq
    lb <= x <= ub

Note that by default lb = 0 and ub = None. Other bounds can be specified with bounds.

c: The coefficients of the linear objective function to be minimized.

A_ub: The inequality constraint matrix. Each row of A_ub specifies the coefficients of a linear inequality constraint on x.

b_ub: The inequality constraint vector. Each element represents an upper bound on the corresponding value of A_ub @ x.

A_eq: The equality constraint matrix. Each row of A_eq specifies the coefficients of a linear equality constraint on x.

b_eq: The equality constraint vector. Each element of A_eq @ x must equal the corresponding element of b_eq.

bounds: A sequence of (min, max) pairs for each element in x, defining the minimum and maximum values of that decision variable. If a single tuple (min, max) is provided, then min and max will serve as bounds for all decision variables. Use None to indicate that there is no bound. For instance, the default bound (0, None) means that all decision variables are non-negative, and the pair (None, None) means no bounds at all, i.e., all variables are allowed to take any real value.
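To see why the default bounds matter, here's a small sketch (a made-up one-variable problem, not from the reference) that solves the same model twice: once with the default (0, None) and once with (None, None).

```python
from scipy.optimize import linprog

# Minimize x subject to -x <= 5, that is, x >= -5
c = [1]
A_ub = [[-1]]
b_ub = [5]

# Default bounds (0, None): x is non-negative, so the minimum sits at x = 0
res_default = linprog(c, A_ub=A_ub, b_ub=b_ub)
# A single (None, None) tuple frees every variable, so x >= -5 becomes binding
res_free = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=(None, None))

print(res_default.x, res_free.x)
```

Forgetting to loosen the default bounds is a common reason a model silently returns a non-negative answer when a negative one was expected.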

method: The algorithm used to solve the standard form problem. ‘highs’ (default), ‘highs-ds’, ‘highs-ipm’, ‘interior-point’ (legacy), ‘revised simplex’ (legacy), and ‘simplex’ (legacy) are supported. The legacy methods are deprecated and will be removed in SciPy 1.11.0.

callback: If a callback function is provided, it will be called at least once per iteration of the algorithm. The callback function must accept a single scipy.optimize.OptimizeResult consisting of the following fields:

x: The current solution vector.

fun: The current value of the objective function c @ x.

success: True when the algorithm has completed successfully.

slack: The (nominally positive) values of the slack, b_ub - A_ub @ x.

con: The (nominally zero) residuals of the equality constraints, b_eq - A_eq @ x.

phase: The phase of the algorithm being executed.

status: An integer representing the status of the algorithm.

0 : Optimization proceeding nominally.

1 : Iteration limit reached.

2 : Problem appears to be infeasible.

3 : Problem appears to be unbounded.

4 : Numerical difficulties encountered.

nit: The current iteration number.

message: A string descriptor of the algorithm status.

Callback functions are not currently supported by the HiGHS methods.

options: A dictionary of solver options. All methods accept the following options:

maxiter: Maximum number of iterations to perform. Default: see method-specific documentation.

disp: Set to True to print convergence messages. Default: False.

presolve: Set to False to disable automatic presolve. Default: True.

All methods except the HiGHS solvers also accept:

tol: A tolerance which determines when a residual is “close enough” to zero to be considered exactly zero.

autoscale: Set to True to automatically perform equilibration. Consider using this option if the numerical values in the constraints are separated by several orders of magnitude. Default: False.

rr: Set to False to disable automatic redundancy removal. Default: True.

rr_method: Method used to identify and remove redundant rows from the equality constraint matrix after presolve. For problems with dense input, the available methods for redundancy removal are:

“SVD”: Repeatedly performs singular value decomposition on the matrix, detecting redundant rows based on nonzeros in the left singular vectors that correspond with zero singular values. May be fast when the matrix is nearly full rank.

“pivot”: Uses the algorithm presented in [5] to identify redundant rows.

“ID”: Uses a randomized interpolative decomposition. Identifies columns of the matrix transpose not used in a full-rank interpolative decomposition of the matrix.

None: Uses “svd” if the matrix is nearly full rank, that is, the difference between the matrix rank and the number of rows is less than five. If not, uses “pivot”. The behavior of this default is subject to change without prior notice.

Default: None. For problems with sparse input, this option is ignored, and the pivot-based algorithm presented in [5] is used.

For method-specific options, see show_options('linprog').

x0: Guess values of the decision variables, which will be refined by the optimization algorithm. This argument is currently used only by the ‘revised simplex’ method, and can only be used if x0 represents a basic feasible solution.

integrality: Indicates the type of integrality constraint on each decision variable.

0 : Continuous variable; no integrality constraint.

1 : Integer variable; decision variable must be an integer within bounds .

2 : Semi-continuous variable; decision variable must be within bounds or take value 0 .

3 : Semi-integer variable; decision variable must be an integer within bounds or take value 0 .

By default, all variables are continuous.

For mixed integrality constraints, supply an array of shape c.shape. To infer a constraint on each decision variable from shorter inputs, the argument will be broadcast to c.shape using np.broadcast_to.

This argument is currently used only by the 'highs' method and ignored otherwise.
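A tiny illustration of the difference integrality makes (a made-up problem, assuming SciPy 1.9 or later with the default HiGHS method): the continuous relaxation can split a unit across variables, while the integer version cannot. Passing the scalar 1 relies on the broadcasting behavior described above.

```python
import numpy as np
from scipy.optimize import linprog

# Maximize x0 + x1 subject to 2*x0 + 2*x1 <= 3 (linprog minimizes, so negate)
c = np.array([-1, -1])
A_ub = [[2, 2]]
b_ub = [3]

relaxed = linprog(c, A_ub=A_ub, b_ub=b_ub, method="highs")
# The scalar 1 is broadcast to c.shape: both variables must be integers
integer = linprog(c, A_ub=A_ub, b_ub=b_ub, integrality=1, method="highs")

print(-relaxed.fun, -integer.fun)
```

The relaxed optimum is 1.5, but no pair of non-negative integers can sum to more than 1 under this constraint, so the integer optimum is 1.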

res: A scipy.optimize.OptimizeResult consisting of the fields below. Note that the return types of the fields may depend on whether the optimization was successful; therefore, it is recommended to check OptimizeResult.status before relying on the other fields:

x: The values of the decision variables that minimize the objective function while satisfying the constraints.

fun: The optimal value of the objective function c @ x.

slack: The (nominally positive) values of the slack variables, b_ub - A_ub @ x.

con: The (nominally zero) residuals of the equality constraints, b_eq - A_eq @ x.

success: True when the algorithm succeeds in finding an optimal solution.

status: An integer representing the exit status of the algorithm.

0 : Optimization terminated successfully.

1 : Iteration limit reached.

2 : Problem appears to be infeasible.

3 : Problem appears to be unbounded.

4 : Numerical difficulties encountered.

nit: The total number of iterations performed in all phases.

message: A string descriptor of the exit status of the algorithm.
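Following the advice to check the status before trusting the other fields, here's a minimal sketch with a deliberately infeasible problem (made up for illustration): the default bound forces x ≥ 0 while the constraint demands x ≤ -1.

```python
from scipy.optimize import linprog

# Infeasible on purpose: x >= 0 by default, but the constraint requires x <= -1
res = linprog(c=[1], A_ub=[[1]], b_ub=[-1])

if res.status == 0:
    print("optimal x:", res.x)
else:
    # res.x may not hold a usable solution here, so report the status instead
    print(f"no optimal solution (status {res.status}): {res.message}")
```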

Additional options accepted by the solvers.

This section describes the available solvers that can be selected by the ‘method’ parameter.

‘highs-ds’ and ‘highs-ipm’ are interfaces to the HiGHS simplex and interior-point method solvers [13], respectively. ‘highs’ (default) chooses between the two automatically. These are the fastest linear programming solvers in SciPy, especially for large, sparse problems; which of these two is faster is problem-dependent. The other solvers (‘interior-point’, ‘revised simplex’, and ‘simplex’) are legacy methods and will be removed in SciPy 1.11.0.

Method highs-ds is a wrapper of the C++ high performance dual revised simplex implementation (HSOL) [13], [14]. Method highs-ipm is a wrapper of a C++ implementation of an interior-point method (IPM) [13]; it features a crossover routine, so it is as accurate as a simplex solver. Method highs chooses between the two automatically. For new code involving linprog, we recommend explicitly choosing one of these three method values.
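Selecting a HiGHS method explicitly is just a matter of the method argument. This sketch (with a small made-up test problem) solves the same model with all three values; because highs-ipm runs a crossover, all three should agree on the optimal objective here.

```python
from scipy.optimize import linprog

# Small test problem: minimize -x0 + 4*x1 with x0 free and x1 >= -3
c = [-1, 4]
A_ub = [[-3, 1], [1, 2]]
b_ub = [6, 4]
bounds = [(None, None), (-3, None)]

results = {}
for method in ("highs", "highs-ds", "highs-ipm"):
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=bounds, method=method)
    results[method] = res.fun
    print(f"{method:<10} status={res.status} fun={res.fun:.6f}")
```

On a problem this small the timing difference is negligible; the choice starts to matter on large, sparse models, where which method wins is problem-dependent.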

Added in version 1.6.0.

Method interior-point uses the primal-dual path following algorithm as outlined in [4]. This algorithm supports sparse constraint matrices and is typically faster than the simplex methods, especially for large, sparse problems. Note, however, that the solution returned may be slightly less accurate than those of the simplex methods and will not, in general, correspond with a vertex of the polytope defined by the constraints.

Added in version 1.0.0.

Method revised simplex uses the revised simplex method as described in [9], except that a factorization [11] of the basis matrix, rather than its inverse, is efficiently maintained and used to solve the linear systems at each iteration of the algorithm.

Added in version 1.3.0.

Method simplex uses a traditional, full-tableau implementation of Dantzig’s simplex algorithm [1], [2] (not the Nelder-Mead simplex). This algorithm is included for backwards compatibility and educational purposes.

Added in version 0.15.0.

Before applying interior-point , revised simplex , or simplex , a presolve procedure based on [8] attempts to identify trivial infeasibilities, trivial unboundedness, and potential problem simplifications. Specifically, it checks for:

rows of zeros in A_eq or A_ub , representing trivial constraints;

columns of zeros in A_eq and A_ub , representing unconstrained variables;

column singletons in A_eq , representing fixed variables; and

column singletons in A_ub , representing simple bounds.

If presolve reveals that the problem is unbounded (e.g. an unconstrained and unbounded variable has negative cost) or infeasible (e.g., a row of zeros in A_eq corresponds with a nonzero in b_eq ), the solver terminates with the appropriate status code. Note that presolve terminates as soon as any sign of unboundedness is detected; consequently, a problem may be reported as unbounded when in reality the problem is infeasible (but infeasibility has not been detected yet). Therefore, if it is important to know whether the problem is actually infeasible, solve the problem again with option presolve=False .

If neither infeasibility nor unboundedness is detected in a single pass of the presolve, bounds are tightened where possible and fixed variables are removed from the problem. Then, linearly dependent rows of the A_eq matrix are removed (unless they represent an infeasibility) to avoid numerical difficulties in the primary solve routine. Note that rows that are nearly linearly dependent (within a prescribed tolerance) may also be removed, which can change the optimal solution in rare cases. If this is a concern, eliminate redundancy from your problem formulation and run with option rr=False or presolve=False.

Several potential improvements can be made here: additional presolve checks outlined in [8] should be implemented, the presolve routine should be run multiple times (until no further simplifications can be made), and more of the efficiency improvements from [5] should be implemented in the redundancy removal routines.

After presolve, the problem is transformed to standard form by converting the (tightened) simple bounds to upper bound constraints, introducing non-negative slack variables for inequality constraints, and expressing unbounded variables as the difference between two non-negative variables. Optionally, the problem is automatically scaled via equilibration [12] . The selected algorithm solves the standard form problem, and a postprocessing routine converts the result to a solution to the original problem.
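The transformation to standard form can be sketched by hand. This is an illustrative reconstruction, not SciPy's internal code: it shifts a finite lower bound to zero, splits a free variable into the difference of two non-negative variables, and adds one non-negative slack per inequality, then checks that solving the equality-form problem recovers the original solution.

```python
import numpy as np
from scipy.optimize import linprog

# Original problem: minimize -x0 + 4*x1
#   subject to -3*x0 + x1 <= 6,  x0 + 2*x1 <= 4,  x0 free,  x1 >= -3
c = np.array([-1.0, 4.0])
A_ub = np.array([[-3.0, 1.0], [1.0, 2.0]])
b_ub = np.array([6.0, 4.0])

# Step 1: shift x1 = w - 3 so that w >= 0; the constant part moves into b
shift = np.array([0.0, -3.0])            # x0 has no lower bound, so no shift
b_shifted = b_ub - A_ub @ shift
const = c @ shift                        # objective offset caused by the shift

# Step 2: write the free x0 as u - v with u, v >= 0
# Step 3: add a non-negative slack per inequality to make it an equality
# Standard-form variables: z = [u, v, w, s1, s2], all >= 0
A_std = np.hstack([A_ub[:, [0]], -A_ub[:, [0]], A_ub[:, [1]], np.eye(2)])
c_std = np.concatenate([[c[0], -c[0], c[1]], np.zeros(2)])

res = linprog(c_std, A_eq=A_std, b_eq=b_shifted, bounds=(0, None))
u, v, w, s1, s2 = res.x
x0, x1 = u - v, w + shift[1]             # map back to the original variables
print(f"x0={x0:.1f}, x1={x1:.1f}, objective={res.fun + const:.1f}, "
      f"slacks={s1:.1f}, {s2:.1f}")
```

Solving the standard form should recover x0 = 10, x1 = -3, and an objective of -22, with a slack of 39 on the first inequality.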

[1] Dantzig, George B. Linear Programming and Extensions. Rand Corporation Research Study, Princeton University Press, Princeton, NJ, 1963.

[2] Hillier, F.S. and Lieberman, G.J. “Introduction to Mathematical Programming.” McGraw-Hill, 1995, Chapter 4.

[3] Bland, Robert G. “New finite pivoting rules for the simplex method.” Mathematics of Operations Research 2 (1977): 103-107.

[4] Andersen, Erling D., and Knud D. Andersen. “The MOSEK interior point optimizer for linear programming: an implementation of the homogeneous algorithm.” High Performance Optimization. Springer US, 2000: 197-232.

[5] Andersen, Erling D. “Finding all linearly dependent rows in large-scale linear programming.” Optimization Methods and Software 6.3 (1995): 219-227.

[6] Freund, Robert M. “Primal-Dual Interior-Point Methods for Linear Programming based on Newton’s Method.” Unpublished course notes, March 2004. Available 2/25/2017 at https://ocw.mit.edu/courses/sloan-school-of-management/15-084j-nonlinear-programming-spring-2004/lecture-notes/lec14_int_pt_mthd.pdf

[7] Fourer, Robert. “Solving Linear Programs by Interior-Point Methods.” Unpublished course notes, August 26, 2005. Available 2/25/2017 at http://www.4er.org/CourseNotes/Book%20B/B-III.pdf

[8] Andersen, Erling D., and Knud D. Andersen. “Presolving in linear programming.” Mathematical Programming 71.2 (1995): 221-245.

[9] Bertsimas, Dimitris, and J. Tsitsiklis. “Introduction to linear programming.” Athena Scientific 1 (1997): 997.

[10] Andersen, Erling D., et al. Implementation of interior point methods for large scale linear programming. HEC/Universite de Geneve, 1996.

[11] Bartels, Richard H. “A stabilization of the simplex method.” Numerische Mathematik 16.5 (1971): 414-434.

[12] Tomlin, J. A. “On scaling linear programming problems.” Mathematical Programming Study 4 (1975): 146-166.

[13] Huangfu, Q., Galabova, I., Feldmeier, M., and Hall, J. A. J. “HiGHS - high performance software for linear optimization.” https://highs.dev/

[14] Huangfu, Q. and Hall, J. A. J. “Parallelizing the dual revised simplex method.” Mathematical Programming Computation 10 (1) (2018): 119-142. DOI: 10.1007/s12532-017-0130-5

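Consider the following problem:

\[\begin{aligned} \min_{x_0, x_1} \quad & -x_0 + 4x_1 \\ \text{such that} \quad & -3x_0 + x_1 \leq 6, \\ & -x_0 - 2x_1 \geq -4, \\ & x_1 \geq -3. \end{aligned}\]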

The problem is not presented in the form accepted by linprog. This is easily remedied by converting the “greater than” inequality constraint to a “less than” inequality constraint by multiplying both sides by a factor of \(-1\). Note also that the last constraint is really the simple bound \(-3 \leq x_1 \leq \infty\). Finally, since there are no bounds on \(x_0\), we must explicitly specify the bounds \(-\infty \leq x_0 \leq \infty\), as the default is for variables to be non-negative. After collecting coefficients into arrays and tuples, the input for this problem is:
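A sketch of that input and the solve call, assuming the problem minimizes -x0 + 4x1 subject to -3x0 + x1 ≤ 6, x0 + 2x1 ≤ 4 (the sign-flipped "greater than" row), x0 free, and x1 ≥ -3, with SciPy's default HiGHS method:

```python
from scipy.optimize import linprog

# Objective: minimize -x0 + 4*x1
c = [-1, 4]
# "<=" rows; the ">=" constraint was multiplied by -1
A = [[-3, 1], [1, 2]]
b = [6, 4]
# x0 is unbounded; x1 has the simple bound -3 <= x1
x0_bounds = (None, None)
x1_bounds = (-3, None)

res = linprog(c, A_ub=A, b_ub=b, bounds=[x0_bounds, x1_bounds])
print(res.fun, res.x, res.message)
```

The optimum should be at x0 = 10, x1 = -3, with an objective value of -22.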

The marginals (AKA dual values / shadow prices / Lagrange multipliers) and residuals (slacks) are also available.

For example, because the marginal associated with the second inequality constraint is -1, we expect the optimal value of the objective function to decrease by eps if we add a small amount eps to the right hand side of the second inequality constraint:

Also, because the residual on the first inequality constraint is 39, we can decrease the right hand side of the first constraint by 39 without affecting the optimal solution.
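The marginals and residuals for the inequality constraints live in res.ineqlin when a HiGHS method is used. This sketch re-solves the same problem, reads both arrays, and then perturbs the second right-hand side by a small eps (0.05, an arbitrary choice) to check the marginal's prediction.

```python
from scipy.optimize import linprog

c = [-1, 4]
A = [[-3, 1], [1, 2]]
b = [6, 4]
bounds = [(None, None), (-3, None)]

res = linprog(c, A_ub=A, b_ub=b, bounds=bounds)  # default method is HiGHS
print("marginals:", res.ineqlin.marginals)  # sensitivity of fun to each b_ub entry
print("residuals:", res.ineqlin.residual)   # slacks, b_ub - A_ub @ x

# Loosen the second constraint by a small amount and re-solve
eps = 0.05
b_perturbed = [b[0], b[1] + eps]
res_eps = linprog(c, A_ub=A, b_ub=b_perturbed, bounds=bounds)
print("change in objective:", res_eps.fun - res.fun)  # about marginal * eps
```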

Integer Programming in Python

Freddy Boulton

Towards Data Science

Integer Programming (IP) problems are optimization problems where all of the variables are constrained to be integers. IP problems are useful mathematical models for how to best allocate one’s resources. Let’s say you’re organizing a marketing campaign for a political candidate and you’re deciding which constituents to send marketing materials to. You can send each constituent a catchy flyer, a detailed pamphlet explaining your agenda, or a bumper sticker (or a combination of the three). If you had a way to measure how likely someone is to vote for your candidate based on the marketing materials they received, how would you decide which materials to send while also being mindful to not exceed your supply?

Traditional optimization algorithms assume the variables can take on floating point values, but in our case, it isn’t reasonable to send someone half a bumper sticker or three quarters of a pamphlet. We’ll use a special Python package called cvxpy to solve our problem such that the solutions make sense.

I’ll show you how to use cvxpy to solve the political candidate problem, but I’ll start with a simpler problem called the knapsack problem to show you how the cvxpy syntax works.

The Knapsack Problem

Let’s pretend you’re going on a hike and you’re planning which objects you can take with you. Each object has a weight in pounds w_i and will give you u_i units of utility. You’d like to take all of them but your knapsack can only carry P pounds. Suppose you can either take an object or not. Your goal is to maximize your utility without exceeding the weight limit of your bag.

A cvxpy problem has three parts:

- Creating the variable: We will represent our choice mathematically with a vector of 1’s and 0’s. A 1 will mean we’ve selected that object and a 0 will mean we’ve left it home. We construct a variable that can only take 1’s and 0’s with the cvxpy.Bool object.

- Specifying the constraints: We only need to make sure that the total weight of the selected objects doesn’t exceed the weight limit P. We can compute the total weight of our objects with the dot product of the selection vector and the weights vector. Note that cvxpy overloads the * operator to perform matrix multiplication.

- Formulating the objective function: We want to find the selection that maximizes our utility. The utility of any given selection is the dot product of the selection vector and the utility vector.

Once we have a cost function and constraints, we pass them to a cvxpy Problem object. In this case, we’ve told cvxpy that we’re trying to maximize utility with cvxpy.Maximize. To solve the problem, we just have to run the solve method of our problem object. Afterwards, we can inspect the optimal value of our selection vector by looking at its value attribute.
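The article's cvxpy code isn't reproduced here, and the old cvxpy.Bool API it relies on may not match current cvxpy releases. As a swapped-in sketch of the same three parts, here's the knapsack model written with SciPy's milp (SciPy 1.9 or later assumed). The weights, utilities, and capacity are hypothetical, not the article's data.

```python
import numpy as np
from scipy.optimize import Bounds, LinearConstraint, milp

# Hypothetical data (the article's weights and utilities aren't shown)
weights = np.array([2, 3, 4, 5])   # pounds per object
utility = np.array([3, 4, 5, 6])   # utility per object
P = 5                              # knapsack capacity in pounds

# 1. Variable: one integer entry per object, bounded to [0, 1], i.e. take or leave
integrality = np.ones_like(weights)
# 2. Constraint: total weight, weights @ selection, may not exceed P
weight_limit = LinearConstraint(weights.reshape(1, -1), -np.inf, P)
# 3. Objective: maximize utility @ selection (milp minimizes, so negate)
res = milp(c=-utility, constraints=weight_limit,
           integrality=integrality, bounds=Bounds(0, 1))

selection = np.round(res.x).astype(int)
print(selection, utility @ selection)
```

With this toy data, taking the first two objects (total weight 5) beats any single heavier object.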

We’ve selected the first four items and the sixth one. This makes sense because these have a high ratio of utility to weight without weighing too much.

The Marketing Problem

Now that we’ve covered basic cvxpy syntax, we can solve the marketing optimization problem for our political candidate. Let’s say we had a model that takes in a constituent’s attributes and predicts the probability they will vote for our candidate for each combination of marketing materials we send them. I’m using fake data here but let’s pretend the model outputs the following probabilities:

There are eight total probabilities per constituent because there are eight total combinations of materials we could send an individual. Here’s what the entries of each 1 x 8 vector represent:

[1 flyer, 1 pamphlet, 1 bumper sticker, flyer and pamphlet, flyer and bumper sticker, pamphlet and bumper sticker, all three, none].

For example, the first constituent has a 0.0001 probability of voting for our candidate if he received a flyer or pamphlet, but a 0.3 probability of voting for our candidate if we sent him a bumper sticker.

Before we get into the cvxpy code, we’ll turn these probabilities into costs by taking the negative log. This makes the math work out nicer, and it has a nice interpretation: if the probability is close to 1, the negative log will be close to 0. This means that there is little cost in sending that particular combination of materials to that constituent, since we are nearly certain it will lead them to vote for our candidate. Conversely, if the probability is close to 0, the negative log grows large, so sending that combination is very costly.
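A quick numeric check of that interpretation, using hypothetical probabilities: costs rise as probabilities fall, and because the log turns products into sums, minimizing the total cost is the same as maximizing the product of the chosen probabilities.

```python
import numpy as np

# Hypothetical probabilities, from certain to very unlikely
probs = np.array([1.0, 0.9, 0.5, 0.3, 0.0001])
costs = -np.log(probs)
print(costs.round(3))

# Summing the costs equals -log of the product of the probabilities
assert np.isclose(costs.sum(), -np.log(probs.prod()))
```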

Finally, let’s say that we cannot send more than 150 flyers, 80 pamphlets, and 25 bumper stickers.

Now that we’ve done all the set-up, we can get to the fun part:

1. Creating the variable: We’ll use a cvxpy.Bool object again because we can only make binary choices here. We’ll specify that it must be the same shape as our probability matrix:

2. Specifying the constraints: Our selection variable will only tell us which of the 8 choices we’ve made for each constituent, but it won’t tell us how many total materials we’ve decided to send to them. We need a way to turn our 1 x 8 selection vector into a 1 x 3 vector. We can do so by multiplying the selection vector by the following matrix:

If this part is a little confusing, work out the following example:

The fourth entry of our decision vector represents sending a flyer and a pamphlet to the constituent, and multiplying by transformer tells us just that! So we’ll tell cvxpy that multiplying our selection matrix by transformer can’t exceed our supply:
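The article's matrix isn't reproduced above, so here is a reconstruction in NumPy built from the ordering of the eight combinations listed earlier (one row per combination, columns counting flyers, pamphlets, and bumper stickers). The three-constituent selection matrix is hypothetical.

```python
import numpy as np

# Rows: [flyer, pamphlet, sticker, flyer+pamphlet, flyer+sticker,
#        pamphlet+sticker, all three, none]
# Columns: [flyers, pamphlets, bumper stickers]
transformer = np.array([
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
    [1, 1, 0],
    [1, 0, 1],
    [0, 1, 1],
    [1, 1, 1],
    [0, 0, 0],
])

# The fourth choice (flyer and pamphlet) maps to one flyer and one pamphlet
choice = np.array([0, 0, 0, 1, 0, 0, 0, 0])
print(choice @ transformer)

# For a whole 0/1 selection matrix (one row per constituent), summing the
# rows of selection @ transformer gives the total materials sent
selection = np.array([
    [0, 0, 0, 0, 0, 0, 0, 1],  # nothing
    [0, 0, 1, 0, 0, 0, 0, 0],  # a bumper sticker
    [0, 0, 0, 0, 0, 0, 1, 0],  # all three
])
totals = (selection @ transformer).sum(axis=0)
supply = np.array([150, 80, 25])
print(totals, np.all(totals <= supply))
```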

I’m summing over the rows with cvxpy.sum_entries to aggregate the total number of materials we’ve sent across all constituents.

We also need to ensure we’re making exactly one choice per constituent; otherwise, the solver can attain zero cost by not sending anyone anything.

3. Formulating the objective function: The total cost of our assignment will be the sum of the costs we’ve incurred for each constituent. We’ll use the cvxpy.mul_elemwise function to multiply our selection matrix with our cost matrix, which selects the cost for each constituent, and the cvxpy.sum_entries function to compute the total cost by adding up the individual costs.

The final step is to create the cvxpy.Problem and solve it.
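For readers without the article's cvxpy setup, here's a swapped-in sketch of the whole marketing model using SciPy's milp instead of cvxpy (SciPy 1.9 or later assumed). The probabilities, number of constituents, and supply limits are all hypothetical; the structure follows the steps above: binary selection matrix, one choice per constituent, supply limits via the transformer matrix, and a negative-log cost objective.

```python
import numpy as np
from scipy.optimize import Bounds, LinearConstraint, milp

rng = np.random.default_rng(0)
n = 20                                           # hypothetical number of constituents
probs = rng.uniform(0.01, 0.99, size=(n, 8))     # hypothetical model output
costs = -np.log(probs)

# Rows: [flyer, pamphlet, sticker, F+P, F+S, P+S, all three, none]
# Columns: [flyers, pamphlets, bumper stickers]
transformer = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0],
                        [1, 0, 1], [0, 1, 1], [1, 1, 1], [0, 0, 0]])
supply = np.array([10, 6, 3])                    # hypothetical supply limits

# The decision variable is the n x 8 selection matrix flattened row by row.
# Exactly one choice per constituent: each row of the selection sums to 1.
one_choice = LinearConstraint(np.kron(np.eye(n), np.ones((1, 8))), 1, 1)
# Materials used: sum over constituents of their row of selection @ transformer
within_supply = LinearConstraint(np.kron(np.ones((1, n)), transformer.T),
                                 -np.inf, supply)

res = milp(c=costs.ravel(), constraints=[one_choice, within_supply],
           integrality=np.ones(n * 8, dtype=int), bounds=Bounds(0, 1))
selection = np.round(res.x).astype(int).reshape(n, 8)
used = (selection @ transformer).sum(axis=0)
print("status:", res.status, "materials used:", used, "of", supply)
```

Because the "none" option is always available, this model is always feasible; the supply constraints only limit how many constituents can get the more persuasive combinations.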

That’s it! Below is a snapshot of what our final assignments look like. We decided not to send any materials to the first constituent. This makes sense because the probability of them voting for our candidate is 0.3 whether we send them a bumper sticker or nothing at all.

It also turns out that the optimal assignment exhausts our supply of pamphlets and bumper stickers but only uses 83 of the total 150 flyers. We should tell our candidate that her flyers aren’t as persuasive as she thinks.

Here is all the code packaged together:

Closing Remarks

I hope you’ve enjoyed learning about integer programming problems and how to solve them in Python. Believe it or not, we’ve covered about 80% of the cvxpy knowledge you need to go out and solve your own optimization problems. I encourage you to read the official documentation to learn about the remaining 20%. CVXPY can solve more than just IP problems; check out their tutorials page to see what other problems cvxpy can solve.

To install cvxpy, follow the directions on their website. I would also install cvxopt to make sure all the solvers that come packaged with cvxpy will work on your machine.

We’ve specified that cvxpy should use the GLPK_MI solver in the solve method. This is a special solver designed for IP problems. Before you solve your own problem, consult this table to see which prepackaged cvxpy solver is best suited to your problem.

Happy optimizing!

Written by Freddy Boulton

Data Scientist & Software Engineer


Implementation of an Integer Linear Programming Solver in Python

Introduction.

Integer linear programming problems are common in many domains. I recently found myself experimenting with formulating a problem as an integer programming problem, and I realized that I didn't really understand how the various solvers worked, so I set out to implement my own. This post is about the three pieces that need to be assembled to create one:

- Expression trees and rewrite rules to provide a convenient interface.

- Linear programming relaxation to get a non-integer solution (using scipy.optimize.linprog ).

- Branch-and-bound to refine the relaxed solution to an integer solution.

You can follow along at [cwpearson/csips](https://github.com/cwpearson/csips).

Problem Formulation

A linear programming problem is an optimization problem with the following form:

find \(\mathbf{x}\) to maximize $$ \mathbf{c}^T\mathbf{x} $$

subject to $$\begin{aligned} A\mathbf{x} &\leq \mathbf{b} \cr 0 &\leq \mathbf{x} \cr \end{aligned}$$

For integer linear programming (ILP), there is one additional constraint:

$$ \mathbf{x} \in \mathbb{Z} $$

In plain English, \(\mathbf{x}\) is a vector, where each entry represents a variable we want to find the optimal value for. \(\mathbf{c}\) is a vector with one weight for each entry of \(\mathbf{x}\). We're trying to maximize the dot product \(\mathbf{c}^T\mathbf{x}\); we're just summing up each entry of \(\mathbf{x}\) weighted by the corresponding entry of \(\mathbf{c}\). For example, if we seek to maximize the sum of the first two entries of \(\mathbf{x}\), the first two entries of \(\mathbf{c}\) will be \(1\) and the rest \(0\).

What about Minimizing instead of Maximizing? Just maximize \(-\mathbf{c}^T\mathbf{x}\) instead of maximizing \(\mathbf{c}^T\mathbf{x}\).

What if one of my variables needs to be negative? At first glance, it appears \(0 \leq \mathbf{x}\) will be a problem. However, we'll just construct \(A\) and \(\mathbf{b}\) so that all constraints are relative to \(-\mathbf{x}_i\) instead of \(\mathbf{x}_i\) for variable \(i\).

To specify our problem, we must specify \(A\), \(\mathbf{b}\), and \(\mathbf{c}^T\).

For simple problems it might be convenient enough to do this unassisted, for example (pdf) :

find \(x,y\) to maximize $$ 4x + 5y $$

subject to $$\begin{aligned} x + 4y &\leq 10 \cr 3x - 4y &\leq 6 \cr 0 &\leq x,y \end{aligned}$$

We have two variables \(x\) and \(y\), so our \(\mathbf{x}\) vector will have two entries, \(\mathbf{x}_0\) for \(x\) and \(\mathbf{x}_1\) for \(y\). Correspondingly, \(A\) will have two columns, one for each variable, and our \(\mathbf{c}\) will have two entries, one for each variable. We have two constraints, so our \(A\) will have two rows, one for each constraint.
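Written out with NumPy, the example's \(\mathbf{c}\), \(A\), and \(\mathbf{b}\) look like this. The small is_feasible helper (a hypothetical name, for illustration only) checks a candidate point against \(A\mathbf{x} \leq \mathbf{b}\) and \(0 \leq \mathbf{x}\).

```python
import numpy as np

# maximize 4x + 5y  subject to  x + 4y <= 10,  3x - 4y <= 6,  x, y >= 0
c = np.array([4, 5])          # one weight per variable, ordered [x, y]
A = np.array([[1, 4],         # one row per constraint,
              [3, -4]])       # one column per variable
b = np.array([10, 6])         # one right-hand side per constraint

def is_feasible(point, tol=1e-9):
    """Check A @ point <= b and point >= 0 for a candidate point."""
    p = np.asarray(point, dtype=float)
    return bool(np.all(A @ p <= b + tol) and np.all(p >= 0))

for point in [(4, 1.5), (2, 2), (3, 2)]:
    print(point, is_feasible(point), c @ np.array(point))
```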

How do we handle \(0 \leq x,y\)? We'll cover that later when we talk about scipy's linprog function to actually solve this.

User-friendly Interface

- Implemented in expr.py

If your problem is complicated, it can get clumsy to specify every constraint and objective in this form, since you'll need to massage all your constraints so that all the variables are on the left and are \(\leq\) a constant on the right. It would be best to have the computer do this for you, and present a more natural interface akin to the following:

To do this, we'll introduce some python classes to represent nodes in an expression tree of our constraints. Then we'll overload the * , + , - , == , <= , and >= operators on those classes to allow those nodes to be composed into a tree from a natural expression.

For example, an implementation of scaling a variable by an integer:

Using part of the constraints above:

which represents variable x[0] multiplied by integer 3. We can construct more complicated trees to support our expression grammar by extending these classes with additional operations (e.g. __le__, __add__, ...) and additional classes to represent summation expressions, less-or-equal equations, and so forth.
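The csips classes themselves aren't shown here, so the following is a hypothetical minimal version (the names Expr, Var, Mul, Sum, and Leq are my own, not csips's) that shows how overloading __rmul__, __add__, and __le__ turns a natural Python expression into a tree.

```python
class Expr:
    """Base class for expression-tree nodes (hypothetical, not csips's API)."""
    def __rmul__(self, k):          # handles `3 * expr`
        return Mul(k, self)

    def __add__(self, other):       # handles `expr + expr`
        return Sum(self, other)

    def __le__(self, rhs):          # handles `expr <= constant`
        return Leq(self, rhs)


class Var(Expr):
    def __init__(self, name):
        self.name = name

    def __repr__(self):
        return self.name


class Mul(Expr):
    def __init__(self, k, expr):
        self.k, self.expr = k, expr

    def __repr__(self):
        return f"({self.k} * {self.expr!r})"


class Sum(Expr):
    def __init__(self, left, right):
        self.left, self.right = left, right

    def __repr__(self):
        return f"({self.left!r} + {self.right!r})"


class Leq:
    def __init__(self, lhs, rhs):
        self.lhs, self.rhs = lhs, rhs

    def __repr__(self):
        return f"{self.lhs!r} <= {self.rhs!r}"


x = [Var("x0"), Var("x1")]
print(3 * x[0])                 # (3 * x0)
print(x[0] + 4 * x[1] <= 10)    # (x0 + (4 * x1)) <= 10
```

Because int doesn't know how to multiply by a Var, Python falls back to Var.__rmul__, which is what lets `3 * x[0]` build a Mul node.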

Conversion to Standard Form

- Implemented in csips.py

Once each constraint (and the objective) is represented as an expression tree, the challenge becomes converting it into standard form, i.e., all variables and scalar multiplications on the left-hand side (a row of \(A\)), and a single constant on the right-hand side (an entry of \(\mathbf{b}\)). We can use a few rewrite rules to transform our expression trees into what we want.

Distribution: $$ a \times (b + c) \rightarrow a \times b + a \times c $$

Fold (when \(a\) and \(b\) are constants): $$ a + b \rightarrow c, \quad c = a + b $$

Move left: $$ a \leq b + c \rightarrow a - b \leq c $$

Flip: $$ a \geq b \rightarrow -a \leq -b $$

By applying these rules, we can convert any of our expressions to one where a sum of scaled variables is on the left hand side of \(\leq\), and a single int is on the right hand side. Then it's a simple matter of generating an \(\mathbf{x}\) with one entry per unique variable, \(A\) from the scaling on those variables, and \(\mathbf{b}\) from the right-hand side.
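The following sketch reaches the same standard form by a different route than explicit rewrite rules: it computes the linear form (coefficients plus constant) of each side directly, which performs distribution and folding implicitly, and then applies "move left" and "flip". The tuple encoding of the tree and the function names are hypothetical, not csips's code.

```python
from collections import defaultdict


def linearize(node):
    """Return (coefficients, constant) for a linear expression tree.

    Hypothetical node encoding: ("var", name), ("const", k),
    ("mul", k, node), ("add", a, b), ("sub", a, b).
    """
    kind = node[0]
    if kind == "var":
        return {node[1]: 1}, 0
    if kind == "const":
        return {}, node[1]
    if kind == "mul":                      # distribution: k*(a+b) -> k*a + k*b
        coeffs, const = linearize(node[2])
        return {v: node[1] * c for v, c in coeffs.items()}, node[1] * const
    if kind in ("add", "sub"):             # fold like terms and constants
        sign = 1 if kind == "add" else -1
        (ca, ka), (cb, kb) = linearize(node[1]), linearize(node[2])
        out = defaultdict(int, ca)
        for v, c in cb.items():
            out[v] += sign * c
        return dict(out), ka + sign * kb
    raise ValueError(f"unknown node {kind!r}")


def to_standard_row(lhs, op, rhs):
    """Rewrite `lhs op rhs` (op is "<=" or ">=") as coeffs @ x <= bound."""
    cl, kl = linearize(lhs)
    cr, kr = linearize(rhs)
    # Move left: variables go to the left-hand side, constants to the right
    coeffs = dict(cl)
    for v, c in cr.items():
        coeffs[v] = coeffs.get(v, 0) - c
    bound = kr - kl
    if op == ">=":                         # flip: a >= b  ->  -a <= -b
        coeffs = {v: -c for v, c in coeffs.items()}
        bound = -bound
    return coeffs, bound


# 2 * (x + 3) >= y + 4  becomes  -2x + y <= 2
lhs = ("mul", 2, ("add", ("var", "x"), ("const", 3)))
rhs = ("add", ("var", "y"), ("const", 4))
print(to_standard_row(lhs, ">=", rhs))
```

Each resulting coefficient dictionary becomes a row of \(A\) (once variables are assigned column indices), and each bound becomes an entry of \(\mathbf{b}\).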

Linear Program Relaxation

The usual approach is to initially ignore the integer constraint (\(\mathbf{x} \in \mathbb{Z}\)), then go back and "fix" the non-integer parts of your solution. When you ignore the integer constraint, you're doing what's called "linear programming relaxation" (LP relaxation). Solving the LP relaxation will provide a (possibly non-integer) \(\mathbf{x}\).

We'll use scipy's linprog function to solve the LP relaxation.

Here's how linprog's arguments map to our formulation:

- c : this is \(-\mathbf{c}\), negated because linprog minimizes while our formulation maximizes

- A_ub : this is \(A\)

- b_ub : this is \(\mathbf{b}\)

linprog also allows us to specify bounds on \(\mathbf{x}\) in a convenient form.

- bounds : this allows us to provide bounds on \(\mathbf{x}\), e.g. providing the tuple (0, None) implements \(0 \leq \mathbf{x}\). This is not strictly necessary since we can directly express this constraint using A_ub and b_ub .

linprog also allows us to specify additional constraints in the form \(A\mathbf{x} = \mathbf{b}\). This is not strictly necessary, since for a variable \(i\) we could just say \(\mathbf{x}_i \leq c\) and \(-\mathbf{x}_i \leq -c\) in our original formulation to achieve \(\mathbf{x}_i = c\).

- A_eq : \(A\) in optional \(A\mathbf{x} = \mathbf{b}\)

- b_eq : \(\mathbf{b}\) in optional \(A\mathbf{x} = \mathbf{b}\)

linprog will dutifully report the optimal solution as \(x = 4\), \(y = 1.5\), and \(4x + 5y = 23.5\). Of course, \(y = 1.5\) is not an integer, so we'll use "branch and bound" to refine towards an integer solution.
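A minimal sketch of that LP relaxation call, assuming SciPy's default HiGHS method. The objective is negated because linprog minimizes, and the result is negated back to report the maximum.

```python
from scipy.optimize import linprog

# maximize 4x + 5y  ->  minimize -4x - 5y
c = [-4, -5]
A_ub = [[1, 4], [3, -4]]
b_ub = [10, 6]

res = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=(0, None))
x, y = res.x
print(f"x = {x:g}, y = {y:g}, objective = {-res.fun:g}")
```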

Branch and Bound

Branch and bound is a whole family of algorithms for solving discrete and combinatorial optimization problems. It represents the space of solutions in a tree, where the root node is all possible solutions. Each node branches into two child nodes, each of which represents a smaller subset of its parent's solution space; the two subsets do not overlap. As the tree is descended, the solution space will either shrink to 1 (a feasible solution) or 0 (no feasible solution). As we discover feasible solutions, we will keep track of the best one. At any point, we may be able to determine a bound on the best possible solution offered by a subset. If that bound is worse than the best solution so far, we can skip that entire subtree.

For our ILP problem, the algorithm looks like this:

- Initialize the best objective observed so far to the worst possible value (\(+\infty\) when minimizing, as linprog does) and push the original problem onto a stack

- Pop a subproblem off the stack and find its LP relaxation solution

- If the LP relaxation is infeasible, or its objective is worse than the best solution so far, discard the subproblem. The integer solution will be no better than the relaxation solution, so if the relaxation solution is worse, then the integer solution will be too.

- Else, if all \(\mathbf{x}\) entries are integers, this is the best integer solution so far: record it

- Else, branch to produce two child nodes: if \(\mathbf{x}_i = c\) is non-integer, push two subproblems onto the stack, one with the additional constraint \(\mathbf{x}_i \leq \lfloor c \rfloor\) and the other with \(\mathbf{x}_i \geq \lceil c \rceil\)

- Repeat from the second step until the stack is empty, then report the best solution found.
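The steps above can be sketched with linprog as a depth-first search. This is a minimal illustration under stated assumptions, not a production solver. It assumes a minimization problem whose relaxations are bounded. It adds each branching constraint by tightening that variable's entry in bounds rather than appending rows to A_ub. The function name and the test problem are hypothetical:

```python
import math

import numpy as np
from scipy.optimize import linprog


def branch_and_bound(c, A_ub, b_ub, bounds, tol=1e-6):
    """Minimize c @ x subject to A_ub @ x <= b_ub, the given bounds, and integer x.

    Each stack entry is a list of (low, high) bounds, one pair per variable;
    branching only tightens those bounds.
    """
    best_x, best_obj = None, math.inf        # incumbent objective starts at +infinity
    stack = [list(bounds)]                   # root node: the original problem
    while stack:
        node = stack.pop()
        res = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=node)
        # Prune if the relaxation was not solved (status 2 = infeasible; this sketch
        # also skips unbounded or failed solves) or cannot beat the incumbent.
        if res.status != 0 or res.fun >= best_obj - tol:
            continue
        frac = [i for i, v in enumerate(res.x) if abs(v - round(v)) > tol]
        if not frac:                          # all entries integral: new incumbent
            best_x, best_obj = np.round(res.x), res.fun
            continue
        i = frac[0]                           # branch on the first non-integer entry
        v = res.x[i]
        low, high = node[i]
        down, up = list(node), list(node)
        down[i] = (low, math.floor(v))        # child 1: x_i <= floor(v)
        up[i] = (math.ceil(v), high)          # child 2: x_i >= ceil(v)
        stack.extend([down, up])
    return best_x, best_obj


# Hypothetical problem: maximize 5x + 4y s.t. 6x + 4y <= 24, x + 2y <= 6, x, y >= 0
x, obj = branch_and_bound([-5, -4], [[6, 4], [1, 2]], [24, 6], [(0, None), (0, None)])
print(x, -obj)  # integer optimum: x = 4, y = 0, 5x + 4y = 20
```

Encoding \(\mathbf{x}_i \leq \lfloor c \rfloor\) and \(\mathbf{x}_i \geq \lceil c \rceil\) as bounds keeps A_ub unchanged across nodes. Adding rows to A_ub would be equivalent.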

Applying these steps to our example converges on a solution of \(x = 2\), \(y = 2\), and \(4x + 5y = 18\).

In 5.3, there are different ways to choose which non-integer variable to branch on. CSIPS branches either on the first one or on the most infeasible one, i.e. the variable whose fractional part is closest to 0.5.
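Both branching rules named above can be sketched in a few lines of NumPy. The function and the rule names "first" and "most_infeasible" are our own labels, and "most infeasible" is taken to mean the entry farthest from its nearest integer:

```python
import numpy as np

def pick_branch_variable(x, rule="first", tol=1e-6):
    """Return the index of a non-integer entry of x to branch on, or None."""
    x = np.asarray(x, dtype=float)
    frac = x - np.floor(x)                          # fractional parts in [0, 1)
    # An entry is non-integer if it is not within tol of an integer.
    candidates = np.flatnonzero((frac > tol) & (frac < 1 - tol))
    if candidates.size == 0:
        return None                                 # x is already integral
    if rule == "first":
        return int(candidates[0])
    if rule == "most_infeasible":
        # Distance to the nearest integer; the largest is closest to 0.5.
        dist = np.minimum(frac[candidates], 1 - frac[candidates])
        return int(candidates[np.argmax(dist)])
    raise ValueError(f"unknown rule: {rule!r}")

x = [2.0, 3.1, 4.45, 7.0]
print(pick_branch_variable(x, "first"))            # 1
print(pick_branch_variable(x, "most_infeasible"))  # 2
```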

There are more sophisticated methods for solving the integer problem, such as branch-and-cut or branch-and-price. Unfortunately, these are difficult to investigate using scipy's linprog, as none of its solver methods make the tableau form accessible.

Exact 2.1.0

pip install Exact

Released: Jun 5, 2024

Python bindings for Exact


License: GNU Affero General Public License v3

Author: Jo Devriendt

Requires: Python >=3.10

Classifiers

- OSI Approved :: GNU Affero General Public License v3

- Python :: 3

Project description

Exact solves decision and optimization problems formulated as integer linear programs. Under the hood, it converts integer variables to binary (0-1) variables and applies highly efficient propagation routines and strong cutting-planes / pseudo-Boolean conflict analysis.
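The text does not say which encoding Exact uses internally. As an illustration of the general idea only, one common way to replace a bounded integer variable \(x \in [l, u]\) by 0-1 variables is a binary (logarithmic) expansion \(x = l + \sum_k a_k b_k\), where \(b_k \in \{0, 1\}\). The last coefficient is trimmed so that no value above \(u\) is reachable. The function name is hypothetical:

```python
def binary_encoding(lower, upper):
    """Coefficients a_k such that x = lower + sum(a_k * b_k), b_k in {0, 1},
    reaches exactly the integers in [lower, upper].

    Powers of two are used until the last step, which is trimmed to the
    remaining span so the maximum reachable value is exactly `upper`.
    """
    span = upper - lower
    coefs, total, power = [], 0, 1
    while total < span:
        step = min(power, span - total)   # trim the final power of two
        coefs.append(step)
        total += step
        power *= 2
    return coefs

print(binary_encoding(0, 10))  # [1, 2, 4, 3]
```

Substituting the expansion into each linear constraint keeps it linear, now over 0-1 variables only.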

Exact is a fork of RoundingSat and improves upon its predecessor in reliability, performance and ease-of-use. In particular, Exact supports integer variables, reified linear constraints, multiplication constraints, and propagation and count inferences. The name "Exact" reflects that the answers are fully sound, as approximate and floating-point calculations only occur in heuristic parts of the algorithm. As such, Exact can soundly be used for verification and theorem proving, where its envisioned ability to emit machine-checkable certificates of optimality and unsatisfiability should prove useful.

- Native conflict analysis over binary linear constraints, constructing full-blown cutting planes proofs.

- Highly efficient watched propagation routines.

- Seamless use of arbitrary precision arithmetic when needed.

- Hybrid linear (top-down) and core-guided (bottom-up) optimization.

- Optional integration with the SoPlex LP solver.

- Core solver also compiles on macOS and Windows.

- Under development: Python interface with assumption solving and reuse of solver state (Linux only for now).

- Under development: generation of certificates of optimality and unsatisfiability that can be automatically verified by VeriPB.

Python interface

PyPI package

The easiest way to use Exact's Python interface is on an x86_64 machine running Windows or Linux. In that case, install this precompiled PyPI package, e.g., by running pip install exact.

Compile your own Python package

To use the Exact Python interface with binaries optimized for your machine (and the option to include SoPlex in the binary), compile it as a shared library and install it with your package manager. For example, on Linux systems, running pip install . in Exact's root directory should do the trick. On Windows, uncomment the build options below # FOR WINDOWS and comment out those below # FOR LINUX. Make sure the Boost libraries are installed (see Dependencies).

Documentation

The header file Exact.hpp contains the C++ methods exposed to Python via Pybind11 as well as their description. This is probably the best place to start to learn about Exact's Python interface.

Next, python_examples contains instructive examples. Of particular interest is the knapsack tutorial, which is fully commented, starts simple, and ends with some of Exact's advanced features.

Command line usage

Exact takes as command line input an integer linear program and outputs a(n optimal) solution or reports that none exists. Either pipe the program

or pass the file as a parameter

Use the flag --help to display a list of runtime parameters.

Exact supports six input formats (described in more detail in InputFormats.md):

- .opb pseudo-Boolean decision and optimization (equivalent to 0-1 integer linear programming)

- .wbo weighted pseudo-Boolean optimization (0-1 integer linear programming with weighted soft constraints)

- .cnf DIMACS Conjunctive Normal Form (CNF)

- .wcnf Weighted Conjunctive Normal Form (WCNF)

- .mps Mathematical Programming System (MPS) via the optional CoinUtils library

- .lp Linear Program (LP) via the optional CoinUtils library
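To give a feel for the .opb format, here is a minimal sketch that writes a small 0-1 knapsack instance as OPB text. It assumes the conventions of the pseudo-Boolean competition format:

- a "* #variable= N #constraint= M" header comment
- a "min:" objective
- ">=" constraints with signed integer coefficients
- each line ending in ";"

InputFormats.md is the authority on what Exact actually accepts. The function name is our own:

```python
def knapsack_to_opb(values, weights, capacity):
    """Return a 0-1 knapsack instance as OPB text.

    Maximizing total value is written as minimizing its negation, and the
    capacity constraint sum(w_i x_i) <= capacity as sum(-w_i x_i) >= -capacity.
    """
    n = len(values)

    def term(coef, i):
        return f"{coef:+d} x{i + 1}"          # e.g. "+3 x1" or "-4 x2"

    lines = [f"* #variable= {n} #constraint= 1"]
    lines.append("min: " + " ".join(term(-v, i) for i, v in enumerate(values)) + " ;")
    lines.append(" ".join(term(-w, i) for i, w in enumerate(weights)) + f" >= {-capacity} ;")
    return "\n".join(lines) + "\n"

print(knapsack_to_opb([3, 4, 5], [2, 3, 4], 6))
```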

Note that .mps and .lp allow rational (non-integer) variables, which Exact does not support. These formats also permit floating-point values, which may lead to tricky issues. Rewrite constraints with fractional values as integral ones by multiplying by the least common multiple of the denominators.
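The rescaling rule above can be sketched with Python's fractions module. The helper name is hypothetical. Floats are converted through str(), so 0.1 is read as the decimal 1/10 rather than its exact binary value, which sidesteps one of the floating-point pitfalls just mentioned:

```python
from fractions import Fraction
from math import lcm

def scale_to_integers(coefs, rhs):
    """Multiply a constraint sum(coefs[i] * x_i) <= rhs by the least common
    multiple of all denominators so every number becomes an integer.

    Returns (integer coefficients, integer right-hand side, multiplier).
    """
    fracs = [Fraction(str(c)) for c in coefs] + [Fraction(str(rhs))]
    m = lcm(*(f.denominator for f in fracs))
    scaled = [int(f * m) for f in fracs]
    return scaled[:-1], scaled[-1], m

# 0.5 x1 + (1/3) x2 <= 2.25  ->  multiply by lcm(2, 3, 4) = 12
print(scale_to_integers([0.5, Fraction(1, 3)], 2.25))  # ([6, 4], 27, 12)
```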

By default, Exact decides on the format based on the filename extension, but this can be overridden with the --format option.

Compilation from source

In the root directory of Exact:

Replace make with cmake --build . on Windows. For additional builds, create further build directories in the same way.

For installing system-wide or to the CMAKE_INSTALL_PREFIX root, use make install (on Linux).

Dependencies

- A recent C++20 compiler (GCC, Clang or MSVC should do)

- Boost library, minimal version 1.65. On a Debian/Ubuntu system, install it with sudo apt install libboost-dev. On Windows, follow the instructions on boost.org.

- Optionally: CoinUtils library to parse MPS and LP file formats. Use the CMake option -Dcoinutils=ON after installing the library.

- Optionally: SoPlex LP solver (see below).

SoPlex (on Linux)

Exact supports integration with the LP solver SoPlex to improve its search routine. To use it, check out SoPlex from its git repository as a submodule, compile it in a separate directory, and set the right CMake options when compiling Exact.

By default, the following commands in Exact's root directory should work with a freshly checked out repository:

The CMake options soplex_src and soplex_build let you point Exact to SoPlex in a different location.

Exact is licensed under the AGPLv3. If this would hinder your intended usage, please contact @JoD.

The current set of benchmarks used to assess performance is available here.

If you use Exact, please star and cite this repository and cite the RoundingSat origin paper (which focuses on cutting planes conflict analysis): [EN18] J. Elffers, J. Nordström. Divide and Conquer: Towards Faster Pseudo-Boolean Solving. IJCAI 2018

Please cite any of the following papers if they are relevant.

Integration with SoPlex: [DGN20] J. Devriendt, A. Gleixner, J. Nordström. Learn to Relax: Integrating 0-1 Integer Linear Programming with Pseudo-Boolean Conflict-Driven Search. CPAIOR 2020 / Constraints journal

Watched propagation: [D20] J. Devriendt. Watched Propagation for 0-1 Integer Linear Constraints. CP 2020

Core-guided optimization: [DGDNS21] J. Devriendt, S. Gocht, E. Demirović, J. Nordström, P. J. Stuckey. Cutting to the Core of Pseudo-Boolean Optimization: Combining Core-Guided Search with Cutting Planes Reasoning. AAAI 2021

Release history: Jun 5, 2024; Jun 4, 2024; Jul 19, 2023; Jun 26, 2023; Jun 13, 2023; Jun 5, 2023; May 29, 2023; Oct 1, 2022; Apr 20, 2022; Apr 19, 2022; Sep 20, 2021; Aug 24, 2021; Aug 23, 2021; Aug 18, 2021; Aug 17, 2021.

Download files

Built distributions (uploaded Jun 5, 2024):

- CPython 3.12, Windows x86-64

- CPython 3.12, manylinux (glibc 2.27+ / 2.28+), x86-64

- CPython 3.11, manylinux (glibc 2.27+ / 2.28+), x86-64

- CPython 3.10, manylinux (glibc 2.27+ / 2.28+), x86-64


Python solvers for mixed-integer nonlinear constrained optimization

I want to minimize a black box function $f(x)$ that takes an 8$\times$3 matrix of non-negative integers as input. Each row corresponds to a variable and each column to a time period, so $x_{ij}$ is the $i$th variable in the $j$th time period.

$f(x)$ is the activation function for a convolutional filter in the second convolutional layer of a convolutional neural network, so it is expected to be nonlinear and nonconvex. The input is subject to the constraints below, where $\text{sgn}(x)$ is the sign function as defined at Wikipedia.

$$
\begin{aligned}
\text{Objective:} \hspace{1cm} & \text{minimize} \hspace{0.2cm} f(x)\\
\text{Constraints:} \hspace{1cm} & \sum_{i=3}^{6}\sum_{j=1}^{3} x_{ij} = 10 & \\
& x_{3j} + x_{5j} \geq x_{1j} & \forall j = 1,2,3\\
& x_{4j} + x_{6j} \geq x_{2j} & \forall j = 1,2,3\\
& x_{7j} \leq 15 & \forall j = 1,2,3\\
& x_{8j} \leq 15 & \forall j = 1,2,3\\
& \text{sgn}(x_{7j}) = \text{sgn}(x_{3j}) & \forall j = 1,2,3\\
& \text{sgn}(x_{8j}) = \text{sgn}(x_{4j}) & \forall j = 1,2,3\\
& x_{ij} \in \mathbb{N}_0 & \forall i = 1,2,\ldots,8 \hspace{0.2cm} \forall j = 1,2,3
\end{aligned}
$$
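Whatever solver is used, a plain feasibility check for these constraints is handy, e.g. to filter or penalize candidates in a black-box search. A minimal NumPy sketch of our own (is_feasible is a hypothetical helper). Row $i$ of the math is row $i-1$ of the array because Python indexes from 0:

```python
import numpy as np

def is_feasible(x):
    """Check the constraints above for an 8x3 integer array x
    (rows = variables 1..8, columns = time periods 1..3)."""
    x = np.asarray(x)
    if x.shape != (8, 3) or not np.issubdtype(x.dtype, np.integer) or (x < 0).any():
        return False                                     # x_ij must be in N_0
    return bool(
        x[2:6, :].sum() == 10                            # sum over i = 3..6, j = 1..3
        and (x[2] + x[4] >= x[0]).all()                  # x_3j + x_5j >= x_1j
        and (x[3] + x[5] >= x[1]).all()                  # x_4j + x_6j >= x_2j
        and (x[6] <= 15).all() and (x[7] <= 15).all()    # x_7j, x_8j <= 15
        and (np.sign(x[6]) == np.sign(x[2])).all()       # sgn(x_7j) = sgn(x_3j)
        and (np.sign(x[7]) == np.sign(x[3])).all()       # sgn(x_8j) = sgn(x_4j)
    )

x = np.zeros((8, 3), dtype=int)
x[2, 0], x[3, 1], x[6, 0], x[7, 1] = 4, 6, 1, 2
print(is_feasible(x))  # True
```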

Can anyone recommend any Python packages that would be able to solve this problem? Any commercial software with an interface to Python and a free academic license/evaluation period would also be great.

EDIT: It should be noted that the optimization does not have to find a global minimum (although that is, of course, preferred).


- I might not have explained the problem well enough (see the updated question). It is nonlinear and I am certain that the optimal solution will have D's unequal to 0. – pir, Jun 7, 2015 at 8:01

- @felbo: I'd guess that your nonlinearity consists of the restrictions on signs of certain pairs of unknowns. If you are asking how to solve this problem with off-the-shelf software packages, one approach would be breaking each of those pair-sign constraints into pairs of inequalities, e.g. $x_{7,j} \gt 0$ and $x_{3,j} \gt 0$ OR $x_{7,j} \lt 0$ and $x_{3,j} \lt 0$ (trusting you to fill in the details about how signs for zero values should be treated). But perhaps you are not as much interested in this one problem as in a broader survey of integer optimization software. – hardmath ♦, Jun 7, 2015 at 14:00

- @hardmath: I'm not interested in a broader survey of integer optimization software, but in solving this exact problem. Breaking the pair-sign constraints into pairs of inequalities sounds like a great idea if that can be used as input for off-the-shelf packages! The nonlinearity I mentioned in the question is not regarding the constraints, but regarding the function $f(x)$. Do you have any recommendation on off-the-shelf software for black box optimization after your reformulation of the constraints? – pir, Jun 7, 2015 at 14:12
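Because every $x_{ij}$ is a non-negative integer, each sign constraint only asks whether two entries are both zero or both positive. That means hardmath's suggestion can be written with linear constraints alone. A sketch, introducing binary indicator variables $z_j$ and $w_j$ (our own notation, not part of the question):

$$
\begin{aligned}
& z_j \leq x_{3j} \leq 10\, z_j, \quad z_j \leq x_{7j} \leq 15\, z_j & \forall j = 1,2,3\\
& w_j \leq x_{4j} \leq 10\, w_j, \quad w_j \leq x_{8j} \leq 15\, w_j & \forall j = 1,2,3\\
& z_j, w_j \in \{0, 1\} & \forall j = 1,2,3
\end{aligned}
$$

If $z_j = 0$, both $x_{3j}$ and $x_{7j}$ are forced to 0. If $z_j = 1$, both are at least 1, so $\text{sgn}(x_{7j}) = \text{sgn}(x_{3j})$. The bound $x_{3j} \leq 10$ is already implied by the equality constraint, since $x_{3j}$ is one of the non-negative terms summing to 10. The bound $x_{7j} \leq 15\, z_j$ also absorbs the original $x_{7j} \leq 15$. The same argument with $w_j$ covers the $x_{4j}$/$x_{8j}$ pair.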