Which review is that? A guide to review types


Methodological Review


"A methodological review is a type of systematic secondary research (i.e., research synthesis) which focuses on summarising the state-of-the-art methodological practices of research in a substantive field or topic" (Chong & Reinders, 2021).

Methodological reviews "can be performed to examine any methodological issues relating to the design, conduct and review of research studies and also evidence syntheses" (Munn et al., 2018).

Further Reading/Resources

Clarke, M., Oxman, A. D., Paulsen, E., Higgins, J. P. T., & Green, S. (2011). Appendix A: Guide to the contents of a Cochrane Methodology protocol and review. Cochrane Handbook for systematic reviews of interventions.

Aguinis, H., Ramani, R. S., & Alabduljader, N. (2023). Best-Practice Recommendations for Producers, Evaluators, and Users of Methodological Literature Reviews. Organizational Research Methods, 26(1), 46-76. https://doi.org/10.1177/1094428120943281

Jha, C. K., & Kolekar, M. H. (2021). Electrocardiogram data compression techniques for cardiac healthcare systems: A methodological review. IRBM.

References

Munn, Z., Stern, C., Aromataris, E., Lockwood, C., & Jordan, Z. (2018). What kind of systematic review should I conduct? A proposed typology and guidance for systematic reviewers in the medical and health sciences. BMC Medical Research Methodology, 18(1), 1-9.

Chong, S. W., & Reinders, H. (2021). A methodological review of qualitative research syntheses in CALL: The state-of-the-art. System, 103, 102646.


- Last Updated: Jul 4, 2024 8:46 AM

- URL: https://unimelb.libguides.com/whichreview


How to Write a Literature Review | Guide, Examples, & Templates

Published on January 2, 2023 by Shona McCombes. Revised on September 11, 2023.

What is a literature review? A literature review is a survey of scholarly sources on a specific topic. It provides an overview of current knowledge, allowing you to identify relevant theories, methods, and gaps in the existing research that you can later apply to your paper, thesis, or dissertation topic.

There are five key steps to writing a literature review:

- Search for relevant literature

- Evaluate sources

- Identify themes, debates, and gaps

- Outline the structure

- Write your literature review

A good literature review doesn’t just summarize sources; it analyzes, synthesizes, and critically evaluates to give a clear picture of the state of knowledge on the subject.


What is the purpose of a literature review?

When you write a thesis, dissertation, or research paper, you will likely have to conduct a literature review to situate your research within existing knowledge. The literature review gives you a chance to:

- Demonstrate your familiarity with the topic and its scholarly context

- Develop a theoretical framework and methodology for your research

- Position your work in relation to other researchers and theorists

- Show how your research addresses a gap or contributes to a debate

- Evaluate the current state of research and demonstrate your knowledge of the scholarly debates around your topic.

Writing literature reviews is a particularly important skill if you want to apply for graduate school or pursue a career in research. We’ve written a step-by-step guide that you can follow below.


Examples of literature reviews

Writing literature reviews can be quite challenging! A good starting point could be to look at some examples, depending on what kind of literature review you’d like to write.

- Example literature review #1: “Why Do People Migrate? A Review of the Theoretical Literature” (Theoretical literature review about the development of economic migration theory from the 1950s to today.)

- Example literature review #2: “Literature review as a research methodology: An overview and guidelines” (Methodological literature review about interdisciplinary knowledge acquisition and production.)

- Example literature review #3: “The Use of Technology in English Language Learning: A Literature Review” (Thematic literature review about the effects of technology on language acquisition.)

- Example literature review #4: “Learners’ Listening Comprehension Difficulties in English Language Learning: A Literature Review” (Chronological literature review about how the concept of listening skills has changed over time.)


Step 1 – Search for relevant literature

Before you begin searching for literature, you need a clearly defined topic.

If you are writing the literature review section of a dissertation or research paper, you will search for literature related to your research problem and questions.

Make a list of keywords

Start by creating a list of keywords related to your research question. Include each of the key concepts or variables you’re interested in, and list any synonyms and related terms. You can add to this list as you discover new keywords in the process of your literature search.

- Social media, Facebook, Instagram, Twitter, Snapchat, TikTok

- Body image, self-perception, self-esteem, mental health

- Generation Z, teenagers, adolescents, youth

Search for relevant sources

Use your keywords to begin searching for sources. Some useful databases to search for journals and articles include:

- Your university’s library catalogue

- Google Scholar

- Project Muse (humanities and social sciences)

- Medline (life sciences and biomedicine)

- EconLit (economics)

- Inspec (physics, engineering and computer science)

You can also use boolean operators to help narrow down your search.
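As a rough illustration of how Boolean operators combine a keyword list into a search string, the sketch below joins synonyms with OR and separate concepts with AND. The function name `build_query` and the exact quoting rules are assumptions made for illustration; each database has its own syntax, so check its help pages before pasting a query.

```python
def build_query(concept_groups):
    """Join synonyms with OR inside each group, then join groups with AND.

    Hypothetical helper: real databases differ in quoting and operator syntax.
    """
    clauses = []
    for terms in concept_groups:
        # Put multi-word phrases in quotes so they are searched as a unit.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Keyword groups taken from the example list above (one group per concept).
groups = [
    ["social media", "Instagram", "TikTok"],
    ["body image", "self-esteem"],
    ["teenagers", "adolescents"],
]

query = build_query(groups)
print(query)
```

OR widens the search within a concept, while AND narrows it by requiring every concept to appear.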

Make sure to read the abstract to find out whether an article is relevant to your question. When you find a useful book or article, you can check the bibliography to find other relevant sources.

Step 2 – Evaluate and select sources

You likely won’t be able to read absolutely everything that has been written on your topic, so it will be necessary to evaluate which sources are most relevant to your research question.

For each publication, ask yourself:

- What question or problem is the author addressing?

- What are the key concepts and how are they defined?

- What are the key theories, models, and methods?

- Does the research use established frameworks or take an innovative approach?

- What are the results and conclusions of the study?

- How does the publication relate to other literature in the field? Does it confirm, add to, or challenge established knowledge?

- What are the strengths and weaknesses of the research?

Make sure the sources you use are credible, and make sure you read any landmark studies and major theories in your field of research.


Take notes and cite your sources

As you read, you should also begin the writing process. Take notes that you can later incorporate into the text of your literature review.

It is important to keep track of your sources with citations to avoid plagiarism. It can be helpful to make an annotated bibliography, where you compile full citation information and write a paragraph of summary and analysis for each source. This helps you remember what you read and saves time later in the process.

Step 3 – Identify themes, debates, and gaps

To begin organizing your literature review’s argument and structure, be sure you understand the connections and relationships between the sources you’ve read. Based on your reading and notes, you can look for:

- Trends and patterns (in theory, method or results): do certain approaches become more or less popular over time?

- Themes: what questions or concepts recur across the literature?

- Debates, conflicts and contradictions: where do sources disagree?

- Pivotal publications: are there any influential theories or studies that changed the direction of the field?

- Gaps: what is missing from the literature? Are there weaknesses that need to be addressed?

This step will help you work out the structure of your literature review and (if applicable) show how your own research will contribute to existing knowledge.

- Most research has focused on young women.

- There is an increasing interest in the visual aspects of social media.

- But there is still a lack of robust research on highly visual platforms like Instagram and Snapchat—this is a gap that you could address in your own research.

Step 4 – Outline your literature review’s structure

There are various approaches to organizing the body of a literature review. Depending on the length of your literature review, you can combine several of these strategies (for example, your overall structure might be thematic, but each theme is discussed chronologically).

Chronological

The simplest approach is to trace the development of the topic over time. However, if you choose this strategy, be careful to avoid simply listing and summarizing sources in order.

Try to analyze patterns, turning points and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred.

Thematic

If you have found some recurring central themes, you can organize your literature review into subsections that address different aspects of the topic.

For example, if you are reviewing literature about inequalities in migrant health outcomes, key themes might include healthcare policy, language barriers, cultural attitudes, legal status, and economic access.

Methodological

If you draw your sources from different disciplines or fields that use a variety of research methods, you might want to compare the results and conclusions that emerge from different approaches. For example:

- Look at what results have emerged in qualitative versus quantitative research

- Discuss how the topic has been approached by empirical versus theoretical scholarship

- Divide the literature into sociological, historical, and cultural sources

Theoretical

A literature review is often the foundation for a theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts.

You might argue for the relevance of a specific theoretical approach, or combine various theoretical concepts to create a framework for your research.

Step 5 – Write your literature review

Like any other academic text, your literature review should have an introduction, a main body, and a conclusion. What you include in each depends on the objective of your literature review.

The introduction should clearly establish the focus and purpose of the literature review.

Depending on the length of your literature review, you might want to divide the body into subsections. You can use a subheading for each theme, time period, or methodological approach.

As you write, you can follow these tips:

- Summarize and synthesize: give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: don’t just paraphrase other researchers — add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically evaluate: mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: use transition words and topic sentences to draw connections, comparisons and contrasts

In the conclusion, you should summarize the key findings you have taken from the literature and emphasize their significance.

When you’ve finished writing and revising your literature review, don’t forget to proofread thoroughly before submitting.



Frequently asked questions

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a thesis, dissertation, or research paper, in order to situate your work in relation to existing knowledge.

There are several reasons to conduct a literature review at the beginning of a research project:

- To familiarize yourself with the current state of knowledge on your topic

- To ensure that you’re not just repeating what others have already done

- To identify gaps in knowledge and unresolved problems that your research can address

- To develop your theoretical framework and methodology

- To provide an overview of the key findings and debates on the topic

Writing the literature review shows your reader how your work relates to existing research and what new insights it will contribute.

The literature review usually comes near the beginning of your thesis or dissertation. After the introduction, it grounds your research in a scholarly field and leads directly to your theoretical framework or methodology.

A literature review is a survey of credible sources on a topic, often used in dissertations, theses, and research papers. Literature reviews give an overview of knowledge on a subject, helping you identify relevant theories and methods, as well as gaps in existing research. Literature reviews are set up similarly to other academic texts, with an introduction, a main body, and a conclusion.

An annotated bibliography is a list of source references that has a short description (called an annotation) for each of the sources. It is often assigned as part of the research process for a paper.


McCombes, S. (2023, September 11). How to Write a Literature Review | Guide, Examples, & Templates. Scribbr. Retrieved July 8, 2024, from https://www.scribbr.com/dissertation/literature-review/


Research Methods: Literature Reviews


A literature review involves researching, reading, analyzing, evaluating, and summarizing scholarly literature (typically journals and articles) about a specific topic. The results of a literature review may be an entire report or article, or may be part of an article, thesis, dissertation, or grant proposal. A literature review helps the author learn about the history and nature of their topic, and identify research gaps and problems.

Steps & Elements

Problem formulation

- Determine your topic and its components by asking a question

- Research: locate literature related to your topic to identify the gap(s) that can be addressed

- Read: read the articles or other sources of information

- Analyze: assess the findings for relevancy

- Evaluate: determine how the articles are relevant to your research and what the key findings are

- Synthesize: write about the key findings and how they are relevant to your research

Elements of a Literature Review

- Summarize subject, issue or theory under consideration, along with objectives of the review

- Divide works under review into categories (e.g. those in support of a particular position, those against, those offering alternative theories entirely)

- Explain how each work is similar to and how it varies from the others

- Conclude which pieces are best considered in their argument, are most convincing of their opinions, and make the greatest contribution to the understanding and development of an area of research

Writing a Literature Review Resources

- How to Write a Literature Review From the Wesleyan University Library

- Write a Literature Review From the University of California Santa Cruz Library. A brief overview of a literature review, including a list of stages for writing a lit review.

- Literature Reviews From the University of North Carolina Writing Center. Detailed information about writing a literature review.

- Cronin, P., Ryan, F., & Coughlan, M. (2008). Undertaking a literature review: A step-by-step approach. British Journal of Nursing, 17(1), 38-43.


- Last Updated: Jul 8, 2024 3:13 PM

- URL: https://guides.auraria.edu/researchmethods


Literature Review – Types Writing Guide and Examples


Literature Review

Definition:

A literature review is a comprehensive and critical analysis of the existing literature on a particular topic or research question. It involves identifying, evaluating, and synthesizing relevant literature, including scholarly articles, books, and other sources, to provide a summary and critical assessment of what is known about the topic.

Types of Literature Review

Types of Literature Review are as follows:

- Narrative literature review: This type of review involves a comprehensive summary and critical analysis of the available literature on a particular topic or research question. It is often used as an introductory section of a research paper.

- Systematic literature review: This is a rigorous and structured review that follows a pre-defined protocol to identify, evaluate, and synthesize all relevant studies on a specific research question. It is often used in evidence-based practice.

- Meta-analysis: This is a quantitative review that uses statistical methods to combine data from multiple studies to derive a summary effect size. It provides a more precise estimate of the overall effect than any individual study.

- Scoping review: This is a preliminary review that aims to map the existing literature on a broad topic area to identify research gaps and areas for further investigation.

- Critical literature review: This type of review evaluates the strengths and weaknesses of the existing literature on a particular topic or research question. It aims to provide a critical analysis of the literature and identify areas where further research is needed.

- Conceptual literature review: This review synthesizes and integrates theories and concepts from multiple sources to provide a new perspective on a particular topic. It aims to provide a theoretical framework for understanding a particular research question.

- Rapid literature review: This is a quick review that provides a snapshot of the current state of knowledge on a specific research question or topic. It is often used when time and resources are limited.

- Thematic literature review: This review identifies and analyzes common themes and patterns across a body of literature on a particular topic. It aims to provide a comprehensive overview of the literature and identify key themes and concepts.

- Realist literature review: This review is often used in social science research and aims to identify how and why certain interventions work in certain contexts. It takes into account the context and complexities of real-world situations.

- State-of-the-art literature review: This type of review provides an overview of the current state of knowledge in a particular field, highlighting the most recent and relevant research. It is often used in fields where knowledge is rapidly evolving, such as technology or medicine.

- Integrative literature review: This type of review synthesizes and integrates findings from multiple studies on a particular topic to identify patterns, themes, and gaps in the literature. It aims to provide a comprehensive understanding of the current state of knowledge on a particular topic.

- Umbrella literature review: This is a review of existing reviews. It compiles evidence from multiple systematic reviews and meta-analyses on a topic, rather than from primary studies, to give a high-level overview of the evidence.

- Historical literature review: This type of review examines the historical development of research on a particular topic or research question. It aims to provide a historical context for understanding the current state of knowledge on a particular topic.

- Problem-oriented literature review: This review focuses on a specific problem or issue and examines the literature to identify potential solutions or interventions. It aims to provide practical recommendations for addressing a particular problem or issue.

- Mixed-methods literature review: This type of review combines quantitative and qualitative methods to synthesize and analyze the available literature on a particular topic. It aims to provide a more comprehensive understanding of the research question by combining different types of evidence.
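To make the meta-analysis entry in the list above more concrete, here is a minimal sketch of one common pooling approach, a fixed-effect inverse-variance average. The effect sizes and standard errors are made-up illustrative numbers, and the function name `pooled_effect` is hypothetical; a real meta-analysis would also assess heterogeneity and often use a random-effects model.

```python
import math


def pooled_effect(effects, std_errors):
    """Fixed-effect inverse-variance pooling (illustrative sketch only)."""
    # Each study is weighted by the inverse of its variance (1 / SE^2),
    # so more precise studies count for more.
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    estimate = sum(w * e for w, e in zip(weights, effects)) / total
    # The pooled standard error is smaller than any single study's SE.
    pooled_se = math.sqrt(1.0 / total)
    return estimate, pooled_se


# Hypothetical effect sizes and standard errors from three studies.
effects = [0.30, 0.10, 0.25]
std_errors = [0.10, 0.20, 0.15]

estimate, pooled_se = pooled_effect(effects, std_errors)
print(f"pooled effect = {estimate:.3f}, standard error = {pooled_se:.3f}")
```

The pooled standard error being smaller than every individual standard error is what the list entry means by a more precise estimate than any single study.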

Parts of Literature Review

Parts of a literature review are as follows:

Introduction

The introduction of a literature review typically provides background information on the research topic and why it is important. It outlines the objectives of the review, the research question or hypothesis, and the scope of the review.

Literature Search

This section outlines the search strategy and databases used to identify relevant literature. The search terms used, inclusion and exclusion criteria, and any limitations of the search are described.

Literature Analysis

The literature analysis is the main body of the literature review. This section summarizes and synthesizes the literature that is relevant to the research question or hypothesis. The review should be organized thematically, chronologically, or by methodology, depending on the research objectives.

Critical Evaluation

Critical evaluation involves assessing the quality and validity of the literature. This includes evaluating the reliability and validity of the studies reviewed, the methodology used, and the strength of the evidence.

Conclusion

The conclusion of the literature review should summarize the main findings, identify any gaps in the literature, and suggest areas for future research. It should also reiterate the importance of the research question or hypothesis and the contribution of the literature review to the overall research project.

References

The references list includes all the sources cited in the literature review, and follows a specific referencing style (e.g., APA, MLA, Harvard).

How to Write a Literature Review

Here are some steps to follow when writing a literature review:

- Define your research question or topic: Before starting your literature review, it is essential to define your research question or topic. This will help you identify relevant literature and determine the scope of your review.

- Conduct a comprehensive search: Use databases and search engines to find relevant literature. Look for peer-reviewed articles, books, and other academic sources that are relevant to your research question or topic.

- Evaluate the sources: Once you have found potential sources, evaluate them critically to determine their relevance, credibility, and quality. Look for recent publications, reputable authors, and reliable sources of data and evidence.

- Organize your sources: Group the sources by theme, method, or research question. This will help you identify similarities and differences among the literature, and provide a structure for your literature review.

- Analyze and synthesize the literature: Analyze each source in depth, identifying the key findings, methodologies, and conclusions. Then, synthesize the information from the sources, identifying patterns and themes in the literature.

- Write the literature review: Start with an introduction that provides an overview of the topic and the purpose of the literature review. Then, organize the literature according to your chosen structure, and analyze and synthesize the sources. Finally, provide a conclusion that summarizes the key findings of the literature review, identifies gaps in knowledge, and suggests areas for future research.

- Edit and proofread: Once you have written your literature review, edit and proofread it carefully to ensure that it is well-organized, clear, and concise.

Examples of Literature Review

Here’s an example of how a literature review can be conducted for a thesis on the topic of “The Impact of Social Media on Teenagers’ Mental Health”:

- Start by identifying the key terms related to your research topic. In this case, the key terms are “social media,” “teenagers,” and “mental health.”

- Use academic databases like Google Scholar, JSTOR, or PubMed to search for relevant articles, books, and other publications. Use these keywords in your search to narrow down your results.

- Evaluate the sources you find to determine if they are relevant to your research question. You may want to consider the publication date, author’s credentials, and the journal or book publisher.

- Begin reading and taking notes on each source, paying attention to key findings, methodologies used, and any gaps in the research.

- Organize your findings into themes or categories. For example, you might categorize your sources into those that examine the impact of social media on self-esteem, those that explore the effects of cyberbullying, and those that investigate the relationship between social media use and depression.

- Synthesize your findings by summarizing the key themes and highlighting any gaps or inconsistencies in the research. Identify areas where further research is needed.

- Use your literature review to inform your research questions and hypotheses for your thesis.
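The organizing step in the list above can be sketched in a few lines of code. The source labels and theme tags below are hypothetical placeholders standing in for your own reading notes, and `group_by_theme` is an illustrative helper, not part of any tool mentioned here.

```python
from collections import defaultdict

# Hypothetical reading notes: (source label, theme) pairs.
notes = [
    ("Source A", "self-esteem"),
    ("Source B", "cyberbullying"),
    ("Source C", "depression"),
    ("Source D", "self-esteem"),
]


def group_by_theme(notes):
    """Collect source labels under the theme each one was tagged with."""
    grouped = defaultdict(list)
    for source, theme in notes:
        grouped[theme].append(source)
    return dict(grouped)


themes = group_by_theme(notes)
for theme, sources in sorted(themes.items()):
    print(f"{theme}: {', '.join(sources)}")
```

Themes with only one or two sources attached may be worth a second look when you search for gaps, though a low count on its own does not show that a gap exists.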

For example, after conducting a literature review on the impact of social media on teenagers’ mental health, a thesis statement might look like this:

“Using a mixed-methods approach, this study aims to investigate the relationship between social media use and mental health outcomes in teenagers. Specifically, the study will examine the effects of cyberbullying, social comparison, and excessive social media use on self-esteem, anxiety, and depression. Through an analysis of survey data and qualitative interviews with teenagers, the study will provide insight into the complex relationship between social media use and mental health outcomes, and identify strategies for promoting positive mental health outcomes in young people.”

Reference: Smith, J., Jones, M., & Lee, S. (2019). The effects of social media use on adolescent mental health: A systematic review. Journal of Adolescent Health, 65(2), 154-165. doi:10.1016/j.jadohealth.2019.03.024

Reference Example: Author, A. A., Author, B. B., & Author, C. C. (Year). Title of article. Title of Journal, volume number(issue number), page range. doi:0000000/000000000000 or URL
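The reference pattern above can be expressed as a small formatting function. This is only a sketch of the pattern as written in the example line; `format_reference` is a hypothetical helper, it covers journal articles only, and it does not handle the many special cases in the full APA rules. The sample entry is the Cronin et al. (2008) article listed earlier in this guide.

```python
def format_reference(authors, year, title, journal, volume, issue, pages, doi=None):
    """Format a journal article following the pattern shown above (sketch)."""
    # Join authors as "A, B, & C"; a single author is used as-is.
    if len(authors) > 1:
        author_str = ", ".join(authors[:-1]) + ", & " + authors[-1]
    else:
        author_str = authors[0]
    ref = f"{author_str} ({year}). {title}. {journal}, {volume}({issue}), {pages}."
    if doi:
        ref += f" doi:{doi}"
    return ref


reference = format_reference(
    authors=["Cronin, P.", "Ryan, F.", "Coughlan, M."],
    year=2008,
    title="Undertaking a literature review: A step-by-step approach",
    journal="British Journal of Nursing",
    volume=17,
    issue=1,
    pages="38-43",
)
print(reference)
```

In practice a reference manager will handle this for you; the point of the sketch is only to show which pieces of information the pattern needs.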

Applications of Literature Review

Some applications of literature reviews in different fields:

- Social Sciences: In social sciences, literature reviews are used to identify gaps in existing research, to develop research questions, and to provide a theoretical framework for research. Literature reviews are commonly used in fields such as sociology, psychology, anthropology, and political science.

- Natural Sciences: In natural sciences, literature reviews are used to summarize and evaluate the current state of knowledge in a particular field or subfield. Literature reviews can help researchers identify areas where more research is needed and provide insights into the latest developments in a particular field. Fields such as biology, chemistry, and physics commonly use literature reviews.

- Health Sciences: In health sciences, literature reviews are used to evaluate the effectiveness of treatments, identify best practices, and determine areas where more research is needed. Literature reviews are commonly used in fields such as medicine, nursing, and public health.

- Humanities: In humanities, literature reviews are used to identify gaps in existing knowledge, develop new interpretations of texts or cultural artifacts, and provide a theoretical framework for research. Literature reviews are commonly used in fields such as history, literary studies, and philosophy.

Role of Literature Review in Research

Here are some applications of literature review in research:

- Identifying Research Gaps: Literature review helps researchers identify gaps in existing research and literature related to their research question. This allows them to develop new research questions and hypotheses to fill those gaps.

- Developing Theoretical Framework: Literature review helps researchers develop a theoretical framework for their research. By analyzing and synthesizing existing literature, researchers can identify the key concepts, theories, and models that are relevant to their research.

- Selecting Research Methods: Literature review helps researchers select appropriate research methods and techniques based on previous research. It also helps researchers to identify potential biases or limitations of certain methods and techniques.

- Data Collection and Analysis: Literature review supports data collection and analysis by providing a foundation for the development of data collection instruments and methods. It also helps researchers to identify relevant data sources and potential data analysis techniques.

- Communicating Results: Literature review helps researchers to communicate their results effectively by providing a context for their research. It also helps to justify the significance of their findings in relation to existing research and literature.

Purpose of Literature Review

Some of the specific purposes of a literature review are as follows:

- To provide context: A literature review helps to provide context for your research by situating it within the broader body of literature on the topic.

- To identify gaps and inconsistencies: A literature review helps to identify areas where further research is needed or where there are inconsistencies in the existing literature.

- To synthesize information: A literature review helps to synthesize the information from multiple sources and present a coherent and comprehensive picture of the current state of knowledge on the topic.

- To identify key concepts and theories: A literature review helps to identify key concepts and theories that are relevant to your research question and provide a theoretical framework for your study.

- To inform research design: A literature review can inform the design of your research study by identifying appropriate research methods, data sources, and research questions.

Characteristics of Literature Review

Some characteristics of a literature review are as follows:

- Identifying gaps in knowledge: A literature review helps to identify gaps in the existing knowledge and research on a specific topic or research question. By analyzing and synthesizing the literature, you can identify areas where further research is needed and where new insights can be gained.

- Establishing the significance of your research: A literature review helps to establish the significance of your own research by placing it in the context of existing research. By demonstrating the relevance of your research to the existing literature, you can establish its importance and value.

- Informing research design and methodology: A literature review helps to inform research design and methodology by identifying the most appropriate research methods, techniques, and instruments. By reviewing the literature, you can identify the strengths and limitations of different research methods and techniques, and select the most appropriate ones for your own research.

- Supporting arguments and claims: A literature review provides evidence to support arguments and claims made in academic writing. By citing and analyzing the literature, you can provide a solid foundation for your own arguments and claims.

- Identifying potential collaborators and mentors: A literature review can help identify potential collaborators and mentors by identifying researchers and practitioners who are working on related topics or using similar methods. By building relationships with these individuals, you can gain valuable insights and support for your own research and practice.

- Keeping up-to-date with the latest research: A literature review helps to keep you up-to-date with the latest research on a specific topic or research question. By regularly reviewing the literature, you can stay informed about the latest findings and developments in your field.

Advantages of Literature Review

There are several advantages to conducting a literature review as part of a research project, including:

- Establishing the significance of the research: A literature review helps to establish the significance of the research by demonstrating the gap or problem in the existing literature that the study aims to address.

- Identifying key concepts and theories: A literature review can help to identify key concepts and theories that are relevant to the research question, and provide a theoretical framework for the study.

- Supporting the research methodology: A literature review can inform the research methodology by identifying appropriate research methods, data sources, and research questions.

- Providing a comprehensive overview of the literature: A literature review provides a comprehensive overview of the current state of knowledge on a topic, allowing the researcher to identify key themes, debates, and areas of agreement or disagreement.

- Identifying potential research questions: A literature review can help to identify potential research questions and areas for further investigation.

- Avoiding duplication of research: A literature review can help to avoid duplication of research by identifying what has already been done on a topic, and what remains to be done.

- Enhancing the credibility of the research: A literature review helps to enhance the credibility of the research by demonstrating the researcher’s knowledge of the existing literature and their ability to situate their research within a broader context.

Limitations of Literature Review

The limitations of a literature review are as follows:

- Limited scope: Literature reviews can only cover the existing literature on a particular topic, which may be limited in scope or depth.

- Publication bias: Literature reviews may be influenced by publication bias, which occurs when researchers are more likely to publish positive results than negative ones. This can lead to an incomplete or biased picture of the literature.

- Quality of sources: The quality of the literature reviewed can vary widely, and not all sources may be reliable or valid.

- Time-limited: Literature reviews can become quickly outdated as new research is published, making it difficult to keep up with the latest developments in a field.

- Subjective interpretation: Literature reviews can be subjective, and the interpretation of the findings can vary depending on the researcher’s perspective or bias.

- Lack of original data: Literature reviews do not generate new data, but rather rely on the analysis of existing studies.

- Risk of plagiarism: It is important to ensure that literature reviews do not inadvertently contain plagiarism, which can occur when researchers use the work of others without proper attribution.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

- Open access

- Published: 07 September 2020

A tutorial on methodological studies: the what, when, how and why

- Lawrence Mbuagbaw ORCID: orcid.org/0000-0001-5855-5461 1 , 2 , 3 ,

- Daeria O. Lawson 1 ,

- Livia Puljak 4 ,

- David B. Allison 5 &

- Lehana Thabane 1 , 2 , 6 , 7 , 8

BMC Medical Research Methodology volume 20, Article number: 226 (2020)

Methodological studies – studies that evaluate the design, analysis or reporting of other research-related reports – play an important role in health research. They help to highlight issues in the conduct of research with the aim of improving health research methodology, and ultimately reducing research waste.

We provide an overview of some of the key aspects of methodological studies such as what they are, and when, how and why they are done. We adopt a “frequently asked questions” format to facilitate reading this paper and provide multiple examples to help guide researchers interested in conducting methodological studies. Some of the topics addressed include: is it necessary to publish a study protocol? How to select relevant research reports and databases for a methodological study? What approaches to data extraction and statistical analysis should be considered when conducting a methodological study? What are potential threats to validity and is there a way to appraise the quality of methodological studies?

Appropriate reflection and application of basic principles of epidemiology and biostatistics are required in the design and analysis of methodological studies. This paper provides an introduction for further discussion about the conduct of methodological studies.

The field of meta-research (or research-on-research) has proliferated in recent years in response to issues with research quality and conduct [ 1 , 2 , 3 ]. As the name suggests, this field targets issues with research design, conduct, analysis and reporting. Various types of research reports are often examined as the unit of analysis in these studies (e.g. abstracts, full manuscripts, trial registry entries). Like many other novel fields of research, meta-research has seen a proliferation of use before the development of reporting guidance. For example, this was the case with randomized trials for which risk of bias tools and reporting guidelines were only developed much later – after many trials had been published and noted to have limitations [ 4 , 5 ]; and for systematic reviews as well [ 6 , 7 , 8 ]. However, in the absence of formal guidance, studies that report on research differ substantially in how they are named, conducted and reported [ 9 , 10 ]. This creates challenges in identifying, summarizing and comparing them. In this tutorial paper, we will use the term methodological study to refer to any study that reports on the design, conduct, analysis or reporting of primary or secondary research-related reports (such as trial registry entries and conference abstracts).

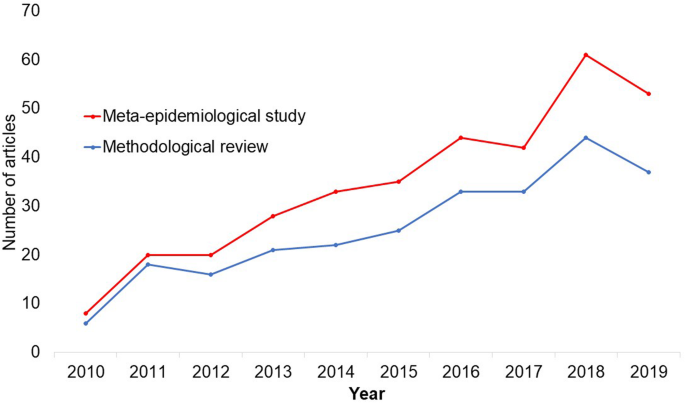

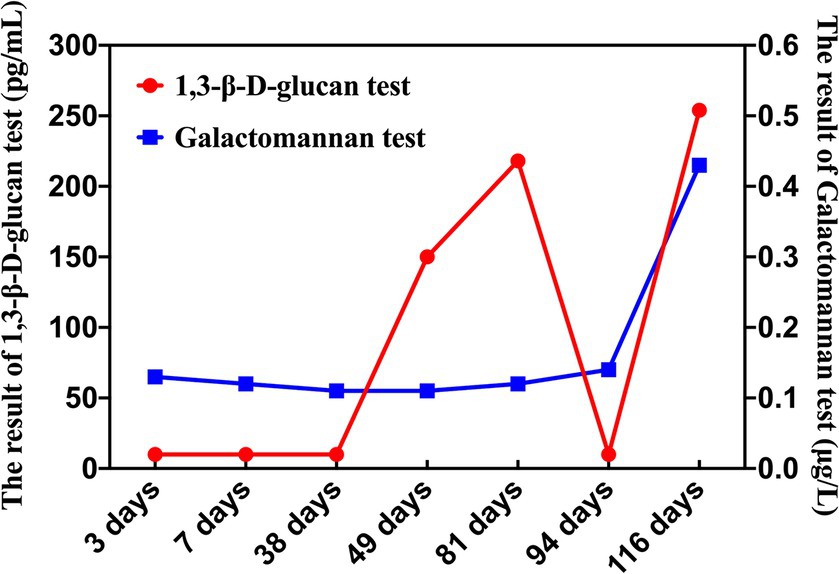

In the past 10 years, there has been an increase in the use of terms related to methodological studies (based on records retrieved with a keyword search [in the title and abstract] for “methodological review” and “meta-epidemiological study” in PubMed up to December 2019), suggesting that these studies may be appearing more frequently in the literature (see Fig. 1).

Fig. 1: Trends in the number of studies that mention “methodological review” or “meta-epidemiological study” in PubMed.

The methods used in many methodological studies have been borrowed from systematic and scoping reviews. This practice has influenced the direction of the field, with many methodological studies including searches of electronic databases, screening of records, duplicate data extraction and assessments of risk of bias in the included studies. However, the research questions posed in methodological studies do not always require the approaches listed above, and guidance is needed on when and how to apply these methods to a methodological study. Even though methodological studies can be conducted on qualitative or mixed methods research, this paper focuses on and draws examples exclusively from quantitative research.

The objectives of this paper are to provide some insights on how to conduct methodological studies so that there is greater consistency between the research questions posed, and the design, analysis and reporting of findings. We provide multiple examples to illustrate concepts and a proposed framework for categorizing methodological studies in quantitative research.

What is a methodological study?

Any study that describes or analyzes methods (design, conduct, analysis or reporting) in published (or unpublished) literature is a methodological study. Consequently, the scope of methodological studies is quite extensive and includes, but is not limited to, topics as diverse as: research question formulation [ 11 ]; adherence to reporting guidelines [ 12 , 13 , 14 ] and consistency in reporting [ 15 ]; approaches to study analysis [ 16 ]; investigating the credibility of analyses [ 17 ]; and studies that synthesize these methodological studies [ 18 ]. While the nomenclature of methodological studies is not uniform, the intents and purposes of these studies remain fairly consistent – to describe or analyze methods in primary or secondary studies. As such, methodological studies may also be classified as a subtype of observational studies.

Parallel to this are experimental studies that compare different methods. Even though they play an important role in informing optimal research methods, experimental methodological studies are beyond the scope of this paper. Examples of such studies include the randomized trials by Buscemi et al., comparing single data extraction to double data extraction [ 19 ], and Carrasco-Labra et al., comparing approaches to presenting findings in Grading of Recommendations, Assessment, Development and Evaluations (GRADE) summary of findings tables [ 20 ]. In these studies, the unit of analysis is the person or groups of individuals applying the methods. We also direct readers to the Studies Within a Trial (SWAT) and Studies Within a Review (SWAR) programme operated through the Hub for Trials Methodology Research, as a potentially useful resource for these types of experimental studies [ 21 ]. Lastly, this paper is not meant to inform the conduct of research using computational simulation and mathematical modeling for which some guidance already exists [ 22 ], or studies on the development of methods using consensus-based approaches.

When should we conduct a methodological study?

Methodological studies occupy a unique niche in health research that allows them to inform methodological advances. Methodological studies should also be conducted as precursors to reporting guideline development, as they provide an opportunity to understand current practices, and help to identify the need for guidance and gaps in methodological or reporting quality. For example, the development of the popular Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines was preceded by methodological studies identifying poor reporting practices [ 23 , 24 ]. In these instances, after the reporting guidelines are published, methodological studies can also be used to monitor uptake of the guidelines.

These studies can also be conducted to inform the state of the art for design, analysis and reporting practices across different types of health research fields, with the aim of improving research practices, and preventing or reducing research waste. For example, Samaan et al. conducted a scoping review of adherence to different reporting guidelines in health care literature [ 18 ]. Methodological studies can also be used to determine the factors associated with reporting practices. For example, Abbade et al. investigated journal characteristics associated with the use of the Participants, Intervention, Comparison, Outcome, Timeframe (PICOT) format in framing research questions in trials of venous ulcer disease [ 11 ].

How often are methodological studies conducted?

There is no clear answer to this question. Based on a search of PubMed, the use of related terms (“methodological review” and “meta-epidemiological study”) – and therefore, the number of methodological studies – is on the rise. However, many other terms are used to describe methodological studies. There are also many studies that explore design, conduct, analysis or reporting of research reports, but that do not use any specific terms to describe or label their study design in terms of “methodology”. This diversity in nomenclature makes a census of methodological studies elusive. Appropriate terminology and key words for methodological studies are needed to facilitate improved accessibility for end-users.

Why do we conduct methodological studies?

Methodological studies provide information on the design, conduct, analysis or reporting of primary and secondary research and can be used to appraise quality, quantity, completeness, accuracy and consistency of health research. These issues can be explored in specific fields, journals, databases, geographical regions and time periods. For example, Areia et al. explored the quality of reporting of endoscopic diagnostic studies in gastroenterology [ 25 ]; Knol et al. investigated the reporting of p-values in baseline tables in randomized trials published in high impact journals [ 26 ]; Chen et al. described adherence to the Consolidated Standards of Reporting Trials (CONSORT) statement in Chinese journals [ 27 ]; and Hopewell et al. described the effect of editors’ implementation of CONSORT guidelines on reporting of abstracts over time [ 28 ]. Methodological studies provide useful information to researchers, clinicians, editors, publishers and users of health literature. As a result, these studies have been a cornerstone of important methodological developments in the past two decades and have informed the development of many health research guidelines including the highly cited CONSORT statement [ 5 ].

Where can we find methodological studies?

Methodological studies can be found in most common biomedical bibliographic databases (e.g. Embase, MEDLINE, PubMed, Web of Science). However, the biggest caveat is that methodological studies are hard to identify in the literature due to the wide variety of names used and the lack of comprehensive databases dedicated to them. A handful can be found in the Cochrane Library as “Cochrane Methodology Reviews”, but these studies only cover methodological issues related to systematic reviews. Previous attempts to catalogue all empirical studies of methods used in reviews were abandoned 10 years ago [ 29 ]. In other databases, a variety of search terms may be applied with different levels of sensitivity and specificity.

Some frequently asked questions about methodological studies

In this section, we have outlined responses to questions that might help inform the conduct of methodological studies.

Q: How should I select research reports for my methodological study?

A: Selection of research reports for a methodological study depends on the research question and eligibility criteria. Once a clear research question is set and the nature of literature one desires to review is known, one can then begin the selection process. Selection may begin with a broad search, especially if the eligibility criteria are not apparent. For example, a methodological study of Cochrane Reviews of HIV would not require a complex search as all eligible studies can easily be retrieved from the Cochrane Library after checking a few boxes [ 30 ]. On the other hand, a methodological study of subgroup analyses in trials of gastrointestinal oncology would require a search to find such trials, and further screening to identify trials that conducted a subgroup analysis [ 31 ].

The strategies used for identifying participants in observational studies can apply here. One may use a systematic search to identify all eligible studies. If the number of eligible studies is unmanageable, a random sample of articles can be expected to provide comparable results if it is sufficiently large [ 32 ]. For example, Wilson et al. used a random sample of trials from the Cochrane Stroke Group’s Trial Register to investigate completeness of reporting [ 33 ]. It is possible that a simple random sample would lead to underrepresentation of units (i.e. research reports) that are smaller in number. This is relevant if the investigators wish to compare multiple groups but have too few units in one group. In this case a stratified sample would help to create equal groups. For example, in a methodological study comparing Cochrane and non-Cochrane reviews, Kahale et al. drew random samples from both groups [ 34 ]. Alternatively, systematic or purposeful sampling strategies can be used and we encourage researchers to justify their selected approaches based on the study objective.
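The stratified sampling idea above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in any of the cited studies: the function name `stratified_sample`, the fixed seed, and the made-up sampling frame of 20 Cochrane and 200 non-Cochrane reviews are all assumptions for the example.

```python
import random
from collections import defaultdict

def stratified_sample(reports, stratum_of, n_per_stratum, seed=2020):
    """Draw an equal-sized simple random sample from each stratum.

    reports: list of report identifiers (the sampling frame)
    stratum_of: function mapping a report to its stratum label
    n_per_stratum: number of reports to draw from every stratum
    seed: fixed seed so the draw can be reproduced and reported
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for report in reports:
        strata[stratum_of(report)].append(report)

    sample = {}
    for label in sorted(strata):
        members = sorted(strata[label])  # stable order before sampling
        if len(members) < n_per_stratum:
            raise ValueError(f"stratum {label!r} has only {len(members)} reports")
        sample[label] = rng.sample(members, n_per_stratum)
    return sample

# Hypothetical sampling frame: the smaller group would be underrepresented
# in a simple random sample, so we draw 10 reports from each group instead.
frame = [("cochrane", i) for i in range(20)] + [("non-cochrane", i) for i in range(200)]
picked = stratified_sample(frame, stratum_of=lambda r: r[0], n_per_stratum=10)
```

Fixing and reporting the seed keeps the selection transparent and reproducible, which matters when the sampling approach has to be justified against the study objective.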

Q: How many databases should I search?

A: The number of databases one should search would depend on the approach to sampling, which can include targeting the entire “population” of interest or a sample of that population. If you are interested in including the entire target population for your research question, or drawing a random or systematic sample from it, then a comprehensive and exhaustive search for relevant articles is required. In this case, we recommend using systematic approaches for searching electronic databases (i.e. at least 2 databases with a replicable and time stamped search strategy). The results of your search will constitute a sampling frame from which eligible studies can be drawn.

Alternatively, if your approach to sampling is purposeful, then we recommend targeting the database(s) or data sources (e.g. journals, registries) that include the information you need. For example, if you are conducting a methodological study of high impact journals in plastic surgery and they are all indexed in PubMed, you likely do not need to search any other databases. You may also have a comprehensive list of all journals of interest and can approach your search using the journal names in your database search (or by accessing the journal archives directly from the journal’s website). Even though one could also search journals’ web pages directly, using a database such as PubMed has multiple advantages, such as the use of filters, so the search can be narrowed down to a certain period, or study types of interest. Furthermore, individual journals’ web sites may have different search functionalities, which do not necessarily yield a consistent output.
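For the comprehensive approach, where results from at least two databases form the sampling frame, one possible way to merge the records and keep a time-stamped log is sketched below. The record schema (`doi`, `title`), the DOIs, and the function name `build_sampling_frame` are hypothetical and chosen only for illustration; real exports from PubMed or Embase will look different and may need matching on more than the DOI.

```python
from datetime import datetime, timezone

def build_sampling_frame(searches):
    """Merge records from several database searches, dropping duplicates.

    searches: dict mapping database name -> list of records; each record
    is a dict with 'doi' and 'title' keys (an assumed, simplified schema).
    """
    frame = {}
    for database, records in searches.items():
        for record in records:
            key = record["doi"].strip().lower()  # DOIs are case-insensitive
            entry = frame.setdefault(key, {**record, "sources": []})
            if database not in entry["sources"]:
                entry["sources"].append(database)
    return list(frame.values())

# Made-up results from two databases; one report appears in both.
searches = {
    "PubMed": [{"doi": "10.1000/abc", "title": "Report A"},
               {"doi": "10.1000/def", "title": "Report B"}],
    "Embase": [{"doi": "10.1000/ABC", "title": "Report A"}],
}

sampling_frame = build_sampling_frame(searches)

# A small log that can be reported alongside the search strategy.
search_log = {
    "run_at": datetime.now(timezone.utc).isoformat(),  # time stamp of the search
    "databases": sorted(searches),
    "records_retrieved": sum(len(r) for r in searches.values()),
    "unique_records": len(sampling_frame),
}
```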

Q: Should I publish a protocol for my methodological study?

A: A protocol is a description of intended research methods. Currently, only protocols for clinical trials require registration [ 35 ]. Protocols for systematic reviews are encouraged but no formal recommendation exists. The scientific community welcomes the publication of protocols because they help to protect against selective outcome reporting and the use of post hoc methodologies to embellish results, and help to avoid duplication of efforts [ 36 ]. While the latter two risks exist in methodological research, the negative consequences may be substantially less than for clinical outcomes. In a sample of 31 methodological studies, 7 (22.6%) referenced a published protocol [ 9 ]. In the Cochrane Library, there are 15 protocols for methodological reviews (as of 21 July 2020). This suggests that publishing protocols for methodological studies is not uncommon.

Authors can consider publishing their study protocol in a scholarly journal as a manuscript. Advantages of such publication include obtaining peer-review feedback about the planned study, and easy retrieval by searching databases such as PubMed. The disadvantages of trying to publish protocols include delays associated with manuscript handling and peer review, as well as costs, as few journals publish study protocols, and those journals mostly charge article-processing fees [ 37 ]. Authors who would like to make their protocol publicly available without publishing it in a scholarly journal could deposit it in a publicly available repository, such as the Open Science Framework ( https://osf.io/ ).

Q: How to appraise the quality of a methodological study?

A: To date, there is no published tool for appraising the risk of bias in a methodological study, but in principle, a methodological study could be considered as a type of observational study. Therefore, during conduct or appraisal, care should be taken to avoid the biases common in observational studies [ 38 ]. The main concerns are selection bias, comparability of groups, and ascertainment of exposure or outcome. To generate a representative sample, a comprehensive reproducible search may be necessary to build a sampling frame. Additionally, random sampling may be necessary to ensure that all the included research reports have the same probability of being selected, and the screening and selection processes should be transparent and reproducible. To ensure that the groups compared are similar in all characteristics, matching, random sampling or stratified sampling can be used. Statistical adjustments for between-group differences can also be applied at the analysis stage. Finally, duplicate data extraction can reduce errors in assessment of exposures or outcomes.

Q: Should I justify a sample size?

A: In all instances where one is not using the target population (i.e. the group to which inferences from the research report are directed) [ 39 ], a sample size justification is good practice. The sample size justification may take the form of a description of what is expected to be achieved with the number of articles selected, or a formal sample size estimation that outlines the number of articles required to answer the research question with a certain precision and power. Sample size justifications in methodological studies are reasonable in the following instances:

- Comparing two groups

- Determining a proportion, mean or another quantifier

- Determining factors associated with an outcome using regression-based analyses

For example, El Dib et al. computed a sample size requirement for a methodological study of diagnostic strategies in randomized trials, based on a confidence interval approach [ 40 ].
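As a rough illustration of a confidence interval approach to sample size, the sketch below estimates how many articles are needed to determine a proportion within a chosen margin of error. It uses the standard normal-approximation formula with an optional finite population correction; this is an assumption made for the example, not necessarily the calculation used by El Dib et al., and the function name and inputs are hypothetical.

```python
import math
from statistics import NormalDist

def sample_size_for_proportion(expected_p, margin, confidence=0.95, population=None):
    """Number of articles needed to estimate a proportion.

    expected_p: anticipated proportion (0.5 is the most conservative choice)
    margin: desired half-width of the confidence interval
    confidence: confidence level of the interval
    population: size of the sampling frame, if known (finite population correction)
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n = z ** 2 * expected_p * (1 - expected_p) / margin ** 2
    if population is not None:
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)

# Estimating a reporting proportion to within +/- 5 percentage points.
n_unlimited = sample_size_for_proportion(0.5, 0.05)                # 385
n_from_1000 = sample_size_for_proportion(0.5, 0.05, population=1000)  # 278
```

When the sampling frame is small, the finite population correction can reduce the required number of articles noticeably, as the second call shows.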

Q: What should I call my study?

A: Other terms that have been used to describe or label methodological studies include “methodological review”, “methodological survey”, “meta-epidemiological study”, “systematic review”, “systematic survey”, “meta-research”, “research-on-research” and many others. We recommend that the study nomenclature be clear, unambiguous, informative and allow for appropriate indexing. Methodological study nomenclature that should be avoided includes “systematic review”, as this will likely be confused with a systematic review of a clinical question. “Systematic survey” may also lead to confusion about whether the survey was systematic (i.e. using a preplanned methodology) or a survey using “systematic” sampling (i.e. a sampling approach using specific intervals to determine who is selected) [ 32 ]. Any of the above meanings of the word “systematic” may be true for methodological studies and could be potentially misleading. “Meta-epidemiological study” is ideal for indexing, but not very informative as it describes an entire field. The term “review” may point towards an appraisal or “review” of the design, conduct, analysis or reporting (or methodological components) of the targeted research reports, yet it has also been used to describe narrative reviews [ 41 , 42 ]. The term “survey” is also in line with the approaches used in many methodological studies [ 9 ], and would be indicative of the sampling procedures of this study design. However, in the absence of guidelines on nomenclature, the term “methodological study” is broad enough to capture most of the scenarios of such studies.

Q: Should I account for clustering in my methodological study?

A: Data from methodological studies are often clustered. For example, articles coming from a specific source may have different reporting standards (e.g. the Cochrane Library). Articles within the same journal may be similar due to editorial practices and policies, reporting requirements and endorsement of guidelines. There is emerging evidence that these are real concerns that should be accounted for in analyses [ 43 ]. Some cluster variables are described in the section “What variables are relevant to methodological studies?”

A variety of modelling approaches can be used to account for correlated data, including the use of marginal, fixed or mixed effects regression models with appropriate computation of standard errors [ 44 ]. For example, Kosa et al. used generalized estimating equations to account for correlation of articles within journals [ 15 ]. Not accounting for clustering could lead to incorrect p-values, unduly narrow confidence intervals, and biased estimates [ 45 ].
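To see why ignoring clustering gives unduly narrow confidence intervals, a back-of-the-envelope design effect calculation can help. Note that this is a simpler approximation than the regression models named above (it is not a GEE or mixed model), it assumes roughly equal cluster sizes, and the intracluster correlation of 0.05 is an invented value for illustration.

```python
def design_effect(mean_cluster_size, icc):
    """Variance inflation from clustering: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc

def effective_sample_size(n_articles, mean_cluster_size, icc):
    """Number of independent articles the clustered sample is 'worth'."""
    return n_articles / design_effect(mean_cluster_size, icc)

# Hypothetical study: 300 articles drawn from 30 journals (10 per journal),
# with an assumed intracluster correlation of 0.05 for the outcome.
deff = design_effect(mean_cluster_size=10, icc=0.05)
n_eff = effective_sample_size(n_articles=300, mean_cluster_size=10, icc=0.05)
# Standard errors computed as if the 300 articles were independent would be
# too small by a factor of about sqrt(deff).
```

Under these assumptions the 300 articles carry roughly the information of 207 independent ones, which is why a model that accounts for within-journal correlation is preferable for the actual analysis.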

Q: Should I extract data in duplicate?

A: Yes. Duplicate data extraction takes more time but results in fewer errors [ 19 ]. Data extraction errors in turn affect the effect estimate [ 46 ], and therefore should be mitigated. Duplicate data extraction should be considered in the absence of other approaches to minimize extraction errors. Much like systematic reviews, this area will likely see rapid advances with machine learning and natural language processing technologies to support researchers with screening and data extraction [ 47 , 48 ]. Even so, experience plays an important role in the quality of extracted data, and inexperienced extractors should be paired with experienced extractors [ 46 , 49 ].
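When data are extracted in duplicate, the two sets of extractions need to be compared so that discrepancies can be resolved. The sketch below flags disagreements and summarises agreement with Cohen's kappa. Kappa is one common agreement statistic and is an assumption of this example rather than something the tutorial prescribes; the extracted values are made up.

```python
from collections import Counter

def cohen_kappa(ratings_a, ratings_b):
    """Chance-corrected agreement between two extractors on a categorical item."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both extractors must rate the same non-empty set of reports")
    n = len(ratings_a)
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    count_a, count_b = Counter(ratings_a), Counter(ratings_b)
    # Agreement expected by chance, from each extractor's marginal frequencies.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n ** 2
    if expected == 1:
        return 1.0  # both extractors used a single identical category throughout
    return (observed - expected) / (1 - expected)

# Hypothetical item: "Was blinding reported?" extracted from 10 reports.
extractor_1 = ["yes", "yes", "no", "no", "yes", "no", "yes", "yes", "no", "no"]
extractor_2 = ["yes", "yes", "no", "no", "yes", "no", "yes", "no", "no", "yes"]

kappa = cohen_kappa(extractor_1, extractor_2)
# Reports (by position) where the extractors disagree and need to reconcile.
discrepancies = [i for i, (a, b) in enumerate(zip(extractor_1, extractor_2)) if a != b]
```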

Q: Should I assess the risk of bias of research reports included in my methodological study?

A: Risk of bias is most useful in determining the certainty that can be placed in the effect measure from a study. In methodological studies, risk of bias may not serve the purpose of determining the trustworthiness of results, as effect measures are often not the primary goal of methodological studies. Determining risk of bias in methodological studies is likely a practice borrowed from systematic review methodology, but its intrinsic value is not obvious in methodological studies. When it is part of the research question, investigators often focus on one aspect of risk of bias. For example, Speich investigated how blinding was reported in surgical trials [ 50 ], and Abraha et al. investigated the application of intention-to-treat analyses in systematic reviews and trials [ 51 ].

Q: What variables are relevant to methodological studies?

A: There is empirical evidence that certain variables may inform the findings in a methodological study. We outline some of these and provide a brief overview below:

Country: Countries and regions differ in their research cultures, and the resources available to conduct research. Therefore, it is reasonable to believe that there may be differences in methodological features across countries. Methodological studies have reported loco-regional differences in reporting quality [ 52 , 53 ]. This may also be related to challenges non-English speakers face in publishing papers in English.

Authors’ expertise: The inclusion of authors with expertise in research methodology, biostatistics, and scientific writing is likely to influence the end-product. Oltean et al. found that among randomized trials in orthopaedic surgery, the use of analyses that accounted for clustering was more likely when specialists (e.g. statistician, epidemiologist or clinical trials methodologist) were included on the study team [ 54 ]. Fleming et al. found that including methodologists in the review team was associated with appropriate use of reporting guidelines [ 55 ].

Source of funding and conflicts of interest: Some studies have found that funded studies report better [ 56 , 57 ], while others do not [ 53 , 58 ]. The presence of funding would indicate the availability of resources deployed to ensure optimal design, conduct, analysis and reporting. However, the source of funding may introduce conflicts of interest and warrant assessment. For example, Kaiser et al. investigated the effect of industry funding on obesity or nutrition randomized trials and found that reporting quality was similar [ 59 ]. Thomas et al. looked at reporting quality of long-term weight loss trials and found that industry funded studies were better [ 60 ]. Kan et al. examined the association between industry funding and “positive trials” (trials reporting a significant intervention effect) and found that industry funding was highly predictive of a positive trial [ 61 ]. This finding is similar to that of a recent Cochrane Methodology Review by Hansen et al. [ 62 ].

Journal characteristics: Certain journals’ characteristics may influence the study design, analysis or reporting. Characteristics such as journal endorsement of guidelines [ 63 , 64 ], and Journal Impact Factor (JIF) have been shown to be associated with reporting [ 63 , 65 , 66 , 67 ].

Study size (sample size/number of sites): Some studies have shown that reporting is better in larger studies [ 53 , 56 , 58 ].

Year of publication: It is reasonable to assume that design, conduct, analysis and reporting of research will change over time. Many studies have demonstrated improvements in reporting over time or after the publication of reporting guidelines [ 68 , 69 ].

Type of intervention: In a methodological study of reporting quality of weight loss intervention studies, Thabane et al. found that trials of pharmacologic interventions were reported better than trials of non-pharmacologic interventions [ 70 ].

Interactions between variables: Complex interactions between the previously listed variables are possible. High income countries with more resources may be more likely to conduct larger studies and incorporate a variety of experts. Authors in certain countries may prefer certain journals, and journal endorsement of guidelines and editorial policies may change over time.

Q: Should I focus only on high impact journals?

A: Investigators may choose to investigate only high impact journals because they are more likely to influence practice and policy, or because they assume that methodological standards would be higher. However, restricting the sample by JIF may severely limit the scope of articles included and may skew the sample towards articles with positive findings. The generalizability and applicability of findings from a handful of journals must be examined carefully, especially since the JIF varies over time. Even among journals that are all “high impact”, variations exist in methodological standards.

Q: Can I conduct a methodological study of qualitative research?

A: Yes. Even though a lot of methodological research has been conducted in the quantitative research field, methodological studies of qualitative studies are feasible. Certain databases that catalogue qualitative research, including the Cumulative Index to Nursing & Allied Health Literature (CINAHL), have defined subject headings that are specific to methodological research (e.g. “research methodology”). Alternatively, one could also conduct a qualitative methodological review; that is, use qualitative approaches to synthesize methodological issues in qualitative studies.

Q: What reporting guidelines should I use for my methodological study?

A: There is no guideline that covers the entire scope of methodological studies. One adaptation of the PRISMA guidelines has been published, which works well for studies that aim to use the entire target population of research reports [ 71 ]. However, it is not widely used (40 citations in 2 years as of 09 December 2019), and methodological studies that are designed as cross-sectional or before-after studies require a more fit-for-purpose guideline. A more encompassing reporting guideline for a broad range of methodological studies is currently under development [ 72 ]. However, in the absence of formal guidance, the requirements for scientific reporting should be respected, and authors of methodological studies should focus on transparency and reproducibility.

Q: What are the potential threats to validity and how can I avoid them?

A: Methodological studies may be compromised by a lack of internal or external validity. The main threats to internal validity in methodological studies are selection and confounding bias. Investigators must ensure that the methods used to select articles do not make them differ systematically from the set of articles to which they would like to make inferences. For example, attempting to make extrapolations to all journals after analyzing high-impact journals would be misleading.

Many factors (confounders) may distort the association between the exposure and outcome if the included research reports differ with respect to these factors [ 73 ]. For example, when examining the association between source of funding and completeness of reporting, it may be necessary to account for journals that endorse the guidelines. Confounding bias can be addressed by restriction, matching and statistical adjustment [ 73 ]. Restriction appears to be the method of choice for many investigators who choose to include only high impact journals or articles in a specific field. For example, Knol et al. examined the reporting of p-values in baseline tables of high impact journals [ 26 ]. Matching is also sometimes used. In the methodological study of non-randomized interventional studies of elective ventral hernia repair, Parker et al. matched prospective studies with retrospective studies and compared reporting standards [ 74 ]. Some other methodological studies use statistical adjustments. For example, Zhang et al. used regression techniques to determine the factors associated with missing participant data in trials [ 16 ].
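
To make the idea of statistical adjustment concrete, the following is a minimal sketch of a stratified (Mantel-Haenszel) odds ratio for the funding and reporting example above, with journal endorsement of guidelines as the confounder. It is not the method used by any of the cited studies (Zhang et al. used regression techniques), and all counts are invented for illustration. The function names and the record layout are assumptions.

```python
from collections import defaultdict


def crude_odds_ratio(records):
    """Unadjusted odds ratio of complete reporting, funded vs unfunded.

    records: iterable of (stratum, funded, complete) tuples, where
    funded and complete are booleans. The stratum is ignored here.
    """
    a = b = c = d = 0
    for _stratum, funded, complete in records:
        if funded and complete:
            a += 1
        elif funded:
            b += 1
        elif complete:
            c += 1
        else:
            d += 1
    if b * c == 0:
        raise ValueError("odds ratio undefined: a zero cell in the denominator")
    return (a * d) / (b * c)


def mantel_haenszel_odds_ratio(records):
    """Odds ratio pooled across strata of the confounder (Mantel-Haenszel)."""
    tables = defaultdict(lambda: [0, 0, 0, 0])  # a, b, c, d per stratum
    for stratum, funded, complete in records:
        if funded:
            index = 0 if complete else 1
        else:
            index = 2 if complete else 3
        tables[stratum][index] += 1
    numerator = denominator = 0.0
    for a, b, c, d in tables.values():
        n = a + b + c + d
        numerator += a * d / n
        denominator += b * c / n
    if denominator == 0:
        raise ValueError("pooled odds ratio undefined: denominator is zero")
    return numerator / denominator


def expand(stratum, funded, complete, count):
    """Helper that turns a cell count into individual records."""
    return [(stratum, funded, complete)] * count


# Invented counts: funded reports cluster in journals that endorse guidelines.
records = (
    expand("endorsing", True, True, 8) + expand("endorsing", True, False, 2)
    + expand("endorsing", False, True, 4) + expand("endorsing", False, False, 1)
    + expand("non-endorsing", True, True, 2) + expand("non-endorsing", True, False, 8)
    + expand("non-endorsing", False, True, 3) + expand("non-endorsing", False, False, 12)
)

print("crude OR:", round(crude_odds_ratio(records), 2))
print("adjusted OR:", round(mantel_haenszel_odds_ratio(records), 2))
```

In these invented data the crude odds ratio suggests that funded studies report better, while the odds ratio within each stratum, and therefore the pooled one, is 1. The apparent association is produced entirely by journal endorsement, which is the situation the paragraph above warns about.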

With regard to external validity, researchers interested in conducting methodological studies must consider how generalizable or applicable their findings are. This should tie in closely with the research question and should be explicit. For example, findings from methodological studies on trials published in high impact cardiology journals cannot be assumed to be applicable to trials in other fields. However, investigators must ensure that their sample truly represents the target sample either by a) conducting a comprehensive and exhaustive search, or b) using an appropriate and justified, randomly selected sample of research reports.
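
Option b) can be made reproducible by drawing the random sample with a recorded seed. The sketch below assumes that the search has already produced a list of report identifiers; the function name, the identifiers and the seed are hypothetical, and the sample size would still need to be justified separately.

```python
import random


def sample_reports(report_ids, sample_size, seed):
    """Draw a reproducible simple random sample of research reports.

    Duplicates are removed and identifiers are sorted first, so the same
    seed yields the same sample regardless of the order of the search
    results.
    """
    unique_ids = sorted(set(report_ids))
    if sample_size > len(unique_ids):
        raise ValueError("sample size exceeds the number of unique reports")
    rng = random.Random(seed)  # local generator; global state is untouched
    return sorted(rng.sample(unique_ids, sample_size))


# Hypothetical identifiers standing in for the records retrieved by a search.
search_results = [f"report-{i:04d}" for i in range(1, 501)]
selected = sample_reports(search_results, sample_size=50, seed=2019)
print(len(selected), "reports selected")
```

Reporting the seed and the sampling frame alongside the results would allow readers to regenerate the same sample.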

Even applicability to high impact journals may vary based on the investigators’ definition, and over time. For example, for high impact journals in the field of general medicine, Bouwmeester et al. included the Annals of Internal Medicine (AIM), BMJ, the Journal of the American Medical Association (JAMA), Lancet, the New England Journal of Medicine (NEJM), and PLoS Medicine (n = 6) [ 75 ]. In contrast, the high impact journals selected in the methodological study by Schiller et al. were BMJ, JAMA, Lancet, and NEJM (n = 4) [ 76 ]. Another methodological study by Kosa et al. included AIM, BMJ, JAMA, Lancet and NEJM (n = 5). In the methodological study by Thabut et al., journals with a JIF greater than 5 were considered to be high impact. Riado Minguez et al. used first quartile journals in the Journal Citation Reports (JCR) for a specific year to determine “high impact” [ 77 ]. Ultimately, the definition of high impact will be based on the number of journals the investigators are willing to include, the year of impact and the JIF cut-off [ 78 ]. We acknowledge that the term “generalizability” may apply differently for methodological studies, especially when in many instances it is possible to include the entire target population in the sample studied.
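
The effect of the definition on the resulting sample can be illustrated with a short sketch. The journal names and JIF values below are invented, and the quartile rule (top 25% of journals ranked by JIF, rounded up) is a simplifying assumption rather than the exact JCR procedure.

```python
import math


def by_fixed_list(jif_by_journal, names):
    """High impact defined as a pre-specified list of journals."""
    return sorted(name for name in names if name in jif_by_journal)


def by_cutoff(jif_by_journal, cutoff):
    """High impact defined as a JIF strictly greater than a cut-off."""
    return sorted(j for j, jif in jif_by_journal.items() if jif > cutoff)


def by_top_quartile(jif_by_journal):
    """High impact defined as the top quarter of journals ranked by JIF."""
    ranked = sorted(jif_by_journal, key=jif_by_journal.get, reverse=True)
    keep = math.ceil(len(ranked) / 4)
    return sorted(ranked[:keep])


# Invented journals and JIF values for a single hypothetical year.
jif_by_journal = {
    "Journal A": 60.0, "Journal B": 45.0, "Journal C": 12.0, "Journal D": 6.5,
    "Journal E": 5.2, "Journal F": 4.8, "Journal G": 2.1, "Journal H": 1.0,
}

print(by_fixed_list(jif_by_journal, ["Journal A", "Journal B", "Journal C", "Journal D"]))
print(by_cutoff(jif_by_journal, cutoff=5))
print(by_top_quartile(jif_by_journal))
```

The three definitions select four, five and two journals respectively from the same list, so the definition and the year of the JIF values should be stated explicitly.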

Finally, methodological studies are not exempt from information bias which may stem from discrepancies in the included research reports [ 79 ], errors in data extraction, or inappropriate interpretation of the information extracted. Likewise, publication bias may also be a concern in methodological studies, but such concepts have not yet been explored.

A proposed framework

In order to inform discussions about methodological studies and the development of guidance for what should be reported, we have outlined some key features of methodological studies that can be used to classify them. For each of the categories outlined below, we provide an example. In our experience, the choice of approach to completing a methodological study can be informed by asking the following four questions:

What is the aim?

Methodological studies that investigate bias

A methodological study may be focused on exploring sources of bias in primary or secondary studies (meta-bias), or how bias is analyzed. We have taken care to distinguish bias (i.e. systematic deviations from the truth irrespective of the source) from reporting quality or completeness (i.e. not adhering to a specific reporting guideline or norm). An example of where this distinction would be important is in the case of a randomized trial with no blinding. This study (depending on the nature of the intervention) would be at risk of performance bias. However, if the authors report that their study was not blinded, they would have reported adequately. In fact, some methodological studies attempt to capture both “quality of conduct” and “quality of reporting”, such as Richie et al., who reported on the risk of bias in randomized trials of pharmacy practice interventions [ 80 ]. Babic et al. investigated how risk of bias was used to inform sensitivity analyses in Cochrane reviews [ 81 ]. Further, biases related to choice of outcomes can also be explored. For example, Tan et al. investigated differences in treatment effect size based on the outcome reported [ 82 ].

Methodological studies that investigate quality (or completeness) of reporting

Methodological studies may report quality of reporting against a reporting checklist (i.e. adherence to guidelines) or against expected norms. For example, Croituro et al. report on the quality of reporting in systematic reviews published in dermatology journals based on their adherence to the PRISMA statement [ 83 ], and Khan et al. described the quality of reporting of harms in randomized controlled trials published in high impact cardiovascular journals based on the CONSORT extension for harms [ 84 ]. Other methodological studies investigate reporting of certain features of interest that may not be part of formally published checklists or guidelines. For example, Mbuagbaw et al. described how often the implications for research are elaborated using the Evidence, Participants, Intervention, Comparison, Outcome, Timeframe (EPICOT) format [ 30 ].
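
Adherence to a checklist is often summarized as the number and percentage of applicable items that a report addresses. The sketch below shows one way to compute this, assuming that every item carries equal weight and that items judged not applicable are excluded from the denominator. The item names are hypothetical placeholders and are not the wording of PRISMA or CONSORT.

```python
def checklist_adherence(items):
    """Summarize adherence of one report to a reporting checklist.

    items maps an item name to True (reported), False (not reported)
    or None (not applicable to this report).
    Returns (items reported, applicable items, percent adherence).
    """
    applicable = [value for value in items.values() if value is not None]
    if not applicable:
        raise ValueError("no applicable checklist items")
    reported = sum(1 for value in applicable if value)
    return reported, len(applicable), 100.0 * reported / len(applicable)


# Hypothetical extraction for a single systematic review.
extracted = {
    "title identifies report as a review": True,
    "protocol registration": False,
    "full search strategy": True,
    "meta-analysis methods": None,  # no meta-analysis was performed
    "funding source": True,
}

reported, applicable, percent = checklist_adherence(extracted)
print(f"{reported}/{applicable} items reported ({percent:.0f}%)")
```

Whether a summed score is appropriate at all is a design choice; some investigators prefer to report adherence item by item.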

Methodological studies that investigate the consistency of reporting

Sometimes investigators may be interested in how consistent reports of the same research are, as it is expected that there should be consistency between: conference abstracts and published manuscripts; manuscript abstracts and manuscript main text; and trial registration and published manuscript. For example, Rosmarakis et al. investigated consistency between conference abstracts and full text manuscripts [ 85 ].

Methodological studies that investigate factors associated with reporting

In addition to identifying issues with reporting in primary and secondary studies, authors of methodological studies may be interested in determining the factors that are associated with certain reporting practices. Many methodological studies incorporate this, albeit as a secondary outcome. For example, Farrokhyar et al. investigated the factors associated with reporting quality in randomized trials of coronary artery bypass grafting surgery [ 53 ].

Methodological studies that investigate methods

Methodological studies may also be used to describe methods or compare methods, and the factors associated with methods. Muller et al. described the methods used for systematic reviews and meta-analyses of observational studies [ 86 ].

Methodological studies that summarize other methodological studies

Some methodological studies synthesize results from other methodological studies. For example, Li et al. conducted a scoping review of methodological reviews that investigated consistency between full text and abstracts in primary biomedical research [ 87 ].

Methodological studies that investigate nomenclature and terminology

Some methodological studies may investigate the use of names and terms in health research. For example, Martinic et al. investigated the definitions of systematic reviews used in overviews of systematic reviews (OSRs), meta-epidemiological studies and epidemiology textbooks [ 88 ].

Other types of methodological studies

In addition to the previously mentioned experimental methodological studies, there may exist other types of methodological studies not captured here.

What is the design?

Methodological studies that are descriptive

Most methodological studies are purely descriptive and report their findings as counts (percent) and means (standard deviation) or medians (interquartile range). For example, Mbuagbaw et al. described the reporting of research recommendations in Cochrane HIV systematic reviews [ 30 ]. Gohari et al. described the quality of reporting of randomized trials in diabetes in Iran [ 12 ].
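
The summary statistics named above can be produced with the Python standard library alone. This is a minimal sketch; the scores and flags are invented, and the choice of the "inclusive" quantile method is an assumption, since statistical packages differ in how they compute quartiles.

```python
import statistics


def count_percent(flags):
    """Return the count and percentage of reports with a feature."""
    count = sum(1 for flag in flags if flag)
    return count, 100.0 * count / len(flags)


def describe_scores(scores):
    """Return mean (SD) and median (IQR) for a list of numeric scores."""
    q1, _median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return {
        "n": len(scores),
        "mean": statistics.mean(scores),
        "sd": statistics.stdev(scores),  # sample standard deviation
        "median": statistics.median(scores),
        "iqr": (q1, q3),
    }


# Invented data: reporting scores for seven trials, and whether each of
# four reviews stated implications for research.
scores = [10, 12, 14, 16, 18, 20, 22]
stated_implications = [True, False, True, True]

summary = describe_scores(scores)
count, percent = count_percent(stated_implications)
print(f"mean (SD): {summary['mean']} ({summary['sd']:.2f})")
print(f"median (IQR): {summary['median']} ({summary['iqr'][0]} to {summary['iqr'][1]})")
print(f"stated implications: {count} ({percent:.0f}%)")
```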

Methodological studies that are analytical

Some methodological studies are analytical, in the sense that “analytical studies identify and quantify associations, test hypotheses, identify causes and determine whether an association exists between variables, such as between an exposure and a disease.” [ 89 ] In the case of methodological studies, all these investigations are possible. For example, Kosa et al. investigated the association between agreement in primary outcome from trial registry to published manuscript and study covariates. They found that larger and more recent studies were more likely to have agreement [ 15 ]. Tricco et al. compared the conclusion statements from Cochrane and non-Cochrane systematic reviews with a meta-analysis of the primary outcome and found that non-Cochrane reviews were more likely to report positive findings. These results are a test of the null hypothesis that the proportions of Cochrane and non-Cochrane reviews that report positive results are equal [ 90 ].
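
A null hypothesis of equal proportions, such as the one described above, can be tested in several ways. The sketch below uses a two-sided two-proportion z-test with a pooled standard error, which is one common large-sample option and is not necessarily the test used by Tricco et al. The counts are invented and do not come from that study.

```python
import math


def two_proportion_z_test(events_1, total_1, events_2, total_2):
    """Two-sided z-test of the null hypothesis that two proportions are equal.

    Uses the pooled proportion for the standard error and the normal
    approximation, so it assumes reasonably large groups.
    Returns (z statistic, two-sided p-value).
    """
    p1 = events_1 / total_1
    p2 = events_2 / total_2
    pooled = (events_1 + events_2) / (total_1 + total_2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / total_1 + 1 / total_2))
    if standard_error == 0:
        raise ValueError("standard error is zero; the test is undefined")
    z = (p1 - p2) / standard_error
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented counts: reviews with positive conclusions out of all reviews
# in each of two hypothetical groups.
z, p_value = two_proportion_z_test(60, 100, 40, 100)
print(f"z = {z:.2f}, two-sided p = {p_value:.4f}")
```

With small samples or sparse cells, an exact test would be a more defensible choice than this normal approximation.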

What is the sampling strategy?