Docker by Example

If you’re the kind of developer who learns best by doing, then this guide is for you. Rather than learning Docker by focusing on CLI commands, this guide focuses on learning how to author Dockerfiles to run programs in containers.

Starting with simple examples, you’ll become familiar with important Dockerfile instructions and learn relevant CLI commands as you go.

The pace is quick and explanations are succinct, but this guide will get you up and running with a solid foundation in professional Docker best practices.

See Getting Started in the next section to prepare for running the examples on your own.

How-To Geek

Docker for beginners: everything you need to know.


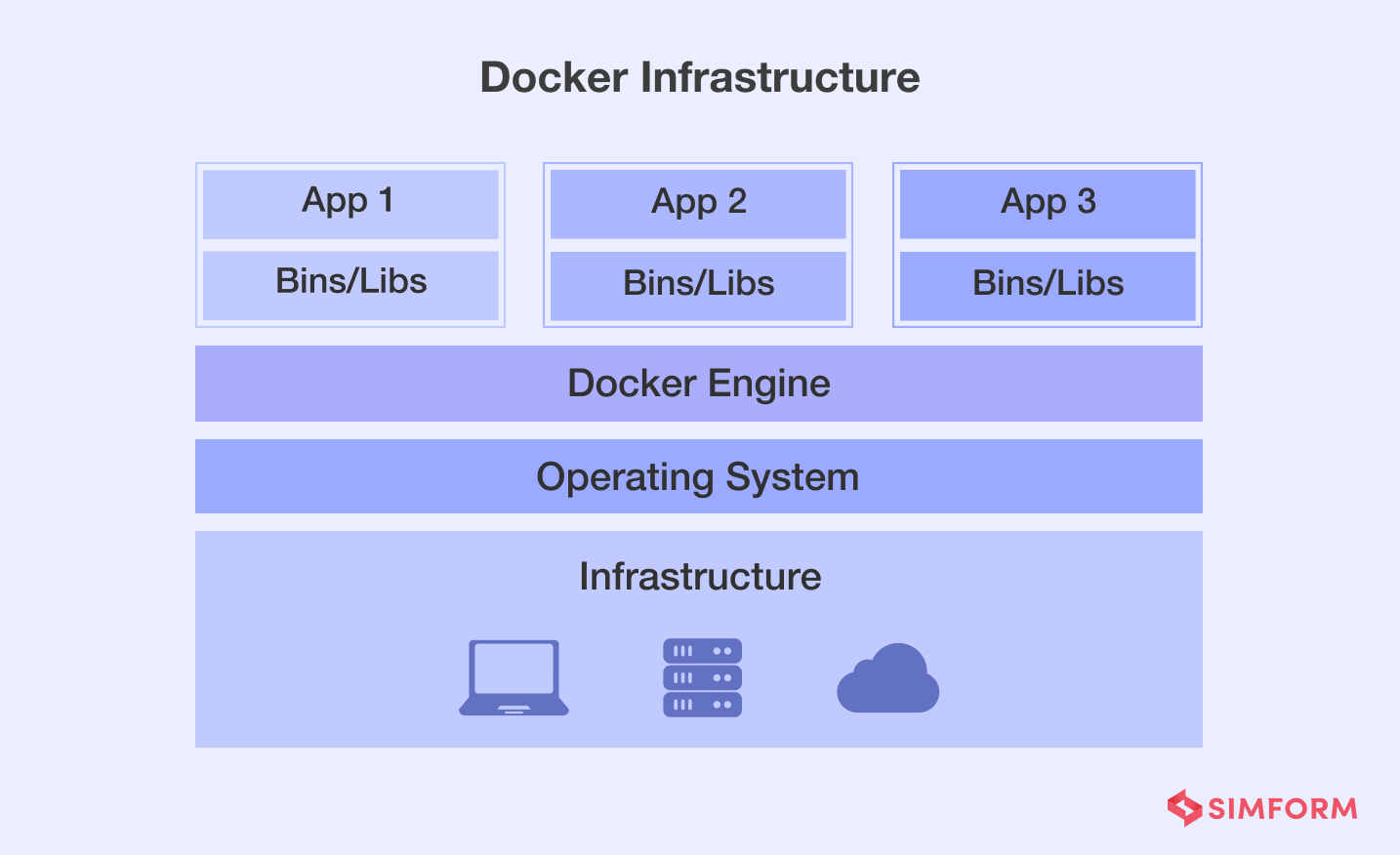

Docker creates packaged applications called containers. Each container provides an isolated environment similar to a virtual machine (VM). Unlike VMs, Docker containers don't run a full operating system. They share your host's kernel and virtualize at a software level.

Docker has become a standard tool for software developers and system administrators. It's a neat way to quickly launch applications without impacting the rest of your system. You can spin up a new service with a single docker run command.

Containers encapsulate everything needed to run an application, from OS package dependencies to your own source code. You define a container's creation steps as instructions in a Dockerfile. Docker uses the Dockerfile to construct an image.

Images define the software available in containers. This is loosely equivalent to starting a VM with an operating system ISO. Once you've created and shared an image, any Docker user will be able to launch your app with docker run.

Containers utilize operating system kernel features to provide partially virtualized environments. It's possible to create containers from scratch with commands like chroot. This starts a process with a specified root directory instead of the system root. But using kernel features directly is fiddly, insecure, and error-prone.

Docker is a complete solution for the production, distribution, and use of containers. Modern Docker releases are made up of several independent components. First, there's the Docker CLI, which is what you interact with in your terminal. The CLI sends commands to a Docker daemon. This can run locally or on a remote host. The daemon is responsible for managing containers and the images they're created from.

The final component is called the container runtime. The runtime invokes kernel features to actually launch containers. Docker is compatible with runtimes that adhere to the OCI specification. This open standard allows for interoperability between different containerization tools.

You don't need to worry too much about Docker's inner workings when you're first getting started. Installing Docker on your system will give you everything you need to build and run containers.

Containers have become so popular because they solve many common challenges in software development. The ability to containerize once and run everywhere reduces the gap between your development environment and your production servers.

Using containers gives you confidence that every environment is identical. If you have a new team member, they only need to run docker run to set up their own development instance. When you launch your service, you can use your Docker image to deploy to production. The live environment will match your local instance, avoiding "it works on my machine" scenarios.

Docker is more convenient than a full-blown virtual machine. VMs are general-purpose tools designed to support every possible workload. By contrast, containers are lightweight, self-sufficient, and better suited to throwaway use cases. As Docker shares the host's kernel, containers have a negligible impact on system performance. Container launch time is almost instantaneous, as you're only starting processes, not an entire operating system.

Docker is available on all popular Linux distributions. It also runs on Windows and macOS. Follow the Docker setup instructions for your platform to get it up and running.

You can check that your installation is working by starting a simple container:

docker run hello-world

This will start a new container with the basic hello-world image. The image emits some output explaining how to use Docker. The container then exits, dropping you back to your terminal.

Once you've run hello-world, you're ready to create your own Docker images. A Dockerfile describes how to run your service by installing required software and copying in files. Here's a simple example using the Apache web server:

FROM httpd:latest
RUN echo "LoadModule headers_module modules/mod_headers.so" >> /usr/local/apache2/conf/httpd.conf
COPY .htaccess /usr/local/apache2/htdocs/.htaccess
COPY index.html /usr/local/apache2/htdocs/index.html
COPY css/ /usr/local/apache2/htdocs/css/

The FROM line defines the base image. In this case, we're starting from the official Apache image. Docker applies the remaining instructions in your Dockerfile on top of the base image.

The RUN instruction runs a command inside the image while it's being built. This can be any command available in the base image's environment. We're enabling Apache's headers module, which the .htaccess file could use to set custom HTTP response headers. Bear in mind that Apache only reads .htaccess files where AllowOverride permits it, and the image's default configuration sets AllowOverride None for its document root.

The final lines copy the .htaccess, HTML, and CSS files from your working directory into /usr/local/apache2/htdocs/, the directory the official httpd image serves pages from. Your image now contains everything you need to run your website.

Now, you can build the image:

docker build -t my-website:v1 .

Docker will use your Dockerfile to construct the image. You'll see output in your terminal as Docker runs each of your instructions.

The -t in the command tags your image with a given name (my-website:v1). This makes it easier to refer to in the future. Tags have two components, separated by a colon. The first part sets the image name, while the second usually denotes its version. If you omit the colon, Docker will default to using latest as the tag version.
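To make the name:tag rule concrete, here is a small Python sketch that splits a reference the way the paragraph above describes. It is a simplified model rather than Docker's own parser: the function name split_image_ref is hypothetical, and digest references such as name@sha256:... are deliberately not handled.

```python
def split_image_ref(ref: str) -> tuple[str, str]:
    """Split an image reference such as "my-website:v1" into (name, tag).

    Simplified model: digest references ("name@sha256:...") are not handled.
    """
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    # A colon only introduces a tag if it comes after the last slash;
    # otherwise it belongs to a registry host:port such as "localhost:5000".
    if last_colon > last_slash:
        return ref[:last_colon], ref[last_colon + 1:]
    # No tag was given, so fall back to Docker's default tag.
    return ref, "latest"


for ref in ["my-website:v1", "my-website", "localhost:5000/my-website"]:
    print(ref, "->", split_image_ref(ref))
```

The check against the last slash matters because registry addresses can contain a port, so not every colon marks a tag.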

The . at the end of the command tells Docker to use the Dockerfile in your local working directory. This also sets the build context, allowing you to use files and folders in your working directory with COPY instructions in your Dockerfile.

Once you've created your image, you can start a container using docker run:

docker run -d -p 8080:80 my-website:v1

We're using a few extra flags with docker run here. The -d flag makes the Docker CLI detach from the container, allowing it to run in the background. A port mapping is defined with -p , so port 8080 on your host maps to port 80 in the container. You should see your web page if you visit localhost:8080 in your browser.
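The -p value can carry more than the host:container pair shown above: an optional host IP in front and an optional protocol after a slash. The Python sketch below is a hypothetical parser for that [host_ip:][host_port:]container_port[/protocol] shape, written only to show how the pieces fit together; port ranges and IPv6 host addresses are left out.

```python
def parse_port_mapping(spec: str) -> dict:
    """Parse a -p value of the form [host_ip:][host_port:]container_port[/protocol].

    Simplified sketch: port ranges and IPv6 host addresses are not handled.
    """
    protocol = "tcp"  # Docker publishes TCP ports unless a protocol is given
    if "/" in spec:
        spec, protocol = spec.split("/", 1)
    parts = spec.split(":")
    if len(parts) == 3:
        host_ip, host_port, container_port = parts
    elif len(parts) == 2:
        host_ip = ""  # no IP given: bind on all host interfaces
        host_port, container_port = parts
    elif len(parts) == 1:
        host_ip, host_port, container_port = "", "", parts[0]
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    return {
        "host_ip": host_ip or None,  # None stands for "all interfaces"
        # An empty host port means Docker picks a free port on the host.
        "host_port": int(host_port) if host_port else None,
        "container_port": int(container_port),
        "protocol": protocol,
    }


print(parse_port_mapping("8080:80"))
print(parse_port_mapping("127.0.0.1:8080:80/udp"))
```

For the docker run command above, 8080 is the port you visit in your browser and 80 is the port Apache listens on inside the container.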

Docker images are formed from layers. Instructions that change the filesystem, such as RUN and COPY, each add a new layer on top of the previous one. You can use multi-stage builds to reference multiple base images, discarding intermediary layers from earlier stages.
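Because each layer sits on top of its parent, changing one instruction affects every layer after it. The Python below is a toy model of that idea, not Docker's real layer format: each hypothetical layer ID is a hash of its parent's ID plus the instruction text. Real Docker builds also hash the contents of files copied by COPY and ADD, which this sketch ignores.

```python
import hashlib


def layer_ids(instructions: list[str]) -> list[str]:
    """Toy model: derive an ID for each layer from its parent and its instruction."""
    parent = ""
    ids = []
    for instruction in instructions:
        digest = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        ids.append(digest[:12])  # short form, similar in length to CLI-displayed IDs
        parent = digest  # each layer builds on the one below it
    return ids


def reusable_layers(old: list[str], new: list[str]) -> int:
    """Count the leading layers that are identical and could come from a cache."""
    count = 0
    for a, b in zip(old, new):
        if a != b:
            break
        count += 1
    return count


v1 = layer_ids([
    "FROM httpd:latest",
    "COPY index.html /usr/local/apache2/htdocs/index.html",
    "COPY css/ /usr/local/apache2/htdocs/css/",
])
v2 = layer_ids([
    "FROM httpd:latest",
    "COPY home.html /usr/local/apache2/htdocs/index.html",  # changed step
    "COPY css/ /usr/local/apache2/htdocs/css/",
])
print(reusable_layers(v1, v2))  # only the FROM layer is shared
```

Even though the final COPY line is unchanged, its layer ID differs because its parent changed. This is why instructions that change rarely are usually placed near the top of a Dockerfile.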

Once you have an image, you can push it to a registry. Registries provide centralized storage so that you can share containers with others. The default registry is Docker Hub.

When you run a command that references an image, Docker first checks whether it's available locally. If it isn't, it will try to pull it from Docker Hub. You can manually pull images with the docker pull command:

docker pull httpd:latest

If you want to publish an image, create a Docker Hub account. Run docker login and enter your username and password.

Next, tag your image using your Docker Hub username:

docker tag my-image:latest docker-hub-username/my-image:latest

Now, you can push your image:

docker push docker-hub-username/my-image:latest

Other users will be able to pull your image and start containers with it.

You can run your own registry if you need private image storage. Several third-party services also offer Docker registries as alternatives to Docker Hub.
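How does Docker know whether a name like httpd:latest refers to Docker Hub or to your own registry? The Python sketch below is a simplified model of how a short reference expands. If the first path component does not look like a host, the image goes to Docker Hub (docker.io), and single-component names are official images under library/. The function name is hypothetical, localhost:5000 is only an example address for a self-hosted registry, and digest references are not handled.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference into registry/repository:tag form.

    Simplified model of Docker's rules; digests are not handled.
    """
    first, sep, rest = ref.partition("/")
    # The first path component counts as a registry host only if it looks
    # like one: it contains a dot or a port, or it is "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", ref  # Docker Hub is the default
        if "/" not in path:
            path = "library/" + path  # official images live under library/
    if ":" not in path.rsplit("/", 1)[-1]:
        path += ":latest"  # default tag
    return f"{registry}/{path}"


for ref in ["httpd:latest",
            "docker-hub-username/my-image:latest",
            "localhost:5000/my-image"]:
    print(ref, "->", normalize_image_ref(ref))
```

This is also why you tag an image with your Docker Hub username before pushing. The username becomes the repository namespace under docker.io, and a private registry's host name takes the place of docker.io.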

The Docker CLI has several commands to let you manage your running containers. Here are some of the most useful ones to know:

Listing Containers

docker ps shows you all your running containers. Adding the -a flag will show stopped containers, too.

Stopping and Starting Containers

To stop a container, run docker stop my-container. Replace my-container with the container's name or ID. You can get this information from the ps command. A stopped container is restarted with docker start my-container.

Containers usually run for as long as their main process stays alive. Restart policies control what happens when a container stops or your host restarts. Pass --restart always to docker run to make Docker restart a container automatically whenever it exits. Docker waits a short, increasing delay between repeated restarts.
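The values Docker accepts for --restart are no (the default), on-failure, always, and unless-stopped. The Python function below is a simplified sketch of the decision each policy makes when a container's main process exits. The function and its parameters are hypothetical, and it leaves out on-failure's optional retry limit, the restart delay, and daemon restarts, which is where always and unless-stopped differ.

```python
def should_restart(policy: str, exit_code: int, stopped_manually: bool = False) -> bool:
    """Decide whether Docker restarts a container after its main process exits.

    Simplified model: ignores retry limits, restart delays, and daemon restarts.
    """
    if stopped_manually:
        # A container you stopped yourself is not restarted at this point.
        return False
    if policy == "no":
        return False
    if policy == "on-failure":
        return exit_code != 0  # only restart after a non-zero exit code
    if policy in ("always", "unless-stopped"):
        return True
    raise ValueError(f"unknown restart policy: {policy!r}")


print(should_restart("on-failure", 1))
print(should_restart("always", 0))
```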

Getting a Shell

You can run a command in a container using docker exec my-container my-command. This is useful when you want to manually invoke an executable that's separate from the container's main process.

Add the -it flags if you need interactive access: -i keeps standard input open and -t allocates a pseudo-terminal. This lets you drop into a shell by running docker exec -it my-container sh.

Monitoring Logs

Docker automatically collects output emitted to a container's standard output and standard error streams. The docker logs my-container command will show a container's logs inside your terminal. The --follow flag sets up a continuous stream so that you can view logs in real time.

Cleaning Up Resources

Old containers and images can quickly pile up on your system. Use docker rm my-container to delete a container by its ID or name.

The command for images is docker rmi my-image:latest. Pass the image's ID or full tag name. If you specify a tag, only that tag is removed; the image itself is deleted once it has no more tags assigned, so any other tags it has remain usable.
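Here is a toy Python model of those tagging rules. It assumes a hypothetical in-memory store that maps each image ID to its set of tags, and it ignores details such as containers that still use the image. It only shows how untagging and deletion relate, including Docker's refusal to delete an image by ID while several tags still point at it, unless you pass -f.

```python
def remove_image(images: dict, ref: str) -> None:
    """Toy model of docker rmi; `images` maps image ID -> set of tags."""
    if ref in images:
        # Removing by ID: refused while several tags still point at the image
        # (the real CLI lets you override this with -f).
        if len(images[ref]) > 1:
            raise ValueError(f"image {ref} is referenced by multiple tags")
        del images[ref]
        return
    owner = next((image_id for image_id, tags in images.items() if ref in tags), None)
    if owner is None:
        raise KeyError(f"No such image: {ref}")
    images[owner].discard(ref)  # untag
    if not images[owner]:
        del images[owner]  # last tag gone, so the image itself is deleted


store = {"94e81": {"my-image:latest", "my-image:v1"}}  # hypothetical image
remove_image(store, "my-image:v1")
print(store)  # the image survives under its remaining tag
```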

Bulk clean-ups are possible using the prune commands, such as docker container prune, docker image prune, and docker system prune. These give you an easy way to remove all stopped containers and dangling images.

Graphical Management

If the terminal's not your thing, you can use third-party tools to set up a graphical interface for Docker. Web dashboards let you quickly monitor and manage your installation. They also help you take remote control of your containers.

Docker containers are ephemeral by default. Changes made to a container's filesystem are lost once the container is removed. It's not safe to run any form of file storage system in a container started with a basic docker run command.

There are a few different approaches to managing persistent data. The most common is to use a Docker volume. Volumes are storage units that are mounted into container filesystems. Any data in a volume will remain intact after its linked container stops, letting you connect another container in the future.

Dockerized workloads can be more secure than their bare metal counterparts, as Docker provides some separation between the operating system and your services. Nonetheless, Docker is a potential security issue, as the Docker daemon normally runs as root and could be exploited to run malicious software.

If you're only running Docker as a development tool, the default installation is generally safe to use. Production servers and machines with a network-exposed daemon socket should be hardened before you go live.

Audit your Docker installation to identify potential security issues. There are automated tools available that can help you find weaknesses and suggest resolutions. You can also scan individual container images for issues that could be exploited from within.

The docker CLI is designed around managing individual containers, but you'll often want to use containers in aggregate. Docker Compose is a tool that lets you define your containers declaratively in a YAML file. You can start them all up with a single command.

This is helpful when your project depends on other services, such as a web backend that relies on a database server. You can define both containers in your docker-compose.yml and benefit from streamlined management with automatic networking.

Here's a simple docker-compose.yml file:

version: "3"
services:
  app:
    image: app-server:latest
    ports:
      - "8000:80"
  database:
    image: database-server:latest
    volumes:
      - database-data:/data
volumes:
  database-data:

This defines two containers (app and database). A volume is created for the database. This gets mounted to /data in the container. The app server's port 80 is exposed as 8000 on the host. Run docker-compose up -d to spin up both services, including the network and volume.

The use of Docker Compose lets you write reusable container definitions that you can share with others. You could commit a docker-compose.yml into your version control instead of having developers memorize docker run commands.

There are other approaches to running multiple containers, too. Docker App was an experimental tool that provided another level of abstraction, although it is no longer actively developed. Elsewhere in the ecosystem, Podman is a Docker alternative that lets you create "pods" of containers within your terminal.

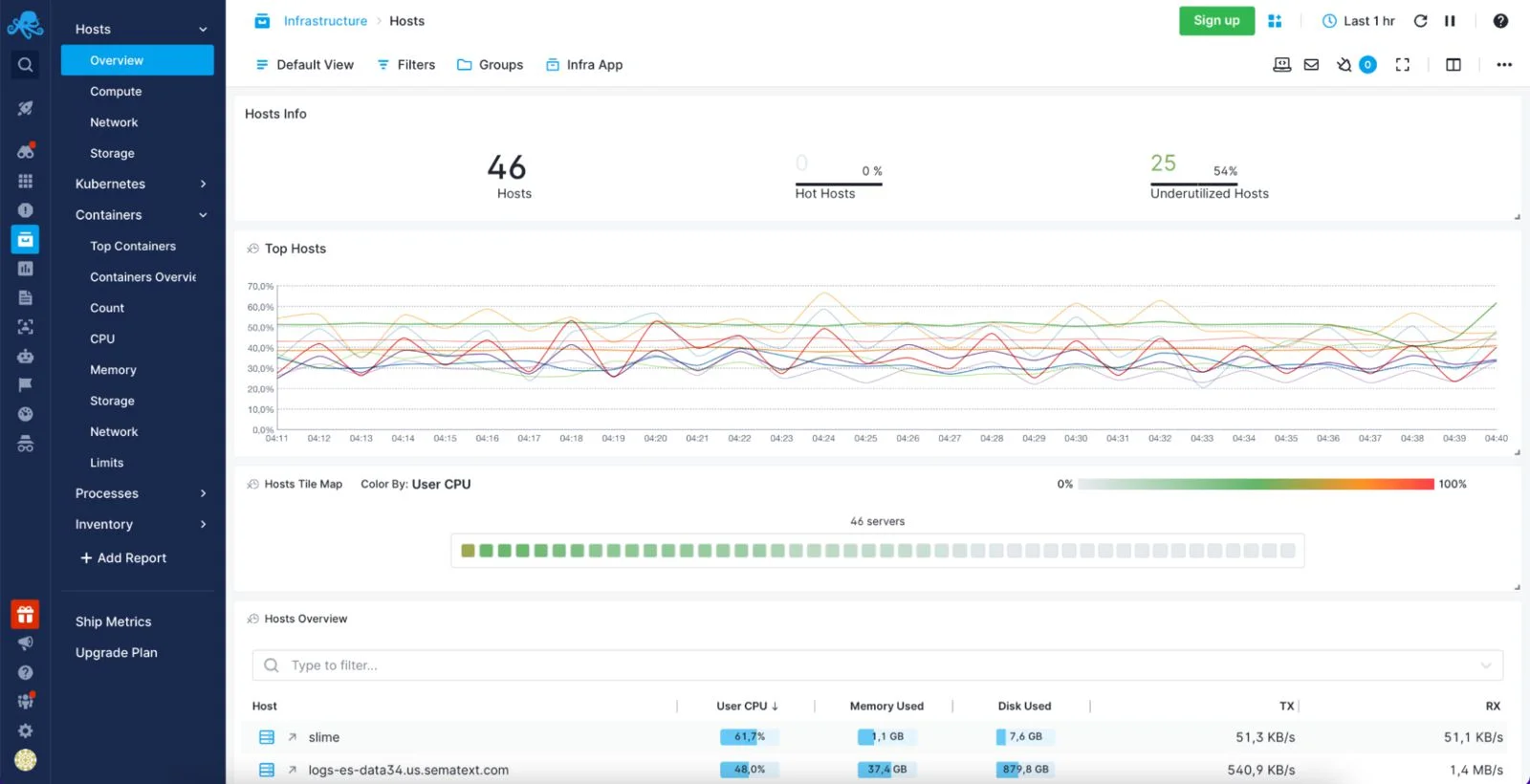

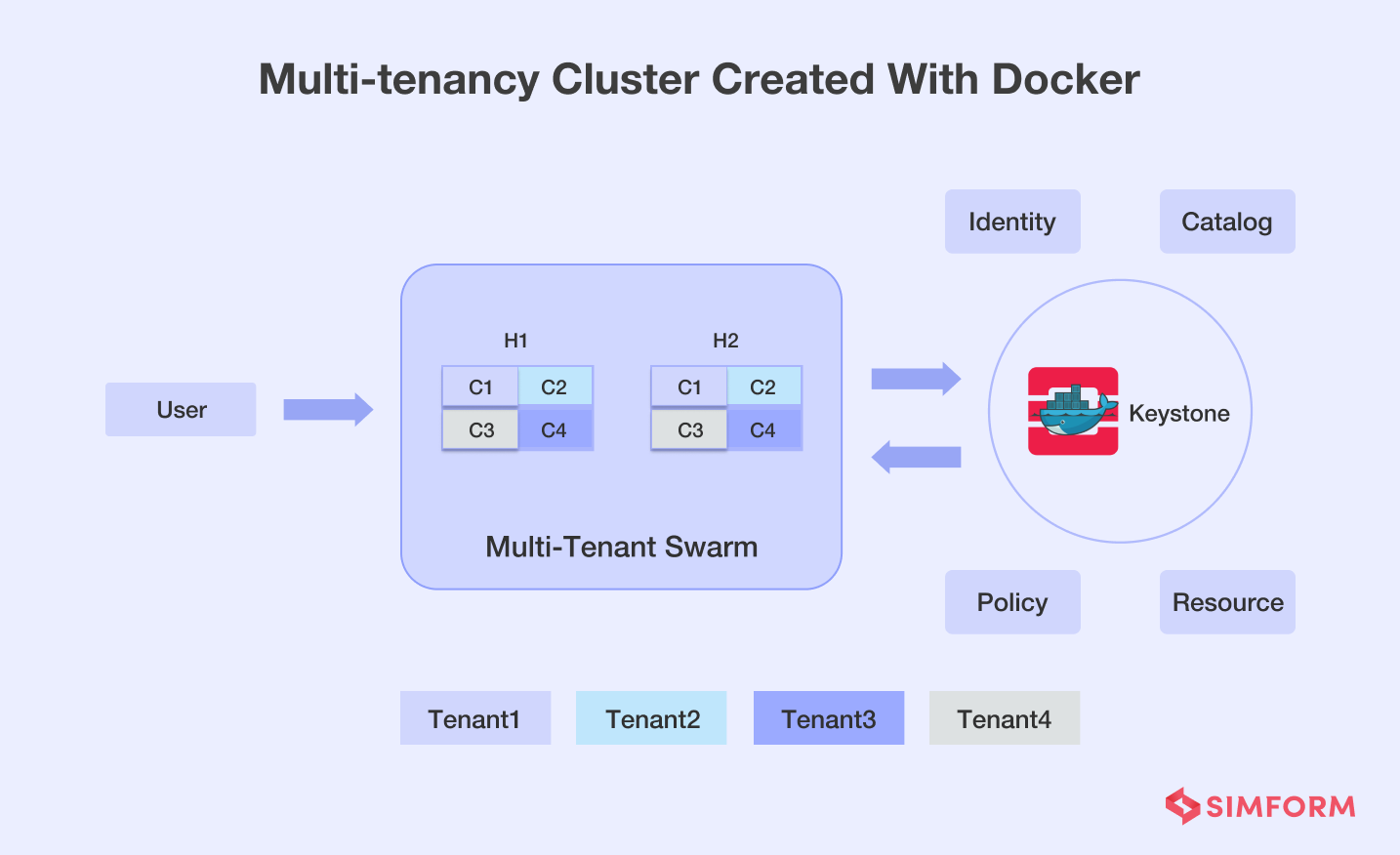

Docker isn't normally run as-is in production. It's now more common to use an orchestration platform such as Kubernetes or Docker Swarm mode. These tools are designed to handle multiple container replicas, which improves scalability and reliability.

Docker is only one component in the broader containerization movement. Orchestrators utilize the same container runtime technologies to provide an environment that's a better fit for production. Using multiple container instances allows for rolling updates as well as distribution across machines, making your deployment more resilient to change and outage. The regular docker CLI targets one host and works with individual containers.

Docker gives you everything you need to work with containers. It has become a key tool for software development and system administration. The principal benefits are increased isolation and portability for individual services.

Getting acquainted with Docker requires an understanding of the basic container and image concepts. You can apply these to create your own specialized images and environments that containerize your workloads.

Docker Simplified: A Hands-On Guide for Absolute Beginners

Whether you are planning to start your career in DevOps or you are already in it, if you do not have Docker listed on your resume, it’s undoubtedly time to think about it, as Docker is one of the critical skills for anyone working in the DevOps arena.

In this post, I will try my best to explain Docker in the simplest way I can.

Before we take a deep dive and start exploring Docker, let’s take a look at what topics we will be covering as part of this beginner’s guide.

- What is Docker?

- The problem Docker solves

- Advantages and disadvantages of using Docker

- Core components of Docker

- Docker terminology

- What is Docker Hub?

- Docker editions

- Installing Docker

- Some essential Docker commands to get you started

Let’s begin with the first question: what is Docker?

In simple terms, Docker is a software platform that simplifies the process of building, running, managing and distributing applications. It does this by virtualizing the operating system of the computer on which it is installed and running.

The first edition of Docker was released in 2013.

Docker is developed using the Go programming language.

Thanks to the rich set of functionality Docker offers, it has been widely adopted by some of the world’s leading organizations and universities, such as Visa, PayPal, Cornell University and Indiana University (just to name a few), to run and manage their applications.

Now let’s try to understand the problem, and the solution Docker has to offer.

The Problem

Let’s say you have three different Python-based applications that you plan to host on a single server (which could either be a physical or a virtual machine).

Each of these applications uses a different version of Python, and the associated libraries and dependencies also differ from one application to another.

Since installing and isolating several Python versions, each with its own set of libraries, on the same machine is difficult to manage, this makes it hard to host all three applications on the same computer.

The Solution

Let’s look at how we could solve this problem without making use of Docker. In such a scenario, we could solve this problem either by having three physical machines, or a single physical machine, which is powerful enough to host and run three virtual machines on it.

Both options would allow us to install a different version of Python on each of these machines, along with its associated dependencies.

Irrespective of which solution we choose, procuring and maintaining the hardware is quite expensive.

Now, let’s check out how Docker could be an efficient and cost-effective solution to this problem.

To understand this, we need to take a look at how exactly Docker functions.

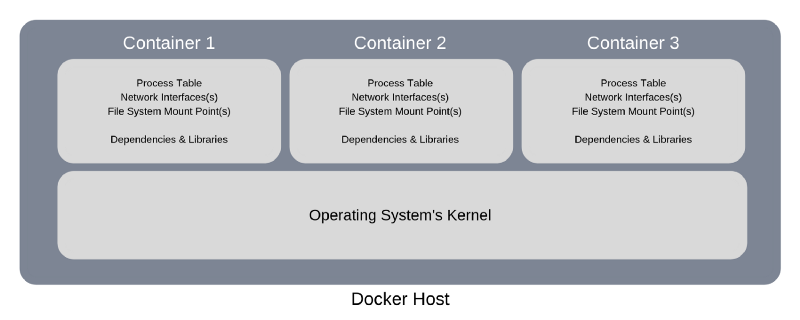

The machine on which Docker is installed and running is usually referred to as a Docker Host or Host in simple terms.

So, whenever you deploy an application on the host, Docker creates a logical entity on it to host that application. In Docker terminology, we call this logical entity a Container, or a Docker Container to be more precise.

A Docker Container doesn’t have its own operating system kernel running on it. Instead, it gets its own isolated view of the process table, network interface(s), and filesystem mount point(s), which the operating system of the host provides.

The kernel of the host’s operating system, meanwhile, is shared across all the containers that are running on it.

This allows each container to be isolated from the others on the same host. Thus, multiple containers with different application requirements and dependencies can run on the same host, as long as they have the same operating system requirements.

To understand how Docker has been beneficial in solving this problem, you need to refer to the next section, which discusses the advantages and disadvantages of using Docker.

In short, Docker virtualizes the operating system of the host on which it is installed and running, rather than virtualizing the hardware components.

The Advantages and Disadvantages of using Docker

Advantages of using Docker

Some of the key benefits of using Docker are listed below:

- Docker allows multiple applications with different application requirements and dependencies to be hosted together on the same host, as long as they have the same operating system requirements.

- Storage Optimized. A large number of applications can be hosted on the same host, as container images can be as small as a few megabytes and consume very little disk space.

- Lightweight. A container does not have a full operating system running inside it. Thus, it consumes very little memory compared to a virtual machine (which has a complete operating system installed and running on it). This also reduces the startup time to just a few seconds, compared to the couple of minutes required to boot up a virtual machine.

- Reduces costs. Docker is less demanding when it comes to the hardware required to run it.

Disadvantages of using Docker

- Applications with different operating system requirements cannot be hosted together on the same Docker Host. For example, let’s say we have 4 different applications, out of which 3 require a Linux-based operating system and the other requires a Windows-based operating system. In such a scenario, the 3 applications that require a Linux-based operating system can be hosted on a single Docker Host, whereas the application that requires a Windows-based operating system needs to be hosted on a different Docker Host.

Core Components of Docker

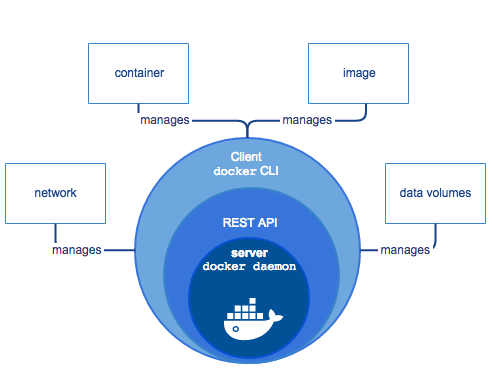

Docker Engine is one of the core components of Docker. It is responsible for the overall functioning of the Docker platform.

Docker Engine is a client-server based application and consists of 3 main components.

The Server runs a daemon known as dockerd (the Docker daemon), which is a long-running background process. It is responsible for creating and managing Docker Images, Containers, Networks and Volumes on the Docker platform.

The REST API specifies how applications can interact with the Server and instruct it to carry out their tasks.

The Client is a command-line interface that allows users to interact with Docker by running commands.

Let us take a quick look at some of the terminology associated with Docker.

Docker Images and Docker Containers are the two essential things that you will come across daily while working with Docker.

In simple terms, a Docker Image is a template that contains the application, and all the dependencies required to run that application on Docker.

On the other hand, as stated earlier, a Docker Container is a logical entity. In more precise terms, it is a running instance of the Docker Image.

Docker Hub is the official online registry where you can find a large collection of Docker Images that are available for you to use.

Docker Hub also allows us to store and distribute our own custom images if we wish to do so. We can make them either public or private, based on our requirements.

Please Note: At the time of writing, free accounts were only allowed to keep one private repository. If we wish to keep more than one Docker Image private, we need to subscribe to a paid plan.

At the time this guide was written, Docker was available in 2 different editions, as listed below:

- Community Edition (CE)

- Enterprise Edition (EE)

The Community Edition is suitable for individual developers and small teams. It offers limited functionality compared to the Enterprise Edition.

The Enterprise Edition, on the other hand, is suitable for large teams and for using Docker in production environments.

The Enterprise Edition is further categorized into three different editions, as listed below:

- Basic Edition

- Standard Edition

- Advanced Edition

One last thing before we go ahead and get our hands dirty with Docker: we need to have Docker installed.

Below are the links to the official Docker CE installation guides. You can follow these guides to install Docker on your machine, as they are simple and straightforward.

- CentOS Linux

- Debian Linux

- Fedora Linux

- Ubuntu Linux

- Microsoft Windows

Want to skip installation and head off straight to practicing Docker?

Just in case you are feeling too lazy to install Docker, or you don’t have enough resources available on your computer, you don’t need to worry. Here’s the solution to your problem.

You can head over to Play with Docker, which is an online playground for Docker. It allows you to practice Docker commands immediately, without having to install anything on your machine. The best part is that it’s simple to use and available free of cost.

Docker Commands

Now it’s time to get our hands dirty with the Docker commands we have all been waiting for.

docker create

The first command which we will be looking at is the docker create command.

This command allows us to create a new container.

The syntax for this command is as shown below:

docker create [OPTIONS] IMAGE [COMMAND] [ARG...]

Please Note: Anything enclosed within square brackets is optional. This applies to all the commands that you will see in this guide.

Some examples of using this command are shown below:

docker create fedora

In the above example, the docker create command would create a new container using the latest Fedora image.

Before creating the container, Docker checks whether the latest official Fedora image is available on the Docker Host. If it isn’t, Docker downloads the Fedora image from Docker Hub before creating the container. If the Fedora image is already present on the Docker Host, Docker uses that image to create the container.

If the container was created successfully, Docker will return the container ID. For instance, in the above example 02576e880a2ccbb4ce5c51032ea3b3bb8316e5b626861fc87d28627c810af03 is the container ID returned by Docker.

Each container has a unique container ID. We refer to the container using its container ID for performing various operations on the container, such as starting, stopping, restarting, and so on.

Now, let us look at another example of the docker create command, this time with options and a command passed to it.

docker create -t -i ubuntu bash

In the above example, the docker create command creates a container using the Ubuntu image (as stated earlier, if the image isn’t available on the Docker Host, Docker downloads the latest image from Docker Hub before creating the container).

The -t option allocates a pseudo-terminal and the -i option keeps standard input open, so that the user can interact with the container. The trailing bash argument instructs Docker to execute the bash command whenever the container is started.

docker ps

The next command we will look at is the docker ps command.

docker ps

The docker ps command lists only the containers that are currently running on the Docker Host.

If you want to view all the containers that were created on this Docker Host, irrespective of their current status (running or exited), include the -a option:

docker ps -a

Before we proceed further, let’s try to decode and understand the output of the docker ps command.

CONTAINER ID: A unique string of alphanumeric characters associated with each container.

IMAGE: Name of the Docker Image used to create this container.

COMMAND: The command that is executed when the container is started.

CREATED: This shows the time elapsed since this container was created.

STATUS: This shows the current status of the container, along with the time elapsed, in its present state.

If the container is running, it will display as Up along with the time period elapsed (for example, Up About an hour or Up 3 minutes).

If the container is stopped, then it will display as Exited followed by the exit status code within round brackets, along with the time period elapsed (for example, Exited (0) 3 weeks ago or Exited (137) 15 seconds ago, where 0 and 137 are the exit codes).

PORTS: This displays any port mappings defined for the container.

NAMES: Apart from the CONTAINER ID, each container is also assigned a unique name. We can refer to a container either using its container ID or its unique name. Docker automatically assigns a randomly generated name (such as elated_franklin) to each container it creates. If you want to give the container your own name, include the --name option in the docker create or the docker run command (we will look at the docker run command later).

I hope this gives you a better understanding of the output of the docker ps command.
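The exit codes in the STATUS column follow the usual Unix convention: a code above 128 usually means the main process was terminated by a signal, whose number is the code minus 128. So Exited (137) typically points to SIGKILL (signal 9), which is what you see when docker stop's grace period runs out or the kernel's out-of-memory killer steps in. The helper below is a hypothetical sketch that decodes a code using Python's signal module. Signal numbers are platform-specific, and the example assumes Linux.

```python
import signal


def describe_exit_code(code: int) -> str:
    """Roughly interpret the exit code shown in docker ps's STATUS column."""
    if code == 0:
        return "exited successfully"
    if code > 128:
        # Convention: a process killed by signal N reports exit code 128 + N.
        try:
            name = signal.Signals(code - 128).name
        except ValueError:
            name = f"signal {code - 128}"  # number unknown on this platform
        return f"killed by {name}"
    return f"failed with exit code {code}"


for code in (0, 1, 137, 143):
    print(code, "->", describe_exit_code(code))
```

A program can also choose to exit with a code above 128 on its own, so treat the signal name as a strong hint rather than proof.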

docker start

The next command we will look at, is the docker start command.

This command starts any stopped container(s).

We can start a container either by specifying the first few unique characters of its container ID or by specifying its name.

docker start 30986

In the above example, Docker starts the container whose container ID begins with 30986.

docker start elated_franklin

In this example, Docker starts the container named elated_franklin.

docker stop

The next command on the list is the docker stop command.

This command stops any running container(s).

It is similar to the docker start command.

We can stop the container either by specifying the first few unique characters of its container ID or by specifying its name.

docker stop 30986

In the above example, Docker will stop the container whose container ID begins with 30986.

docker stop elated_franklin

In this example, Docker will stop the container named elated_franklin.

docker restart

The next command we will look at is the docker restart command.

This command restarts any running container(s).

We can restart the container either by specifying the first few unique characters of its container ID or by specifying its name.

docker restart 30986

In the above example, Docker will restart the container whose container ID begins with 30986.

docker restart elated_franklin

In this example, Docker will restart the container named elated_franklin.

docker run

The next command we will be looking at is the docker run command.

This command first creates the container, and then it starts the container. In short, this command is a combination of the docker create and the docker start commands.

It has a syntax similar to that of the docker create command.

docker run ubuntu

In the above example, Docker will create the container using the latest Ubuntu image and then immediately start the container.

If we execute the above command, the container starts and then stops almost immediately, because its default command (bash) exits when no terminal is attached. We wouldn’t get any chance to interact with the container at all.

If we want to interact with the container, we need to pass the -it options (a hyphen followed by i and t) to the docker run command. This presents us with a terminal that we can use to interact with the container by typing in appropriate commands. Below is an example of the same.

docker run -it ubuntu

In order to come out of the container, you need to type exit in the terminal.

docker rm

Moving on to the next command: if we want to delete a container, we use the docker rm command. Its syntax is:

docker rm [OPTIONS] CONTAINER [CONTAINER...]

This means we can delete several containers within a single command, and each container can be specified either by its container ID or by its name.

Please Note: The containers need to be in a stopped state in order to be deleted, unless you force removal with the -f option.

docker images

docker images is the next command on the list.

This command lists all the Docker Images that are present on your Docker Host.

docker images

Let us decode the output of the docker images command.

REPOSITORY: This represents the unique name of the Docker Image.

TAG: An image can have one or more tags. A tag usually represents a version of the image.

A tag is usually a word (such as latest), a version number, or a combination of alphanumeric characters.

IMAGE ID: A unique string of alphanumeric characters associated with each image.

CREATED: This shows the time elapsed since this image has been created.

SIZE: This shows the size of the image.

docker rmi

The next command on the list is the docker rmi command.

The docker rmi command allows us to remove one or more images from the Docker Host.

docker rmi mysql

The above command removes the image named mysql from the Docker Host.

docker rmi httpd fedora

The above command removes the images named httpd and fedora from the Docker Host.

docker rmi 94e81

The above command removes the image whose image ID starts with 94e81 from the Docker Host.

docker rmi ubuntu:trusty

The above command removes the image named ubuntu, with the tag trusty, from the Docker Host.

These are some of the basic Docker commands you will use most often. There are many more Docker commands to explore.

Containerization has only recently gotten the attention it deserves, although it has been around for a long time. Some of the top tech companies, like Google, Amazon Web Services (AWS), Intel, Tesla, and Juniper Networks, have their own custom versions of container engines. They rely heavily on them to build, run, manage, and distribute their applications.

Docker is an extremely powerful containerization engine, and it has a lot to offer when it comes to building, running, managing and distributing your applications efficiently.

You have just seen Docker at a very high level. There is a lot more to learn about Docker, such as:

- Docker commands (More powerful commands)

- Docker Images (Build your own custom images)

- Docker Networking (Set up and configure networking)

- Docker Services (Grouping containers that use the same image)

- Docker Stack (Grouping services required by an application)

- Docker Compose (Tool for managing and running multiple containers)

- Docker Swarm (Grouping and managing one or more machines on which Docker is running)

- And much more…

If you have found Docker fascinating and want to learn more about it, I would recommend enrolling in a dedicated course. The ones I took were very informative and straight to the point.

If you are an absolute beginner, choose a course designed for beginners.

If you already have good knowledge of Docker, are confident with the basics, and want to expand your knowledge, choose a course aimed at more advanced Docker topics.

Docker is a future-proof skill that is still gaining momentum.

Investing your time and money in learning Docker is not something you are likely to regret.

I hope you found this post informative. Feel free to share it; that really means a lot to me.


Thank you so much for taking your precious time to read this post.

Disclaimer: All product and company names are trademarks™ or registered® trademarks of their respective holders. Use of them does not imply any endorsement by them. There may be affiliate links within this post.



Learn to build and deploy your distributed applications easily to the cloud with Docker

Written and developed by Prakhar Srivastav


Introduction

What is Docker?

Wikipedia defines Docker as

an open-source project that automates the deployment of software applications inside containers by providing an additional layer of abstraction and automation of OS-level virtualization on Linux.

Wow! That's a mouthful. In simpler words, Docker is a tool that allows developers, sysadmins and others to easily deploy their applications in a sandbox (called a container) that runs on the host operating system, i.e. Linux. The key benefit of Docker is that it allows users to package an application with all of its dependencies into a standardized unit for software development. Unlike virtual machines, containers do not have high overhead and hence enable more efficient usage of the underlying system and resources.

What are containers?

The industry standard today is to use Virtual Machines (VMs) to run software applications. VMs run applications inside a guest Operating System, which runs on virtual hardware powered by the server’s host OS.

VMs are great at providing full process isolation for applications: there are very few ways a problem in the host operating system can affect the software running in the guest operating system, and vice-versa. But this isolation comes at great cost — the computational overhead spent virtualizing hardware for a guest OS to use is substantial.

Containers take a different approach: by leveraging the low-level mechanics of the host operating system, containers provide most of the isolation of virtual machines at a fraction of the computing power.

Why use containers?

Containers offer a logical packaging mechanism in which applications can be abstracted from the environment in which they actually run. This decoupling allows container-based applications to be deployed easily and consistently, regardless of whether the target environment is a private data center, the public cloud, or even a developer’s personal laptop. This gives developers the ability to create predictable environments that are isolated from the rest of the applications and can be run anywhere.

From an operations standpoint, apart from portability, containers also give more granular control over resources, which improves the efficiency of your infrastructure and can result in better utilization of your compute resources.

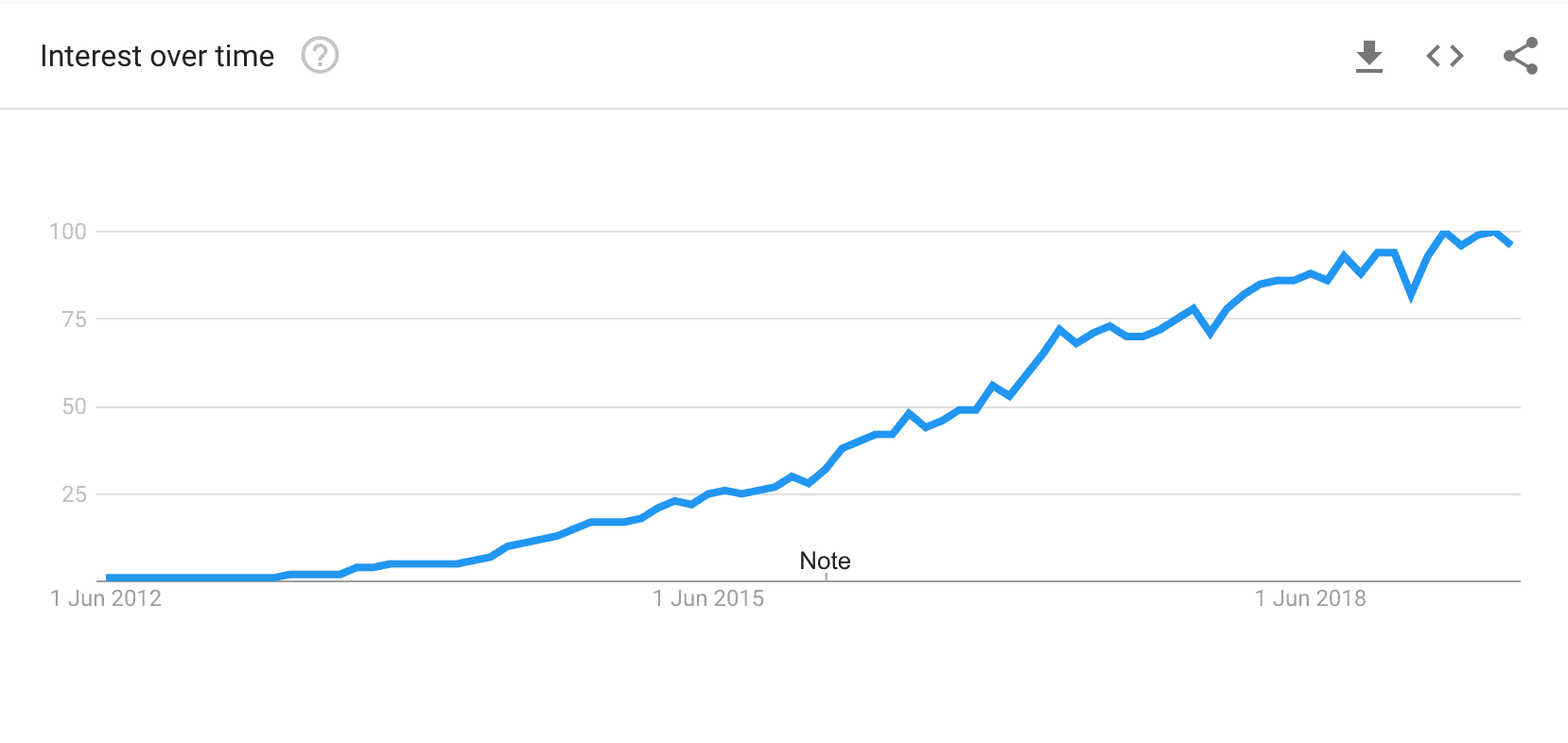

Google Trends for Docker

Due to these benefits, containers (& Docker) have seen widespread adoption. Companies like Google, Facebook, Netflix and Salesforce leverage containers to make large engineering teams more productive and to improve utilization of compute resources. In fact, Google credited containers for eliminating the need for an entire data center.

What will this tutorial teach me?

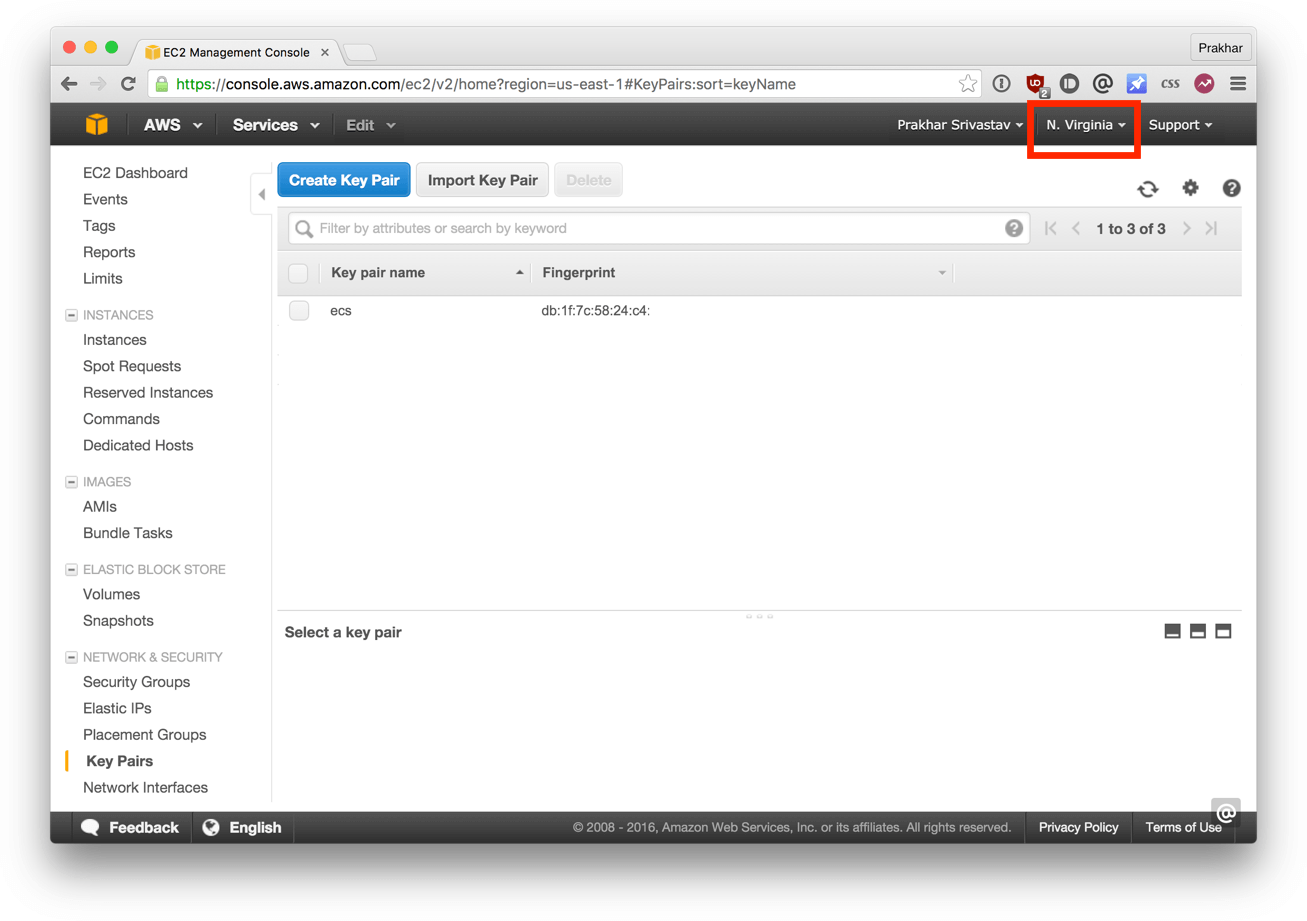

This tutorial aims to be the one-stop shop for getting your hands dirty with Docker. Apart from demystifying the Docker landscape, it'll give you hands-on experience with building and deploying your own webapps on the cloud. We'll be using Amazon Web Services to deploy a static website, and two dynamic webapps on EC2 using Elastic Beanstalk and Elastic Container Service. Even if you have no prior experience with deployments, this tutorial should be all you need to get started.

Getting Started

This document contains a series of several sections, each of which explains a particular aspect of Docker. In each section, we will be typing commands (or writing code). All the code used in the tutorial is available in the GitHub repo.

Note: This tutorial uses version 18.05.0-ce of Docker. If you find any part of the tutorial incompatible with a future version, please raise an issue. Thanks!

Prerequisites

There are no specific skills needed for this tutorial beyond a basic comfort with the command line and using a text editor. This tutorial uses git clone to clone the repository locally. If you don't have Git installed on your system, either install it or remember to manually download the zip files from GitHub. Prior experience in developing web applications will be helpful but is not required. As we proceed further along the tutorial, we'll make use of a few cloud services. If you're interested in following along, please create an account on each of these websites:

- Amazon Web Services

Setting up your computer

Getting all the tooling set up on your computer can be a daunting task, but thankfully, as Docker has become stable, getting Docker up and running on your favorite OS has become very easy.

Until a few releases ago, running Docker on OS X and Windows was quite a hassle. Lately, however, Docker has invested significantly in improving the onboarding experience for users on these operating systems, so running Docker is now a cakewalk. Docker's getting started guide has detailed instructions for setting up Docker on Mac, Linux and Windows.

Once you are done installing Docker, test your installation by running docker run hello-world. If everything is set up correctly, the hello-world container prints a short "Hello from Docker!" message and exits.

Hello World

Playing with Busybox

Now that we have everything setup, it's time to get our hands dirty. In this section, we are going to run a Busybox container on our system and get a taste of the docker run command.

To get started, let's run docker pull busybox in our terminal.

Note: Depending on how you've installed Docker on your system, you might see a permission denied error after running the above command. If you're on a Mac, make sure the Docker engine is running. If you're on Linux, then prefix your docker commands with sudo. Alternatively, you can create a docker group to get rid of this issue.

The pull command fetches the busybox image from the Docker registry and saves it to our system. You can use the docker images command to see a list of all images on your system.

Great! Let's now run a Docker container based on this image. To do that, we are going to use the almighty docker run command: docker run busybox.

Wait, nothing happened! Is that a bug? Well, no. Behind the scenes, a lot of stuff happened. When you call run, the Docker client finds the image (busybox in this case), loads up the container and then runs a command in that container. When we ran docker run busybox, we didn't provide a command, so the container ran the image's default command (a shell), which exited immediately because no terminal was attached. Well, yeah, kind of a bummer. Let's try something more exciting: docker run busybox echo "hello from busybox".

Nice, finally we see some output. In this case, the Docker client dutifully ran the echo command in our busybox container and then exited it. As you may have noticed, all of that happened pretty quickly. Imagine booting up a virtual machine, running a command and then killing it. Now you know why they say containers are fast! Ok, now it's time to see the docker ps command, which shows you all containers that are currently running.

Since no containers are running, we see only the column headers and no rows. Let's try a more useful variant: docker ps -a

docker ps -a lists all the containers we ran, including those that have exited. Notice that the STATUS column shows that these containers exited a few minutes ago.

You're probably wondering if there is a way to run more than just one command in a container. Let's try that now with docker run -it busybox sh.

Running the run command with the -it flags attaches us to an interactive tty in the container. Now we can run as many commands in the container as we want. Take some time to run your favorite commands.

Danger Zone: If you're feeling particularly adventurous, you can try rm -rf bin in the container. Make sure you run this command in the container and not on your laptop/desktop. Doing this will make other commands like ls and uptime stop working. Once everything stops working, you can exit the container (type exit and press Enter) and then start it up again with the docker run -it busybox sh command. Since Docker creates a new container every time, everything should start working again.

That concludes a whirlwind tour of the mighty docker run command, which will most likely be the command you use most often. It makes sense to spend some time getting comfortable with it. To find out more about run, use docker run --help to see a list of all the flags it supports. As we proceed further, we'll see a few more variants of docker run.

Before we move ahead, though, let's quickly talk about deleting containers. We saw above that, by running docker ps -a, we can still see remnants of a container after it has exited. Throughout this tutorial, you'll run docker run multiple times, and leaving stray containers will eat up disk space. Hence, as a rule of thumb, I clean up containers once I'm done with them. To do that, you can run the docker rm command. Just copy the container IDs from the docker ps -a output and pass them to docker rm.

On deletion, you should see the IDs echoed back to you. If you have a bunch of containers to delete in one go, copy-pasting IDs can be tedious. In that case, you can simply run docker rm $(docker ps -a -q -f status=exited).

This command deletes all containers that have a status of exited. In case you're wondering, the -q flag returns only the container IDs and -f filters the output based on the conditions provided. One last useful thing is the --rm flag, which can be passed to docker run to automatically delete the container once it exits. For one-off docker runs, the --rm flag is very useful.

In later versions of Docker, the docker container prune command can be used to achieve the same effect.

Lastly, you can also delete images that you no longer need by running docker rmi.

Terminology

In the last section, we used a lot of Docker-specific jargon which might be confusing to some. So before we go further, let me clarify some terminology that is used frequently in the Docker ecosystem.

- Images - The blueprints of our application which form the basis of containers. In the demo above, we used the docker pull command to download the busybox image.

- Containers - Created from Docker images and run the actual application. We create a container using docker run which we did using the busybox image that we downloaded. A list of running containers can be seen using the docker ps command.

- Docker Daemon - The background service running on the host that manages building, running and distributing Docker containers. The daemon is the process that runs in the operating system which clients talk to.

- Docker Client - The command line tool that allows the user to interact with the daemon. More generally, there can be other forms of clients too, such as Kitematic, which provides a GUI to users.

- Docker Hub - A registry of Docker images. You can think of the registry as a directory of all available Docker images. If required, one can host their own Docker registries and can use them for pulling images.

Webapps with Docker

Great! So we have now looked at docker run, played with a Docker container and also got the hang of some terminology. Armed with all this knowledge, we are now ready to get to the real stuff, i.e. deploying web applications with Docker!

Static Sites

Let's start by taking baby-steps. The first thing we're going to look at is how we can run a dead-simple static website. We're going to pull a Docker image from Docker Hub, run the container and see how easy it is to run a webserver.

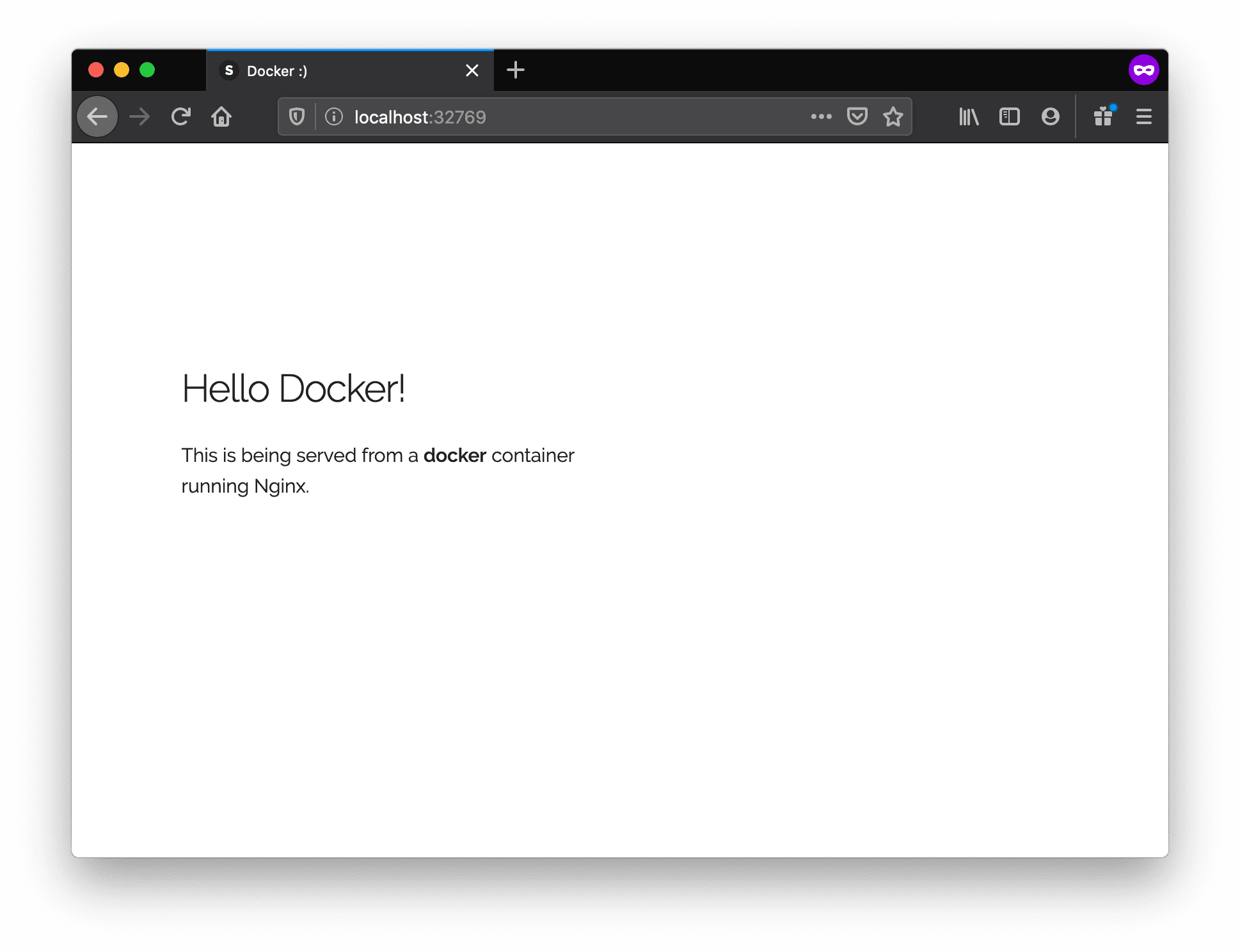

Let's begin. The image that we are going to use is a single-page website that I've already created for the purpose of this demo and hosted on the registry as prakhar1989/static-site. We can download and run the image directly in one go with docker run --rm -it prakhar1989/static-site. As noted above, the --rm flag automatically removes the container when it exits, and the -it flags attach an interactive terminal, which makes it easier to kill the container with Ctrl+C (on Windows).

Since the image doesn't exist locally, the client will first fetch the image from the registry and then run the image. If all goes well, you should see a Nginx is running... message in your terminal. Okay now that the server is running, how to see the website? What port is it running on? And more importantly, how do we access the container directly from our host machine? Hit Ctrl+C to stop the container.

Well, in this case, the client is not exposing any ports so we need to re-run the docker run command to publish ports. While we're at it, we should also find a way so that our terminal is not attached to the running container. This way, you can happily close your terminal and keep the container running. This is called detached mode.

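To do that, run docker run -d -P --name static-site prakhar1989/static-site. In this command, -d detaches our terminal, -P publishes all exposed ports to random ports, and --name gives the container the name we want. Now we can see the ports by running docker port static-site.

Open http://localhost:32769 in your browser, replacing 32769 with the host port that docker port reported on your machine (it is assigned at random).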

Note: If you're using docker-toolbox, then you might need to use docker-machine ip default to get the IP.

You can also specify a custom port to which the client will forward connections to the container.

To stop a detached container, run docker stop with the container ID. In this case, we can use the name we gave the container instead: docker stop static-site.

I'm sure you agree that was super simple. To deploy this on a real server, you would just need to install Docker and run the above Docker command. Now that you've seen how to run a webserver inside a Docker container, you must be wondering: how do I create my own Docker image? This is the question we'll be exploring in the next section.

Docker Images

We've looked at images before, but in this section we'll dive deeper into what Docker images are and build our own image! Lastly, we'll also use that image to run our application locally and finally deploy on AWS to share it with our friends! Excited? Great! Let's get started.

Docker images are the basis of containers. In the previous example, we pulled the Busybox image from the registry and asked the Docker client to run a container based on that image. To see the list of images that are available locally, use the docker images command.

The docker images output lists the images that I've pulled from the registry, along with ones that I've created myself (we'll shortly see how). The TAG refers to a particular snapshot of the image and the IMAGE ID is the corresponding unique identifier for that image.

For simplicity, you can think of an image as akin to a git repository: images can be committed with changes and have multiple versions. If you don't provide a specific version number, the client defaults to latest. For example, you can pull a specific version of the ubuntu image by appending its tag after a colon.

To get a new Docker image you can either get it from a registry (such as the Docker Hub) or create your own. There are tens of thousands of images available on Docker Hub . You can also search for images directly from the command line using docker search .

An important distinction to be aware of when it comes to images is the difference between base and child images.

Base images are images that have no parent image, usually images with an OS like ubuntu, busybox or debian.

Child images are images that build on base images and add additional functionality.

Then there are official and user images, which can be both base and child images.

Official images are images that are officially maintained and supported by the folks at Docker. These are typically one word long; python, ubuntu, busybox and hello-world are all official images.

User images are images created and shared by users like you and me. They build on base images and add additional functionality. Typically, these are formatted as user/image-name .

Our First Image

Now that we have a better understanding of images, it's time to create our own. Our goal in this section will be to create an image that sandboxes a simple Flask application. For the purposes of this workshop, I've already created a fun little Flask app that displays a random cat .gif every time it is loaded - because you know, who doesn't like cats? If you haven't already, please go ahead and clone the repository locally like so -

This should be cloned on the machine where you are running the docker commands and not inside a docker container.

The next step now is to create an image with this web app. As mentioned above, all user images are based on a base image. Since our application is written in Python, the base image we're going to use will be Python 3.

A Dockerfile is a simple text file that contains a list of commands that the Docker client calls while creating an image. It's a simple way to automate the image creation process. The best part is that the commands you write in a Dockerfile are almost identical to their equivalent Linux commands. This means you don't really have to learn new syntax to create your own Dockerfiles.

The application directory does contain a Dockerfile, but since we're doing this for the first time, we'll create one from scratch. To start, create a new blank file in your favorite text editor and save it in the same folder as the Flask app with the name Dockerfile.

We start by specifying our base image, python:3.8, using the FROM keyword.

The next step usually is to write the commands of copying the files and installing the dependencies. First, we set a working directory and then copy all the files for our app.

Now, that we have the files, we can install the dependencies.

The next thing we need to specify is the port number that needs to be exposed. Since our Flask app runs on port 5000, that's what we'll indicate, using the EXPOSE instruction.

The last step is to write the command for running the application, which is simply python ./app.py. We use the CMD instruction to do that.

The primary purpose of CMD is to tell the container which command it should run when it is started. With that, our Dockerfile is now ready. This is how it looks -
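Assembled from the steps above, the Dockerfile might look like the following sketch. It is written with a shell heredoc so it can be created from the flask-app folder in one go. The working directory /usr/src/app and the requirements.txt file name are assumptions, and running this replaces any existing Dockerfile in that folder.

```shell
#!/bin/sh
set -e

# Run from the flask-app folder; this overwrites any existing Dockerfile there.
cat > Dockerfile <<'EOF'
# base image: Python 3.8
FROM python:3.8

# set a directory for the app inside the image
WORKDIR /usr/src/app

# copy all the files to the container
COPY . .

# install dependencies (assumes they are listed in requirements.txt)
RUN pip install --no-cache-dir -r requirements.txt

# document the port the Flask app listens on
EXPOSE 5000

# run the app when the container starts
CMD ["python", "./app.py"]
EOF
```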

Now that we have our Dockerfile , we can build our image. The docker build command does the heavy-lifting of creating a Docker image from a Dockerfile .

Before you run docker build yourself, make sure to replace my username with yours, and don't forget the trailing period. This username should be the same one you created when you registered on Docker Hub. If you haven't done that yet, please go ahead and create an account. The docker build command is quite simple: it takes an optional tag name with -t and the location of the directory containing the Dockerfile.

If you don't have the python:3.8 image locally, the client will first pull it and then create your image, so the output will also show the download progress. If everything went well, your image should be ready! Run docker images and check that your image shows up.

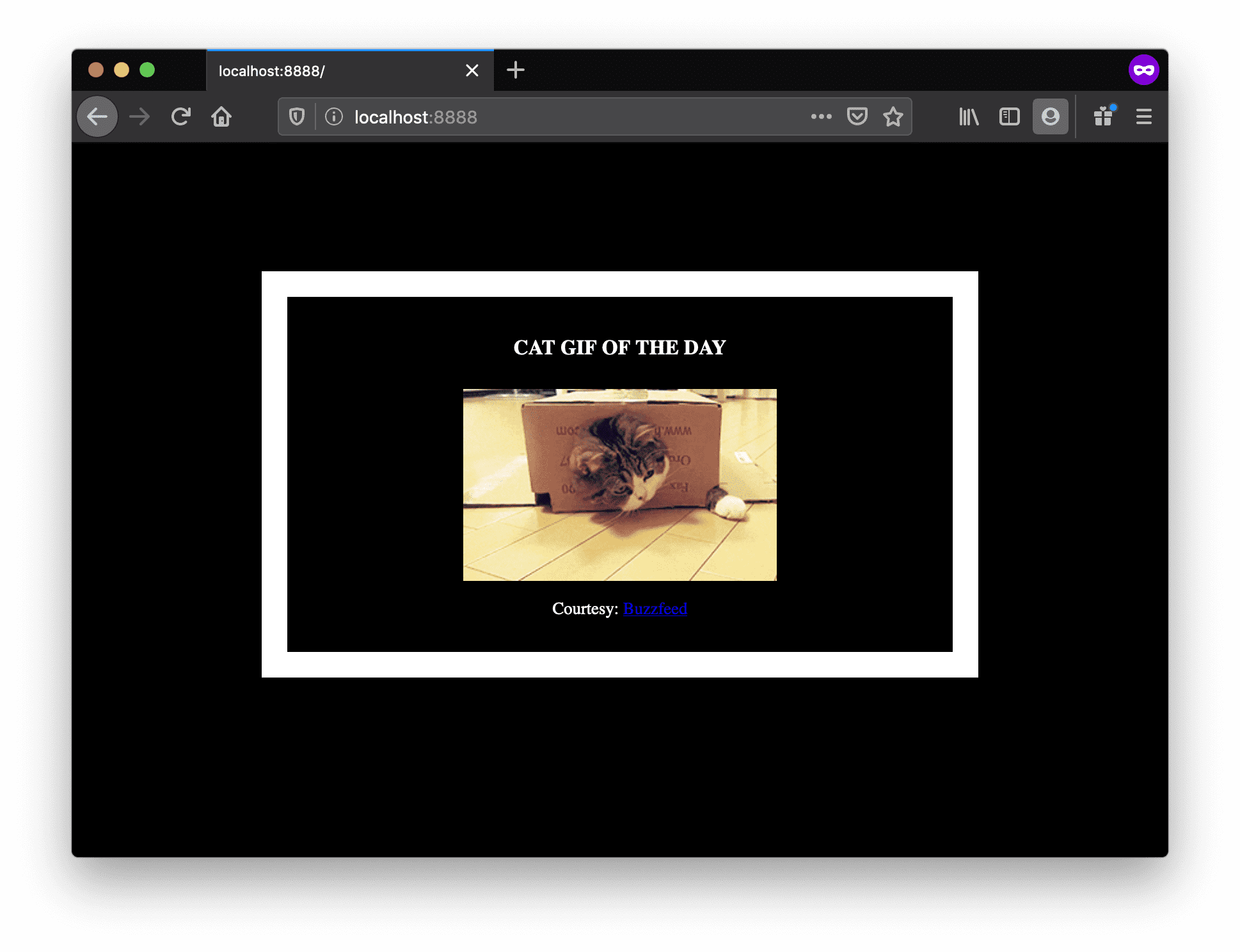

The last step in this section is to run the image and see if it actually works (replacing my username with yours).

The command we just ran uses port 5000 for the server inside the container and exposes it externally on port 8888. Open http://localhost:8888 in your browser, where your app should be live.

Congratulations! You have successfully created your first docker image.

Docker on AWS

What good is an application that can't be shared with friends, right? So in this section we are going to see how we can deploy our awesome application to the cloud so that we can share it with our friends! We're going to use AWS Elastic Beanstalk to get our application up and running in a few clicks. We'll also see how easy it is to make our application scalable and manageable with Beanstalk!

Docker push

The first thing that we need to do before we deploy our app to AWS is to publish our image on a registry which can be accessed by AWS. There are many different Docker registries you can use (you can even host your own). For now, let's use Docker Hub to publish the image.

If this is the first time you are pushing an image, you first need to log in by running docker login. Provide the same credentials that you use to log in to Docker Hub.

To publish, run docker push yourusername/image_name, replacing it with your own image's name. It is important to use the yourusername/image_name format so that the client knows where to publish.

Once that is done, you can view your image on Docker Hub. For example, here's the web page for my image.

Note: One thing that I'd like to clarify before we go ahead is that it is not imperative to host your image on a public registry (or any registry) in order to deploy to AWS. In case you're writing code for the next million-dollar unicorn startup you can totally skip this step. The reason why we're pushing our images publicly is that it makes deployment super simple by skipping a few intermediate configuration steps.

Now that your image is online, anyone who has Docker installed can play with your app with a single command: docker run -p 8888:5000 yourusername/image_name.

If you've pulled your hair out setting up local dev environments or sharing application configuration in the past, you know very well how awesome this sounds. That's why Docker is so cool!

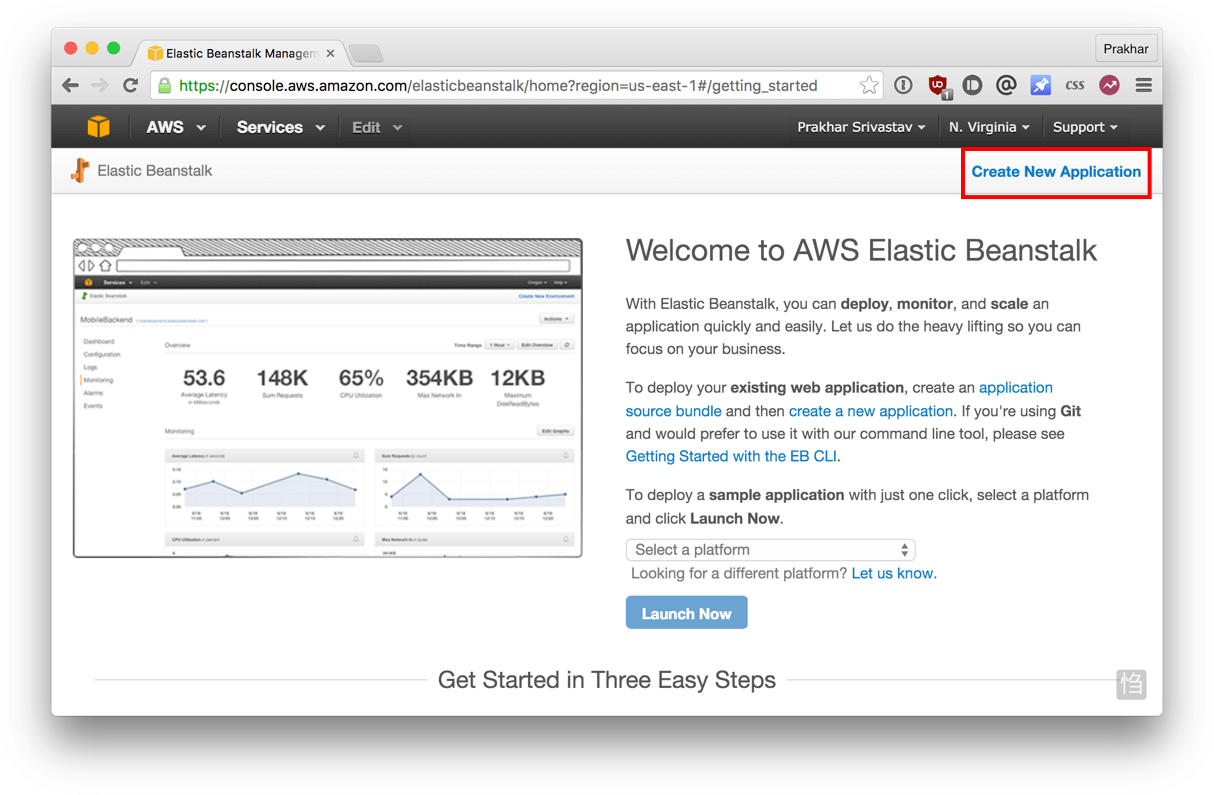

AWS Elastic Beanstalk (EB) is a PaaS (Platform as a Service) offered by AWS. If you've used Heroku, Google App Engine etc. you'll feel right at home. As a developer, you just tell EB how to run your app and it takes care of the rest, including scaling, monitoring and even updates. In April 2014, EB added support for running single-container Docker deployments, which is what we'll use to deploy our app. Although EB has a very intuitive CLI, it does require some setup, and to keep things simple we'll use the web UI to launch our application.

To follow along, you need a functioning AWS account. If you haven't already, please go ahead and do that now - you will need to enter your credit card information. But don't worry, it's free and anything we do in this tutorial will also be free! Let's get started.

Here are the steps:

- Log in to your AWS console.

- Click on Elastic Beanstalk. It will be in the compute section on the top left. Alternatively, you can access the Elastic Beanstalk console .

- Click on "Create New Application" in the top right

- Give your app a memorable (but unique) name and provide an (optional) description

- In the New Environment screen, create a new environment and choose the Web Server Environment .

- Fill in the environment information by choosing a domain. This URL is what you'll share with your friends so make sure it's easy to remember.

- Under the base configuration section, choose Docker from the predefined platforms.

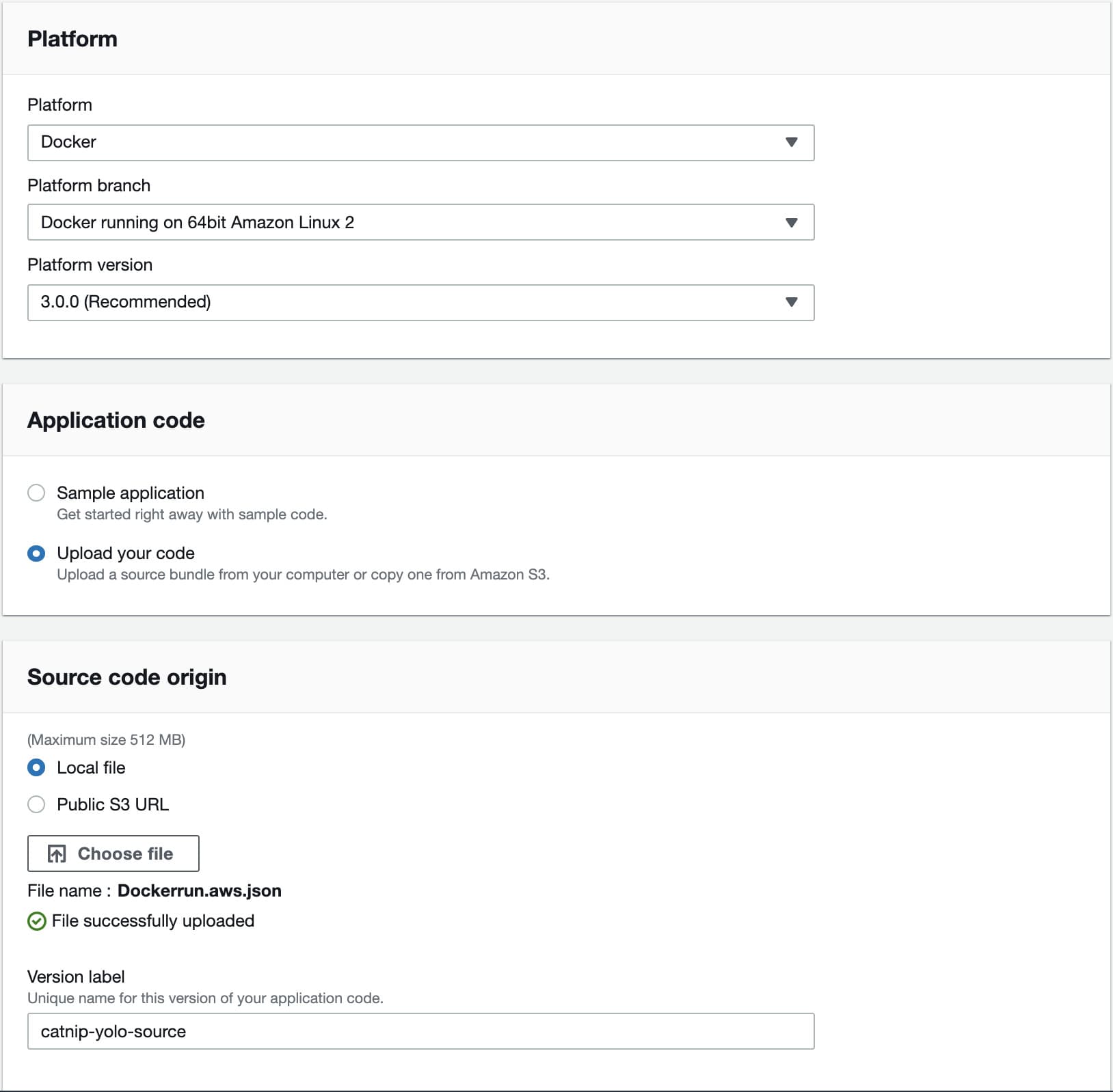

- Now we need to upload our application code. But since our application is packaged in a Docker container, we just need to tell EB about our container. Open the Dockerrun.aws.json file located in the flask-app folder and edit the Name of the image to your image's name. Don't worry, I'll explain the contents of the file shortly. When you are done, click on the radio button for "Upload your Code", choose this file, and click on "Upload".

- Now click on "Create environment". The final screen that you see will have a few spinners indicating that your environment is being set up. It typically takes around 5 minutes for the first-time setup.

While we wait, let's quickly see what the Dockerrun.aws.json file contains. This file is basically an AWS specific file that tells EB details about our application and docker configuration.

The file should be pretty self-explanatory, but you can always reference the official documentation for more information. We provide the name of the image that EB should use along with a port that the container should open.
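A minimal version of the file is sketched below, assuming the image is published as yourusername/catnip (replace with your own). It is written with a shell heredoc here, and editing the existing file by hand is equivalent. AWSEBDockerrunVersion "1" selects the single-container format, Image.Name is the image EB pulls, "Update": "true" asks EB to pull the latest version of that image, and Ports lists the container port (5000 for the Flask app).

```shell
#!/bin/sh
set -e

# Run from the flask-app folder; this overwrites the existing Dockerrun.aws.json.
# Replace the image name with your own; 5000 is where the Flask app listens.
cat > Dockerrun.aws.json <<'EOF'
{
  "AWSEBDockerrunVersion": "1",
  "Image": {
    "Name": "yourusername/catnip",
    "Update": "true"
  },
  "Ports": [
    {
      "ContainerPort": "5000"
    }
  ]
}
EOF
```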

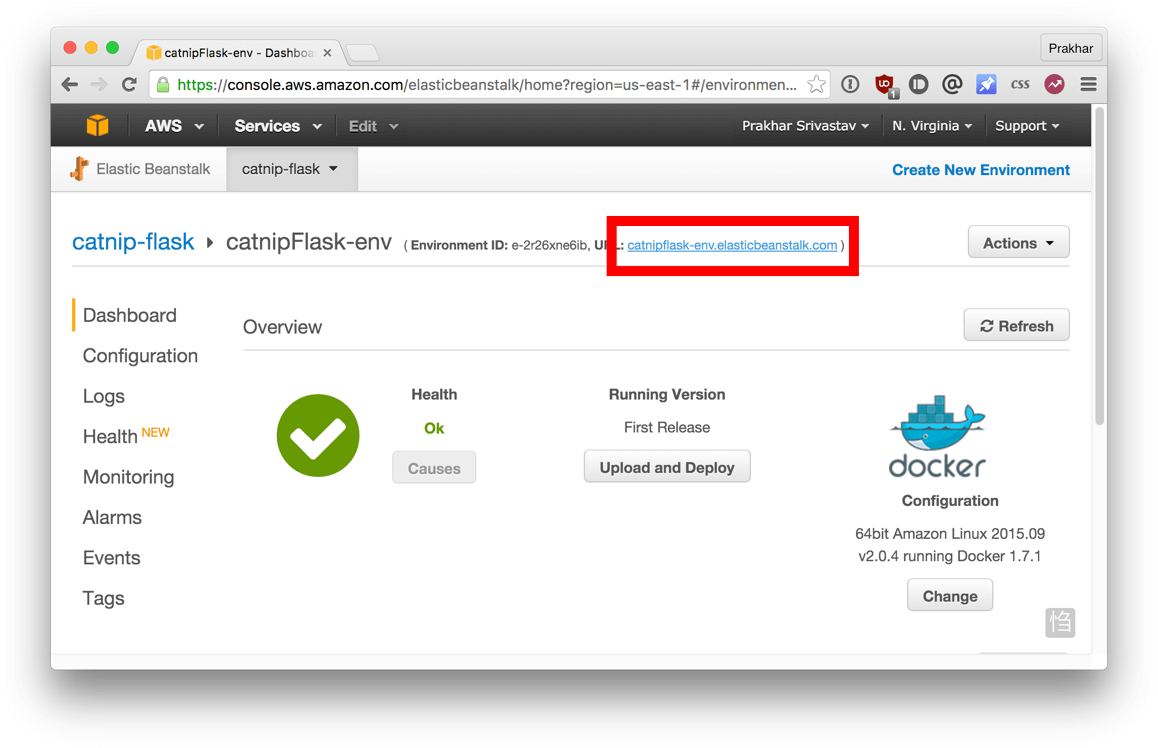

Hopefully by now, our instance should be ready. Head over to the EB page and you should see a green tick indicating that your app is alive and kicking.

Go ahead and open the URL in your browser and you should see the application in all its glory. Feel free to email / IM / snapchat this link to your friends and family so that they can enjoy a few cat gifs, too.

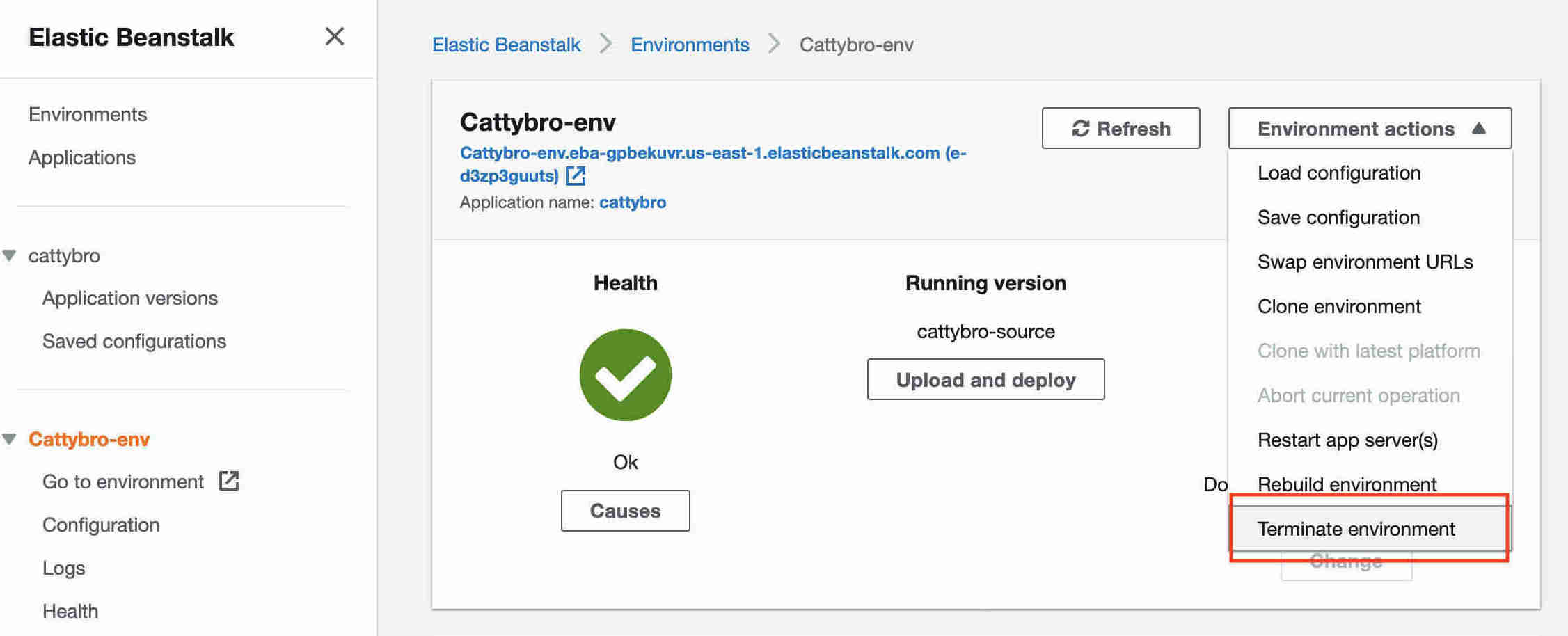

Once you are done basking in the glory of your app, remember to terminate the environment so that you don't end up getting charged for extra resources.

Congratulations! You have deployed your first Docker application! That might seem like a lot of steps, but with the command-line tool for EB you can almost mimic the functionality of Heroku in a few keystrokes! Hopefully, you agree that Docker takes away a lot of the pains of building and deploying applications in the cloud. I would encourage you to read the AWS documentation on single-container Docker environments to get an idea of what features exist.

In the next (and final) part of the tutorial, we'll up the ante a bit and deploy an application that mimics the real world more closely: an app with a persistent back-end storage tier. Let's get straight to it!

Multi-container Environments

In the last section, we saw how easy and fun it is to run applications with Docker. We started with a simple static website and then tried a Flask app, both of which we could run locally and in the cloud with just a few commands. One thing both these apps had in common was that they were running in a single container.

Those of you who have experience running services in production know that apps nowadays are usually not that simple. There's almost always a database (or some other kind of persistent storage) involved. Systems such as Redis and Memcached have become de rigueur in most web application architectures. Hence, in this section we are going to spend some time learning how to Dockerize applications which rely on different services to run.

In particular, we are going to see how we can run and manage multi-container docker environments. Why multi-container you might ask? Well, one of the key points of Docker is the way it provides isolation. The idea of bundling a process with its dependencies in a sandbox (called containers) is what makes this so powerful.

Just like it's a good strategy to decouple your application tiers, it is wise to keep containers for each of the services separate. Each tier is likely to have different resource needs, and those needs might grow at different rates. By separating the tiers into different containers, we can compose each tier using the most appropriate instance type based on different resource needs. This also plays very well with the whole microservices movement, which is one of the main reasons why Docker (or any other container technology) is at the forefront of modern microservices architectures.

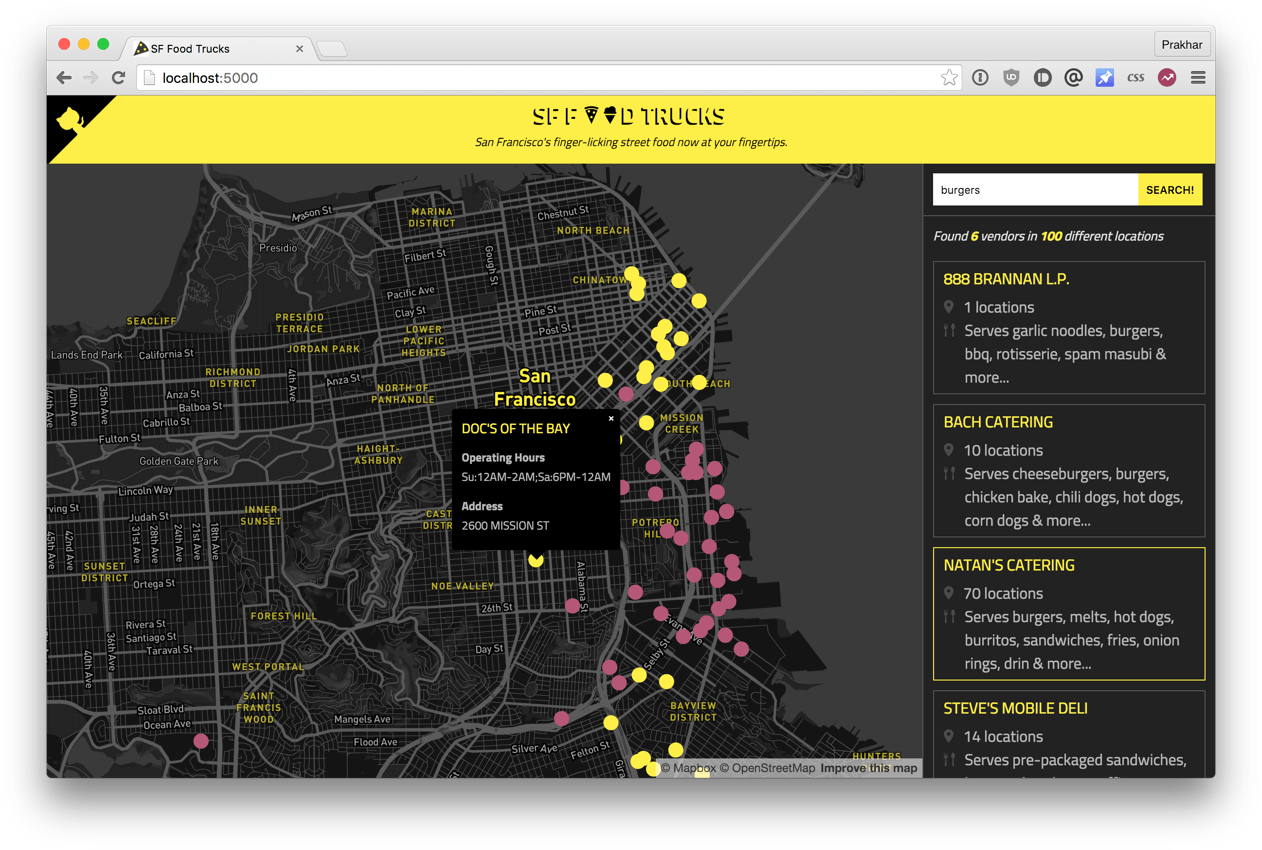

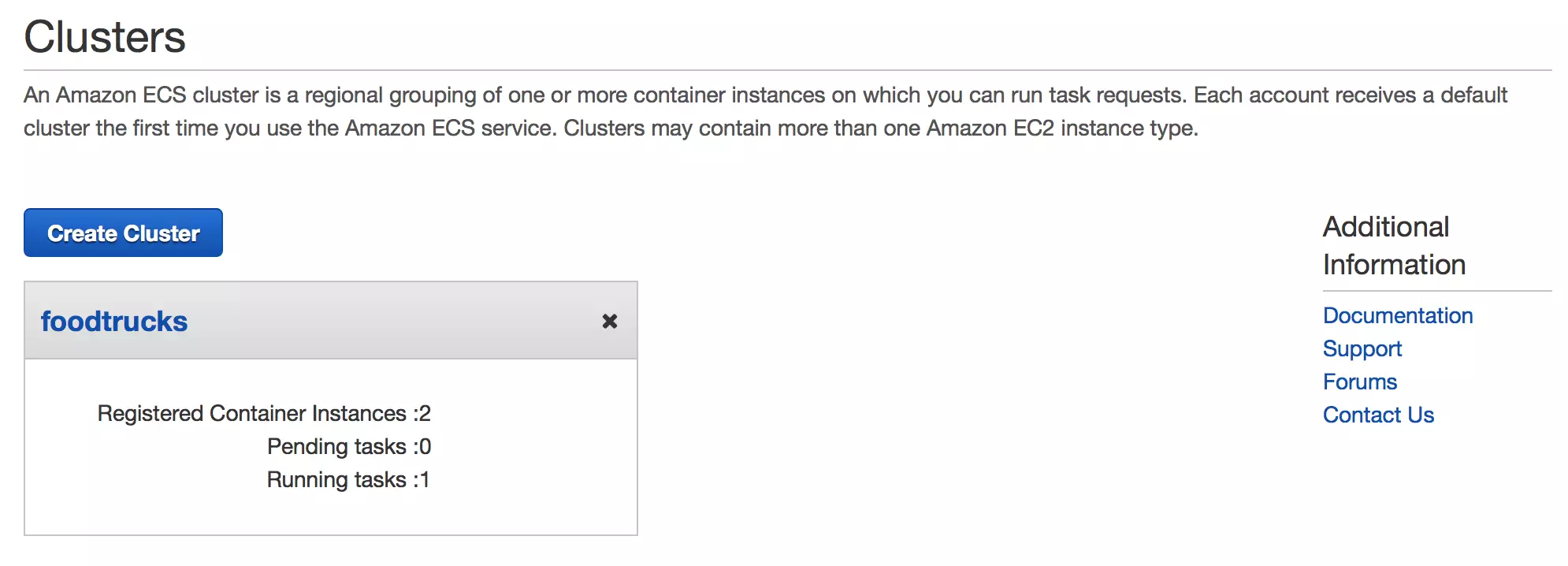

SF Food Trucks

The app that we're going to Dockerize is called SF Food Trucks. My goal in building this app was to have something that is useful (in that it resembles a real-world application), relies on at least one service, but is not too complex for the purpose of this tutorial. This is what I came up with.

The app's backend is written in Python (Flask) and for search it uses Elasticsearch. Like everything else in this tutorial, the entire source is available on GitHub. We'll use this as our candidate application for learning how to build, run and deploy a multi-container environment.

First up, let's clone the repository locally.

The flask-app folder contains the Python application, while the utils folder has some utilities to load the data into Elasticsearch. The directory also contains some YAML files and a Dockerfile, all of which we'll see in greater detail as we progress through this tutorial. If you are curious, feel free to take a look at the files.

Now that you're excited (hopefully), let's think of how we can Dockerize the app. We can see that the application consists of a Flask backend server and an Elasticsearch service. A natural way to split this app would be to have two containers - one running the Flask process and another running the Elasticsearch (ES) process. That way if our app becomes popular, we can scale it by adding more containers depending on where the bottleneck lies.

Great, so we need two containers. That shouldn't be hard right? We've already built our own Flask container in the previous section. And for Elasticsearch, let's see if we can find something on the hub.

Quite unsurprisingly, there exists an officially supported image for Elasticsearch. To get ES running, we can simply use docker run and have a single-node ES container running locally within no time.

Note: Elastic, the company behind Elasticsearch, maintains its own registry for Elastic products. It's recommended to use the images from that registry if you plan to use Elasticsearch.

Let's first pull the image and then run it in development mode, publishing its ports and setting an environment variable that configures the Elasticsearch cluster to run as a single node.

Note: If your container runs into memory issues, you might need to tweak some JVM flags to limit its memory consumption.

We use --name es to give our container a name, which makes it easy to use in subsequent commands. Once the container is started, we can inspect its logs by running docker container logs es. If Elasticsearch started successfully, you should see its startup messages there.

Note: Elasticsearch takes a few seconds to start so you might need to wait before you see initialized in the logs.

Now, let's see if we can send a request to the Elasticsearch container. We use port 9200 to send a cURL request to the container.

Sweet! It's looking good! While we are at it, let's get our Flask container running too. But before we get to that, we need a Dockerfile . In the last section, we used python:3.8 image as our base image. This time, however, apart from installing Python dependencies via pip , we want our application to also generate our minified Javascript file for production. For this, we'll require Nodejs. Since we need a custom build step, we'll start from the ubuntu base image to build our Dockerfile from scratch.

Note: if you find that an existing image doesn't cater to your needs, feel free to start from another base image and tweak it yourself. For most of the images on Docker Hub, you should be able to find the corresponding Dockerfile on GitHub. Reading through existing Dockerfiles is one of the best ways to learn how to roll your own.

Our Dockerfile for the Flask app looks like this:

There are quite a few new things here, so let's quickly go over this file. We start off with the Ubuntu LTS base image and use the package manager apt-get to install the dependencies, namely Python and Node. The -yqq flags suppress output (-qq) and assume "Yes" to all prompts (-y).

We then use the ADD command to copy our application into a new directory in the container, /opt/flask-app. This is where our code will reside. We also set this as our working directory with WORKDIR, so that the following commands run in the context of this location. Now that our system-wide dependencies are installed, we get around to installing app-specific ones. First off, we tackle Node by installing the packages from npm and running the build command as defined in our package.json file. We finish the file off by installing the Python packages, exposing the port and defining the CMD to run, as we did in the last section.

Finally, we can build the image and run the container (replace yourusername with your username below).

On the first run, this will take some time as the Docker client downloads the ubuntu image, runs all the commands and prepares your image. Subsequent builds are faster because Docker reuses the cached layers for every step before the first one affected by your change, such as the system package installs. Now let's try running our app.

Oops! Our Flask app was unable to run because it couldn't connect to Elasticsearch. How do we tell one container about the other container and get them to talk to each other? The answer lies in the next section.

Docker Network

Before we talk about the features Docker provides specifically for such scenarios, let's see if we can figure out a way to work around the problem. Hopefully, this will give you an appreciation for the feature we are going to study.

Okay, so let's run docker container ls (which is the same as docker ps) and see what we have.

So we have one ES container running on 0.0.0.0:9200, which we can access directly. If we can tell our Flask app to connect to this URL, it should be able to connect and talk to ES, right? Let's dig into our Python code and see how the connection details are defined.

To make this work, we need to tell the Flask container that the ES container is running on host 0.0.0.0 (the port by default is 9200), and that should make it work, right? Unfortunately, no. The 0.0.0.0 in the docker ps output means that port 9200 is published on all of the host's network interfaces, so ES is reachable from the host machine, i.e. from my Mac. Another container has its own network stack and cannot reach ES at that address. Okay, if not that IP, then which IP address should the ES container be accessible by? I'm glad you asked.

Now is a good time to start exploring networking in Docker. When Docker is installed, it creates three networks automatically: bridge, host, and none.

The bridge network is the network in which containers are run by default. So that means that when I ran the ES container, it was running in this bridge network. To validate this, let's inspect the network.

You can see that our container 277451c15ec1 is listed under the Containers section in the output. We can also see the IP address this container has been allotted: 172.17.0.2. Is this the IP address that we're looking for? Let's find out by running our Flask container and trying to access this IP.

This should be fairly straightforward by now. We start the container in interactive mode with a bash process. The --rm flag is convenient for running one-off commands, since the container gets cleaned up when its work is done. We try curl, but we need to install it first. Once we do that, we see that we can indeed talk to ES on 172.17.0.2:9200. Awesome!
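To recap the addresses we've met so far, Python's standard ipaddress module can classify them. This is a small illustrative sketch: the describe helper is hypothetical, it assumes default bridge networking, and the 172.17.0.0/16 subnet is the usual default for Docker's bridge network rather than a guarantee, so check the Subnet value in your own docker network inspect bridge output.

```python
import ipaddress

# Usual default subnet of Docker's bridge network (an assumption: confirm it
# under IPAM -> Config -> Subnet in `docker network inspect bridge`).
BRIDGE_SUBNET = ipaddress.ip_network("172.17.0.0/16")


def describe(addr):
    """Say whether `addr` is something another container could connect to."""
    ip = ipaddress.ip_address(addr)
    if ip.is_unspecified:
        return "unspecified: a bind address meaning 'all interfaces', not a target"
    if ip.is_loopback:
        # Each container on a bridge network has its own loopback interface.
        return "loopback: inside a container this is the container itself"
    if ip in BRIDGE_SUBNET:
        return "bridge: reachable from other containers on the same bridge network"
    return "other"


for addr in ("0.0.0.0", "127.0.0.1", "172.17.0.2"):
    print(f"{addr:>12} -> {describe(addr)}")
```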

Although we have figured out a way to make the containers talk to each other, there are still two problems with this approach:

How do we tell the Flask container that the hostname es stands for 172.17.0.2, or some other IP, given that the IP can change?

Since the bridge network is shared by every container by default, this method is not secure. How do we isolate our network?

The good news is that Docker has a great answer to our questions. It allows us to define our own isolated networks using the docker network command.

Let's first go ahead and create our own network.

The network create command creates a new bridge network, which is what we need at the moment. In terms of Docker, a bridge network uses a software bridge which allows containers connected to the same bridge network to communicate, while providing isolation from containers which are not connected to that bridge network. The Docker bridge driver automatically installs rules on the host machine so that containers on different bridge networks cannot communicate directly with each other. There are other kinds of networks that you can create, and you are encouraged to read about them in the official docs.

Now that we have a network, we can launch our containers inside it using the --net flag (an alias of --network). Let's do that, but first, in order to launch a new container with the same name, we will stop and remove our ES container that is running in the bridge (default) network.

As you can see, our es container is now running inside the foodtrucks-net bridge network. Now let's inspect what happens when we launch a container in our foodtrucks-net network.

Woohoo! That works! On user-defined networks like foodtrucks-net, containers can not only communicate by IP address, but can also resolve a container name to an IP address. This capability is called automatic service discovery. Great! Let's launch our Flask container for real now.

Head over to http://0.0.0.0:5000 and see your glorious app live! Although that might have seemed like a lot of work, we actually typed just 4 commands to go from zero to running. I've collated the commands in a bash script.

Now imagine you are distributing your app to a friend, or running it on a server that has Docker installed. You can get a whole app running with just one command!

And that's it! If you ask me, this is an extremely awesome and powerful way of sharing and running your applications!
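From the application's point of view, the automatic service discovery on foodtrucks-net is plain DNS: code inside a container on that network can look up the name es like any other hostname. A minimal sketch; the resolve helper is hypothetical, and a lookup of es only succeeds from a container attached to that network.

```python
import socket


def resolve(hostname):
    """Return the IPv4 address `hostname` resolves to, or None if lookup fails."""
    try:
        return socket.gethostbyname(hostname)
    except OSError:
        # socket.gaierror and socket.herror are both OSError subclasses.
        return None
```

Inside the Flask container, resolve("es") should return the ES container's current address on foodtrucks-net, while on your host it will typically return None. Looking the name up instead of hard-coding 172.17.0.2 is what keeps the setup working if the container gets a new IP.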

Docker Compose

Until now, we've spent all our time exploring the Docker client. In the Docker ecosystem, however, there are a bunch of other open-source tools which play very nicely with Docker. A few of them are:

- Docker Machine - Create Docker hosts on your computer, on cloud providers, and inside your own data center

- Docker Compose - A tool for defining and running multi-container Docker applications.

- Docker Swarm - A native clustering solution for Docker

- Kubernetes - Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.

In this section, we are going to look at one of these tools, Docker Compose, and see how it can make dealing with multi-container apps easier.

The background story of Docker Compose is quite interesting. Around January 2014, a company called OrchardUp launched a tool called Fig. The idea behind Fig was to make isolated development environments work with Docker. The project was very well received on Hacker News. I remember reading about it at the time but didn't quite get the hang of it.

The first comment on the forum actually does a good job of explaining what Fig is all about.

So really at this point, that's what Docker is about: running processes. Now Docker offers a quite rich API to run the processes: shared volumes (directories) between containers (i.e. running images), forward port from the host to the container, display logs, and so on. But that's it: Docker as of now, remains at the process level.

While it provides options to orchestrate multiple containers to create a single "app", it doesn't address the management of such group of containers as a single entity. And that's where tools such as Fig come in: talking about a group of containers as a single entity. Think "run an app" (i.e. "run an orchestrated cluster of containers") instead of "run a container".

It turns out that a lot of people using Docker agree with this sentiment. Slowly and steadily, as Fig became popular, Docker Inc. took notice, acquired the company and rebranded Fig as Docker Compose.

So what is Compose used for? Compose is a tool that is used for defining and running multi-container Docker apps in an easy way. It provides a configuration file called docker-compose.yml that can be used to bring up an application and the suite of services it depends on with just one command. Compose works in all environments: production, staging, development, testing, as well as CI workflows, although Compose is ideal for development and testing environments.

Let's see if we can create a docker-compose.yml file for our SF-Foodtrucks app and evaluate whether Docker Compose lives up to its promise.

The first step, however, is to install Docker Compose. If you're running Windows or Mac, Docker Compose is already installed, as it ships with Docker Desktop (and with the older Docker Toolbox). Linux users can easily get their hands on Docker Compose by following the instructions in the docs. Since the original Compose (v1) is written in Python, you can also simply run pip install docker-compose. Test your installation by running docker-compose --version.

Now that we have it installed, we can move on to the next step, i.e. the Docker Compose file docker-compose.yml. The syntax for YAML is quite simple, and the repo already contains the docker-compose file that we'll be using.

Let me break down what the file above means. At the parent level, we define the names of our services: es and web. For each service that we want Docker to run, we can add additional parameters; the image parameter names the image to run (every service needs either image or build). For es, we just refer to the elasticsearch image available on the Elastic registry. For our Flask app, we refer to the image that we built at the beginning of this section.

Other parameters such as command and ports provide more information about the container. The volumes parameter specifies a mount point in our web container where the code will reside. This is purely optional and is useful if you need access to logs, etc. We'll later see how this can be useful during development. Refer to the online reference to learn more about the parameters this file supports. We also add volumes for the es container so that the data we load persists between restarts. We also specify depends_on, which tells Docker to start the es container before web. Note that depends_on only controls start order; it does not wait for Elasticsearch to be ready to accept requests. You can read more about it in the Docker Compose docs.

Note: You must be inside the directory with the docker-compose.yml file in order to execute most Compose commands.

Great! Now that the file is ready, let's see docker-compose in action. But before we start, we need to make sure the ports and names are free. So if you have the Flask and ES containers running, let's turn them off.

Now we can run docker-compose. Navigate to the food trucks directory and run docker-compose up.

Head over to the IP to see your app live. That was amazing, wasn't it? Just a few lines of configuration and we have two Docker containers running successfully in unison. Let's stop the services and re-run them in detached mode.

Unsurprisingly, we can see both the containers running successfully. Where do the names come from? Those were created automatically by Compose. But does Compose also create the network automatically? Good question! Let's find out.

First off, let's stop the services. We can always bring them back up with just one command. Data volumes will persist, so it’s possible to start the cluster again with the same data using docker-compose up. To destroy the cluster and the data volumes, just type docker-compose down -v.

While we're at it, we'll also remove the foodtrucks-net network that we created last time.

Great! Now that we have a clean slate, let's re-run our services and see if Compose does its magic.

So far, so good. Time to see if any networks were created.

You can see that Compose went ahead and created a new network called foodtrucks_default and attached both new services to that network, so that each of them is discoverable by the other. Each container for a service joins the default network and is both reachable by other containers on that network and discoverable by them at a hostname identical to the service name (here, es and web).

Development Workflow

Before we jump to the next section, there's one last thing I wanted to cover about docker-compose. As stated earlier, docker-compose is really great for development and testing. So let's see how we can configure compose to make our lives easier during development.

Throughout this tutorial, we've worked with ready-made Docker images. While we've built images from scratch, we haven't touched any application code yet and have mostly restricted ourselves to editing Dockerfiles and YAML configurations. One thing you might be wondering is what the workflow looks like during development. Is one supposed to keep creating Docker images for every change, then publish them and then run them to see if the changes work as expected? I'm sure that sounds super tedious. There has to be a better way, and that's what we're going to explore in this section.

Let's see how we can make a change in the Foodtrucks app we just ran. Make sure you have the app running.

Now let's see if we can change this app to display a Hello world! message when a request is made to the /hello route. Currently, the app responds with a 404.
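Before touching the real app, here's the behavior we're after, sketched with Python's built-in http.server rather than Flask. The app itself uses Flask, so this is only a stand-in for illustration, not the change we'll make: GET /hello returns Hello world!, and any other path returns a 404. The HelloHandler and make_server names and the default port 5000 are assumptions.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in: answers /hello and returns 404 for everything else."""

    def do_GET(self):
        if self.path == "/hello":
            body = b"Hello world!"
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)


def make_server(port=5000):
    # Bind on all interfaces: inside a container, a server listening only on
    # 127.0.0.1 would not be reachable through a published port.
    return HTTPServer(("0.0.0.0", port), HelloHandler)
```

Running make_server().serve_forever() and requesting localhost:5000/hello should return the greeting, while any other path gets a 404.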